Science of Security (SoS) Newsletter (2015 - Issue 5)

Each issue of the SoS Newsletter highlights achievements in current research, as conducted by various global members of the Science of Security (SoS) community. All presented materials are open-source, and may link to the original work or web page for the respective program. The SoS Newsletter aims to showcase the great deal of exciting work going on in the security community, and hopes to serve as a portal between colleagues, research projects, and opportunities.

Please feel free to click on any section of the Newsletter listed below, which will bring you to its corresponding subsections:

- In the News

- Conferences

- Publications of Interest

- Upcoming Events of Interest

Publications of Interest

The Publications of Interest section provides available abstracts and links for suggested academic and industry literature discussing specific topics and research problems in the field of SoS. Please check back regularly for new information, or sign up for the CPSVO-SoS Mailing List.

Table of Contents

Science of Security (SoS) Newsletter (2015 - Issue 5)

- In the News (2015 - Issue 5)

- International Security Related Conferences (2015 - Issue 5)

- International Conferences: Software Analysis, Evolution and Reengineering (SANER) Quebec, Canada

- International Conferences: Cloud Engineering (IC2E), 2015 Arizona

- International Conferences: CODASPY 15, San Antonio, Texas

- International Conferences: Cryptography and Security in Computing Systems, 2015, Amsterdam

- International Conferences: Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), 2015 Singapore

- International Conferences: Software Testing, Verification and Validation Workshops (ICSTW), Graz, Austria

- International Conferences: Workshop on Security and Privacy Analytics (IWSPA) ’15, San Antonio, Texas

- Publications of Interest (2015 - Issue 5)

- Cryptology and Data Security, 2014

- Deterrence

- Deterrence, 2014 (ACM Publications)

- Elliptic Curve Cryptography from ACM, 2014, Part 1

- Elliptic Curve Cryptography from ACM, 2014, Part 2

- Hard Problems: Predictive Security Metrics (ACM)

- Hard Problems: Predictive Security Metrics (IEEE)

- Hard Problems: Resilient Security Architectures (ACM)

- Journal: IEEE Transactions on Information Forensics and Security, March 2015

- Malware Analysis, 2014, Part 1 (ACM)

- Malware Analysis, 2014, Part 2 (ACM)

- Malware Analysis, Part 1

- Malware Analysis, Part 2

- Malware Analysis, Part 3

- Malware Analysis, Part 4

- Malware Analysis, Part 5

- Resilient Security Architectures (IEEE)

- Smart Grid Security

- Software Security, 2014 (ACM), Part 1

- Software Security, 2014 (ACM), Part 2

- Software Security, 2014 (IEEE), Part 1

- Software Security, 2014 (IEEE), Part 2

- Trustworthy Systems, Part 1

- Trustworthy Systems, Part 2

- SoS Lablet Quarterly Meeting - CMU

- Upcoming Events of Interest (2015 - Issue 5)

(ID#:15-5926)

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via Email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.

In the News

This section features topical, current news items of interest to the international security community. These articles and highlights are selected from various popular science and security magazines, newspapers, and online sources.

US News

"Feds Extradite 'Most Wanted' ATM Hacker", GovInfoSecurity, 24 June 2015. [Online]. After his arrest by German police in late 2013, Turkish citizen Ercan Findikoglu is set to face trial in New York on June 23rd. Findikoglu is accused of stealing from ATMs since 2008, netting himself close to $60 million. He and his partners-in-crime used a hacking technique targeted at credit card processors, allowing them to withdraw vast sums of money from compromised accounts. (ID#: 15-50342) See http://www.govinfosecurity.com/feds-extradite-most-wanted-atm-hacker-a-8341

"iOS 9, Android M Place New Focus On Security, Privacy", Information Week, 24 June 2015. [Online]. Managing permissions on Apple and Android mobile devices has become a major battle between users and app developers, who often seek valuable user information for targeted advertising. Google and Apple both have announced plans to give the user more control over privacy and encryption with tools like iOS 9's "App Transport Security" tool. (ID#: 15-50345) See http://www.informationweek.com/it-life/ios-9-android-m-place-new-focus-on-security-privacy/a/d-id/1321005?

"Swift Key Vulnerability on Samsung Phones", Information Security Buzz, 24 June 2015. [Online]. Swift Key, a pre-installed keyboard software that comes on Samsung phones and cannot be disabled, was found to have a severe security flaw. When exploited, hackers can remotely control network traffic and execute high-privileged code. (ID#: 15-50346) See http://www.informationsecuritybuzz.com/swift-key-vulnerability-on-samsung-phones/

"NIST releases cyber security guidelines for government contractors", Cyber Defense Magazine, 24 June 2015. [Online]. NIST has released "Publication Citation: Protecting Controlled Unclassified Information in Nonfederal Information Systems and Organizations", a guideline on how federal agencies should handle the way they allow contractors and "outsiders" to handle their sensitive data. (ID#: 15-50361) See http://www.cyberdefensemagazine.com/nist-releases-cyber-security-guidelines-for-government-contractors/

"Hackers targeted the Polish Airline LOT, grounded 1,400 Passengers", Cyber Defense Magazine, 23 June 2015. [Online]. Over 1,400 passengers hoping to fly on flights with Polish carrier LOT had wrench throw in their travel plans when a cyber attack forced LOT to cancel 10 flights. The attack affected ground computer systems, preventing the creation of flight plans for outbound flights. The attack was resolved within five hours. (ID#: 15-50362) See http://www.cyberdefensemagazine.com/hackers-targeted-the-polish-airline-lot-grounded-1400-passengers/

"Major Banking Corporation Investing in Quantum Cryptography Company", Information Security Buzz, 23 June 2015. [Online]. The banking industry is becoming increasingly interested in the security that quantum cryptography can provide. QLabs has developed a way to use quantum technology to encrypt sensitive data with keys that are "unhackable", with help from banking investors. Though quantum cryptography has a great deal of potential, it is not a "fix-all" for cybersecurity issues. (ID#: 15-50347) See http://www.informationsecuritybuzz.com/major-banking-corporation-investing-in-quantum-cryptography-company/

"There's Another Adobe Flash Zero-Day And Chinese Hackers Are Abusing It", Forbes, 23 June 2015. [Online]. A group of Chinese hackers known as APT3 have been sending uncharacteristically unimaginative and generic phishing emails to exploit an Adobe Flash Zero-day. Once in a network, the attackers use advanced C&C techniques, backdoors, and other tricks to spy and steal information. The flaw, CVE-2015-3113, affects Internet Explorer and Firefox on certain operating systems. (ID#: 15-50348) See http://www.forbes.com/sites/thomasbrewster/2015/06/23/adobe-flash-zero-day-used-by-china-hackers/?ss=Security

"Facebook Makes It Free And Easy To Kill Latest Mac And iPhone Zero-Days", Forbes, 23 June 2015. [Online]. Facebook has developed "osquery", a tool to help detect vulnerabilities in Apple desktop and mobile operating systems. Osquery was developed in response to the discovery of cross-app resource attack vulnerability by Indiana University Bloomington researcher Luyi Xing. (ID#: 15-50349) See http://www.forbes.com/sites/thomasbrewster/2015/06/23/facebook-offers-apple-zero-day-protection/?ss=Security

"OPM Director Rejects Blame for Breach", GovInfoSecurity, 23 June 2015. [Online]. OPM Director Katherine Archuleta testified to a Senate panel that nobody in the OPM should be held personally accountable for the massive OPM breach, leaving the panel and many others with frustration over the lack of "clear lines of accountability". The blame, according to Archuleta, rests in the legacy systems used by the agency, as well as the attackers themselves. (ID#: 15-50343) See http://www.govinfosecurity.com/opm-director-rejects-blame-for-breach-a-8338

"IRS, industry to share data to fight tax fraud", GCN, 23 June 2015. [Online]. The IRS is taking steps towards fighting tax fraud by leveraging data sharing with industry. By using analytics and shared data, the IRS hopes to be able to better validate tax returns. (ID#: 15-50357) See http://gcn.com/articles/2015/06/23/irs-industry-tax-fraud-fight.aspx?admgarea=TC_SecCybersSec

"FitBit, Acer Liquid Leap Fail In Security Fitness", Dark Reading, 22 June 2016. [Online]. Security testing organization AV-TEST evaluated nine different fitness tracking devices, and found that all were lacking to at least a small degree in Bluetooth security. Some of them — especially the Acer Liquid Leap and FitBit Charge — are host to numerous serious security threats, including lack of code obfuscation and pairing with a smartphone without confirmation. (ID#: 15-50339) See http://www.darkreading.com/endpoint/fitbit-acer-liquid-leap-fail-in-security-fitness/d/d-id/1320984

"Getting Proactive Against Insider Threats", Security Week, 22 June 2015. [Online]. Mitigating insider threats requires a proactive, "forward-leaning" approach: allocating capital towards preventative measures, using "hyper-vigilant" security models, and focusing on the critical assets can vastly decrease the likeliness of suffering from a malicious insider. (ID#: 15-50350) See http://www.securityweek.com/getting-proactive-against-insider-threats

"How encryption keys could be stolen by your lunch", Computerworld, 22 June 2015. [Online]. Israeli researchers have developed a tool that can sniff electromagnetic signals from a computer and extrapolate information. Fittingly named "PITA", the device is around the size of a small piece of pita bread. It has been designed to sniff encryption keys from laptops running the open-source encryption software GnuPG 1.x. (ID#: 15-50354) See http://www.computerworld.com/article/2938753/security/how-encryption-keys-could-be-stolen-by-your-lunch.html

"Toshiba Working on Unbreakable Encryption Technology", The Wall Street Journal, 22 June 2014. [Online]. Toshiba has plans for a completely unbreakable encryption technology that relies on one of the fundamental concepts of the quantum world: observing a particle must change, in some manner, the state of the particle. One-time use encryption keys would be transmitted on a custom fiber optic cable; any attempt to steal these keys would change the data and make the snooping detectable. (ID#: 15-50355) See http://blogs.wsj.com/digits/2015/06/22/toshiba-working-on-unbreakable-encryption-technology/

"RubyGems Software Flaw Affects Millions of Installs", Infosecurity Magazine, 22 June 2015. [Online]. Researchers have discovered a security flaw in RubyGems, a distribution software that helps businesses and developers distribute software. The flaw allows attackers to bypass HTTPS and thereby force redirection to their own "gem servers", allowing them to distribute malware. (ID#: 15-50358) See http://www.infosecurity-magazine.com/news/rubygems-software-flaw-affects/

"Cybercrime is paying with 1,425% return on investment", Cyber Defense Magazine, 22 June 2015. [Online]. A report by Trustwave indicates that the typical cybercriminal should expect to make a 1,425% return on the money they spend executing attacks. They found that ransomware and exploit kits are among the most common methods used to compromise a victim's systems, along with extortion and ransoms. CTB-Locker was found to be one of the more notable pieces of malware. (ID#: 15-50363) See http://www.cyberdefensemagazine.com/cybercrime-is-paying-with-1425-return-on-investment/

"Underwriters of cyberinsurance policies need better understanding of cyber risks", Homeland Security Newswire, 19 June 2015. [Online]. With cyberattacks increasingly becoming a norm in the business world, cybersecurity insurance is becoming more popular. For the cyber insurance market to succeed, insurance underwriters need to have a firm grasp on the severity and significance of varying forms of cyber risks. This is made difficult by the constantly changing, dynamic nature of cyber threats. (ID#: 15-50353) See http://www.homelandsecuritynewswire.com/dr20150619-underwriters-of-cyberinsurance-policies-need-better-understanding-of-cyber-risks

"UC Irvine Medical Center announces breach affecting 4,859 patients", SC Magazine, 19 June 2015. [Online]. University of California (UC) Irvine Medical Center announced that it suffered a breach, affecting nearly 5,000 patients. The perpetrator was a single employee, who is said to have accessed records over the course of four years for a non-job-related purpose. (ID#: 15-50364) See http://www.scmagazine.com/uc-irvine-medical-center-announces-breach-affecting-4859-patients/article/421645/

"New Apple iOS, OS X Flaws Pose Serious Risk", Dark Reading, 18 June 2015. [Online]. Indiana University in Bloomington researchers discovered vulnerabilities in iOS and OS X that put passwords and other sensitive data at risk. The fault lies in a weakness in application isolation and authentication that could allow cross-application resource access attacks (XARAs). (ID#: 15-50340) See http://www.darkreading.com/endpoint/new-apple-ios-os-x-flaws-pose-serious-risk/d/d-id/1320949

"Routers Becoming Juicy Targets for Hackers", Tech News World, 18 June 2015. [Online]. Router vulnerabilities have become an item of increased interest for hackers, as they are often "low-hanging fruit": full of security holes and often under appreciated by users and ISPs. Backdoors and cross-site request forgery attacks make it easy for attackers to use routers to gain access to networks or mount Denial of Service attacks. (ID#: 15-50352) See http://www.technewsworld.com/story/82188.html

"White House: IRS, OMB appropriations bill increases cyber risk", FCW, 18 June 2015. [Online]. A bill approved by the House Appropriations Committee is set to reduce the IRS budget by nearly a billion dollars. Supporters point out the increased need for money elsewhere; however, others argue that the IRS needs money to better secure itself against attacks, such as the recent theft of taxpayer data. (ID#: 15-50356) See http://fcw.com/articles/2015/06/18/appropriations-cyber-risk.aspx

"U.S. Coast Guard commandant releases cyber strategy", GSN, 17 June 2015. [Online]. The U.S. Coast Guard has rolled out a new cyber strategy to help "ensure the prosperity and security of the nation's Maritime Transportation System (MTS) in the face of a rapidly evolving cyber domain." The framework is based on three priorities: maintaining information networks, combat adversaries by means of cyber capabilities, and to protect critical infrastructure. (ID#: 15-50351) See http://gsnmagazine.com/node/44709?c=cyber_security

"FBI Investigates Baseball Hack Attack", GovInfoSecurity, 17 June 2015. [Online]. An alleged cyber attack on the Houston Astros baseball team by rival team St. Louis Cardinals is being investigated by the FBI and DoJ. If it did indeed happen, the hack might be related to a data leak the Astros suffered in 2014, in which the team's internal trade talks were leaked onto a text-sharing site. (ID#: 15-50344) See http://www.govinfosecurity.com/fbi-investigates-baseball-hack-attack-a-8321

"New Malware Found Hiding Inside Image Files", Dark Reading, 16 June 2015. [Online]. Dell researchers published their findings on Stegoloader, a malware family that uses PNG files to store malicious code. This technique is a form of digital stenography, which has been used by malware authors for years; however, researchers fear that Stegoloader might indicate a new resurgence in stenography and similar countermeasures. (ID#: 15-50341) See http://www.darkreading.com/endpoint/new-malware-found-hiding-inside-image-files/d/d-id/1320895

"Wikipedia to Switch on HTTPS to Counter Surveillance Threat", InfoSecurity Magazine, 15 June 2015. [Online]. The Wikimedia Foundation announced that Wikipedia will be switching to HTTPS to hamper censorship and surveillance. The transition has been slow, as Wikimedia has been optimizing performance for people in regions where telecommunications infrastructure is less advanced. (ID#: 15-50326) See http://www.infosecurity-magazine.com/news/wikipedia-switch-https-counter/

"Update: Russia and China cracked Snowden's files, identified U.S., UK spies", Computerworld, 14 June 2015. [Online]. Agents with the U.K.'s MI6 have reportedly been withdrawn from foreign nations after Russian and Chinese agencies decrypted Snowden files that revealed the identities of UK and US agents. (ID#: 15-50329) See http://www.computerworld.com/article/2935558/cybercrime-hacking/russia-and-china-cracked-snowdens-files-identified-us-uk-spies.html

"Private security clearance info accessed in second OPM breach", SC Magazine, 13 June 2015. [Online]. In the wake of the massive OPM breach, the agency revealed that it suffered a separate breach. The attackers appear to have accessed Standard Form 86 documents, which contain information on not only security clearance applicants themselves but relatives and associates as well. (ID#: 15-50323) See http://www.scmagazine.com/detailed-sf-86-forms-may-have-been-tapped-by-chinese-operatives/article/420581/

"OPM's 'Cyber Pearl Harbor' might affect 14 million", FCW, 12 June 2015. [Online]. The OPM breach was first thought to have exposed sensitive information from 4 million current and former federal employees, though there is reason to believe that the number might be closer to 14 million, according to a scathing letter by the American Federation of Government Employees. (ID#: 15-50322) See http://fcw.com/articles/2015/06/12/opm-breach-gets-worse.aspx

"Apple iOS flaw exploitable to steal user password with a phishing email", Cyber Defense Magazine, 12 June 2015. [Online]. Forensics experts were able to create the iOS 8.3 Mail.app inject kit, which uses a vulnerability in Apple's default email app to inject HTML and collect user's passwords. Millions of users are vulnerable to the bug, which remains unpatched as of yet. (ID#: 15-50325) See http://www.cyberdefensemagazine.com/apple-ios-flaw-exploitable-to-steal-user-password-with-a-phishing-email/

"Chinese Hackers Circumvent Popular Web Privacy Tools", New York Times, 12 June 2015. [Online]. Silicon Valley security company AlienVault discovered that Chinese hackers have been able to circumvent TOR and VPNs, tools which Chinese citizens and journalists use to bypass China's internet censorship. Attackers targeted the websites of journalists and ethnic minorities, stealing personal info including names, addresses, and browser cookies. (ID#: 15-50334) See http://www.nytimes.com/2015/06/13/technology/chinese-hackers-circumvent-popular-web-privacy-tools.html?_r=1

"Hundreds Of Wind Turbines And Solar Systems Wide Open To Easy Exploits", Forbes, 12 June 2015. [Online]. German researcher Maxim Rupp discovered serious flaws in several clean energy systems, including wind turbines and solar-powered lighting. The vulnerabilities, which could allow an attacker to remotely disable or control the systems, were given a severity rating of 10 out of 10 due to their ease of implementation. (ID#: 15-50336) See http://www.forbes.com/sites/thomasbrewster/2015/06/12/hacking-wind-solar-systems-is-easy/?ss=Security

"Duqu2.0 knocks Kaspersky and security peers", SC Magazine, 11 June 2015. [Online]. After recovering from a major attack, Kaspersky Labs believes it was the victim of a version 2.0 of the highly sophisticated Duqu malware. Though it relies on numerous particularly advanced techniques and three individual zero-day vulnerabilities, the delivery method was nothing new — a simple email attachment. (ID#: 15-50321) See http://www.scmagazine.com/duqu20-knocks-kaspersky-and-security-peers/article/420251/

"SC Congress Toronto: Experts discuss incident response in a breach era", SC Magazine, 11 June 2015. [Online]. Security experts converged on the SC Congress Toronto to discuss management of and response to breaches. Transparency, straightforwardness, and a lack of speculation can help prevent further problems in the wake of a breach, while proper preparation can mitigate potential future security incidents. (ID#: 15-50324) See http://www.scmagazine.com/sc-congress-toronto-panel-plies-ways-to-improve-incident-response/article/420231/

"Why Organisations Should Consider Cloud Based Disaster Recovery", InformationSecurity Buzz, 11 June 2015. [Online]. Recent large-scale security issues with cloud-based data storage have damaged trust in cloud-based tech; however, cloud-based disaster recovery may not be under fair consideration by many businesses. Whereas "in-house" data takes up resources, a cloud-based solution can be used by an organization to make quick and flexible responses to problems without the need for worrying about hardware. (ID#: 15-50337) See http://www.informationsecuritybuzz.com/why-organisations-should-consider-cloud-based-disaster-recovery/

"Russia Pegged for ‘Cyber Caliphate’ Attack on TV5Monde", InfoSecurity Magazine, 10 June 2015. [Online]. Government-supported Russian hacking group APT28 is believed to be behind an attack on French television company TV5Monde this past Spring, which caused several channels to go down. The code was written in Cyrillic during St. Petersburg / Moscow business hours, and used the same server and registrar that has been used by the group in the past. (ID#: 15-50327) See http://www.infosecurity-magazine.com/news/russia-pegged-cyber-caliphate/

"VMware patches virtual machine escape flaw on Windows", Computerworld, 10 June 2015. [Online]. VMware has fixed an issue with a Windows printer interface feature in its virtual machine software that would allow hackers to jump out of the VM and execute code on the host operating system. This vulnerability, along with a few others that were also patched, would have helped facilitate DoS attacks. (ID#: 15-50330) See http://www.computerworld.com/article/2934185/security0/vmware-patches-virtual-machine-escape-flaw-on-windows.html

"Army fights a two-front cyber war", FCW, 10 June 2015. [Online]. A recent attack on the public website of the U.S. Army, believed to have been orchestrated by Syrian hackers, took down the website for several hours. The Army has taken extensive measures to keep communications systems secure, though high-level cyber personnel believe there is still work to be done — especially when it comes to fielded systems. (ID#: 15-50333) See http://fcw.com/articles/2015/06/10/army-fights-cyber-war.aspx

"Can the power grid survive a cyberattack?", Homeland Security Newswire, 10 June 2015. [Online]. As America's economy and capabilities become increasingly reliant on an already stressed power grid, the threat of cyber attack is becoming an item of concern. The increasing connectivity and networking of power systems could open new vectors from which to attack. Further research and development into securing the power grid may be one of the most significant challenges for our generation. (ID#: 15-50335) See http://www.homelandsecuritynewswire.com/dr20150610-can-the-power-grid-survive-a-cyberattack

"Flash Malware Soars Over 300% in Q1 2015", InfoSecurity Magazine, 09 June 2015. [Online]. A recent and dramatic rise in the prevalence of flash malware could be due to the CTB-Locker and other new malware families. These advanced examples of malware are very hard to detect; for example, CTB-locker uses C&C servers on the TOR network, as well as self-healing viruses that re-download themselves even after reformatting the HDD. (ID#: 15-50328) See http://www.infosecurity-magazine.com/news/flash-malware-soars-over-300-in-q1/

"Google: Let the machines remember the password", GCN, 09 June 2015. [Online]. Google’s Advanced Technology and Projects group has developed Project Vault, a microSD-based computing environment that uses complete encryption and other features to securely store and transmit data, especially passwords and biometric data. Google hopes that this technology, along with advanced behavioral biometrics being developed under Project Abacus, will vastly improve outdate alphanumeric password-based security. (ID#: 15-50331) See http://gcn.com/articles/2015/06/09/google-project-vault-abacus.aspx?admgarea=TC_SecCybersSec

"White House Calls For Encryption By Default On Federal Websites By Late 2016", Dark Reading, 09 June 2015. [Online]. With a mere third of federal agencies employing encrypted, HTTPS websites, the Office of Management and Budget (OMB) has decided on a deadline (December 31, 2016) by which all agencies will be required to use HTTPS. This move will help, but not fix, security issues: "HTTPS-only guarantees the integrity of the connection between two systems, not the systems themselves." (ID#: 15-50338) See http://www.darkreading.com/application-security/white-house-calls-for-encryption-by-default-on-federal-websites-by-late-2016/d/d-id/1320789

"Office 365 gets additional email protections", GCN, 08 June 2015. [Online]. Microsoft is offering Office 365 customers additional protection against malicious emails. Exchange Online Advanced Threat Protection is available to government and commercial customers; non-profit and educational organizations will have to wait till a later date. The software detects malicious attachments, blocks unsafe links, and lets IT staff track malicious links that users have clicked. (ID#: 15-50332) See http://gcn.com/articles/2015/06/08/office-365-email-apt.aspx?admgarea=TC_SecCybersSec

"Russia Blamed for Data Stealing Attack on German Parliament", Infosecurity Magazine, 05 June 2015. [Online]. Almost a month after the IT staff at Bundestag (the German Parliament) noticed that attackers were trying to penetrate their computer network, an investigation has indicated that the attack was likely state-sponsored. The trojan used in the attack resembles malware used previously in a suspected Russian attack on German computer networks, and given tensions between Russians and the West over Ukraine, many are pointing to the Russia as a culprit. (ID#: 15-50294) See http://www.infosecurity-magazine.com/news/russia-blamed-data-stealing-attack/

"Auditing GitHub users’ SSH key quality", Benjojo.co.uk (Blog post), 05 June 2015. [Online]. Analysis of nearly 1.4 million publicly-available GitHub SSH keys reveals that a large number of GitHub users have very small and weak encryption keys, opening their account to being compromised. Some of these users even have commit access to significant projects like Django and Python crypto libraries. (ID#: 15-50296) See https://blog.benjojo.co.uk/post/auditing-github-users-keys

"Data hacked from U.S. government dates back to 1985: U.S. official", Reuters, 05 June 2015. [Online]. Hackers believed to be Chinese have breached the Office of Personnel Management computers, compromising personal data of as many as 4 million current and retired government employees, dating back to 1985. It is not known if that attack was state-sponsored. (ID#: 15-50297) See http://www.reuters.com/article/2015/06/06/us-cybersecurity-usa-idUSKBN0OL1V320150606

"Situational Awareness: Elusive Key Ingredient of Worthwhile Cyber Threat Intelligence", Security Week, 05 June 2015. [Online]. Combatting cyber security threats requires situational awareness in all levels of an organization. Four different mindsets can provide a barrier to situational awareness: "Somebody else's problem", tunnel-vision, "a case of mistaken identity", and a "nice to have" attitude towards security. (ID#: 15-50300) See http://www.securityweek.com/situational-awareness-elusive-key-ingredient-worthwhile-cyber-threat-intelligence

"After breaches, higher-ed schools adopt two-factor authentication", Computerworld, 05 June 2015. [Online]. Early last year, an unusually sophisticated phishing attack allowed criminals to steal the paychecks of thirteen faculty members at Boston University. Similar incidents at other universities have prompted many universities to adopt better two-factor authentication techniques. (ID#: 15-50311) See http://www.computerworld.com/article/2931843/security0/after-breaches-higher-ed-schools-adopt-two-factor-authentication.html

"The NSA boosted Internet monitoring to catch hackers", Computerworld, 04 June 2015. [Online]. According to leaked NSA documents, the DoJ permitted the NSA to target internet traffic for the purpose of catching hackers from crime organizations or foreign governments. Despite controversy over the lack of warrants, the NSA points out that it "plays a pivotal role in producing intelligence against foreign powers that threaten our citizens and allies while safeguarding personal privacy and civil liberties." (ID#: 15-50312) See http://www.computerworld.com/article/2931724/internet/the-nsa-boosted-internet-monitoring-to-catch-hackers.html

"Tesla Debuts Bug Bounty Program", Infosecurity Magazine, 05 June 2015. [Online]. Tesla Motors has started a bug-bounty program for teslamotors.com and other domains owned by the website. It does not offer bounties for vulnerabilities in the cars themselves; the program for finding these vulnerabilities is much less publicized. (ID#: 15-50315) See http://www.infosecurity-magazine.com/news/tesla-debuts-bug-bounty-program/

"Windows PowerShell and OpenSSH: Together at last after nearly a decade", ExtremeTech, 04 June 2015. [Online]. PowerShell, Microsoft's response to UNIX and Linux shells like BASH, will be receiving an unexpected upgrade: PowerShell will soon be able to support OpenSSH. The ability to work with the open-source tool will give admins and network specialists a much better way to communicate data securely while mitigating attacks that unencrypted protocols like telnet, rlogin, and ftp are vulnerable to. (ID#: 15-50295) See http://www.extremetech.com/computing/207364-windows-powershell-and-openssh-together-at-last-after-nearly-a-decade

"Google's Android Permissions Get Granular", TechNewsWorld, 04 June 2015. [Online]. Google announced at Google I/O that the new Android M mobile operating system will take a more "granular" approach to app permissions. By simplifying the permission set and allowing the user to decide what data their apps can access, Google hopes to increase user freedom and increase consumer security. (ID#: 15-50301) See http://www.technewsworld.com/story/82139.html

"DISA redoing content delivery", FCW, 04 June 2015. [Online]. HP was recently awarded a $469 million contract that aims to create a unified platform that the Defense Department can use for both classified and unclassified telecommunications. The new system, called the Universal Content Delivery Service, will replace the existing Global Content Delivery Service. (ID#: 15-50307) See http://fcw.com/articles/2015/06/04/disa-content-delivery.aspx

"Mac zero-day makes rootkit infection very easy", Cyber Defense Magazine, 04 June 2015. [Online]. Security Researcher Pedro Vilaça discovered a flaw that makes EFI firmware in a few specific Apple computers vulnerable to attack. By utilizing a flaw in the way that the computer protects flash memory while the computer cycles in and out of sleep mode, an attacker could remotely access a very low level on the machine and install an EFI rootkit. (ID#: 15-50317) See http://www.cyberdefensemagazine.com/mac-zero-day-makes-rootkit-infection-very-easy/

" 'MEDJACK' tactic allows cyber criminals to enter healthcare networks undetected", SC Magazine, 04 June 2015. [Online]. Security company TrapX released a report describing "MEDJACK", a tactic which hackers could use (and likely have used) to move throughout the main network of healthcare providers by utilizing outdated, unpatched medical devices such as an X-ray scanner. So-called medjacking could be partly to blame for recent breaches like that of CareFirst BlueCross BlueShield. (ID#: 15-50318) See http://www.scmagazine.com/trapx-profiles-medjack-threat/article/418811/

"States flex cyber leadership muscle", GCN, 03 June 2015. [Online]. With the increase of cyberattacks on state and local governments, Governor Chris Christie followed a recent trend in state-level defensive cyber measures by signing the New Jersey Cybersecurity and Communications Integration Cell (NJCCIC). The NJCCIC is intended to promote cooperation and sharing between industry and government in the state. (ID#: 15-50308) See http://gcn.com/articles/2015/06/03/states-cyber-leadership.aspx?admgarea=TC_SecCybersSec

"NIST drafts framework for privacy risk", GCN, 03 June 2015 [Online]. With more and more of people's private information being put in potentially compromising situations, the NIST has responded with a draft of a framework for managing privacy risks in federal information systems. The document, called Privacy Risk Management for Federal Information Systems, will utilize systematic methods for both identifying and addressing security risks. (ID#: 15-50310) See http://gcn.com/articles/2015/06/03/nist-privacy-risk.aspx?admgarea=TC_SecCybersSec

"Locker ransomware author quickly apologizes, decrypts victims' files", SC Magazine, 03 June 2015. [Online]. In an unusual turn of events, the author of a new ransomware called "Locker" apologized to victims and posted decryption keys so they could retrieve their locked files. It's not certain exactly why the hacker, who made a mere $169, had such a sudden change of heart. (ID#: 15-50319) See http://www.scmagazine.com/locker-author-apologizes-for-resulting-scams/article/418529/

"FireEye Researchers Identify Malware Threat Targeting POS Terminals", Information Security Buzz, 02 June 2015. [Online]. Security researchers have identified a new instance of POS malware that masquerades as an attachment on a fake resume email. When opened, it uses an unusually well-engineered method to try and infiltrate a specific Windows-based POS system. (ID#: 15-50299) See http://www.informationsecuritybuzz.com/fireeye-researchers-identify-malware-threat-targeting-pos-terminals/

"Google Creates One-Stop Privacy and Security Shop", TechNewsWorld, 02 June 2015. [Online]. Tech giant Google released a feature called "My Account", which allows users to manage the privacy and security settings for their Google accounts. The changes "are mainly about multifactor authentication and SSO, and linking all of your devices so Google knows it's you," though centralization might make personal data more vulnerable to attack. (ID#: 15-50302) See http://www.technewsworld.com/story/82126.html

"Feds' Photobucket Strategy Could Hobble White Hats", 02 June 2015. [Online]. To combat the spread of new malware, the DoJ and FBI are taking a new approach: by going after distributers, they hope to stem the ever-steady flow of new malware samples into the hands of those who use it. This new trend is indicated by an increase in arrests of distributers, notably the recent arrests of two men who made an app for stealing Photobucket content. (ID#: 15-50303) See http://www.technewsworld.com/story/82125.html

"USMobile launches Scrambl3 mobile, Top Secret communication-standard app", Homeland Security Newswire, 02 June 2015. [Online]. Mobile phone services developer USMobile announced a new tool called Scrambl3, which uses "tunnels" in the deep web to encrypt and protect smartphone communications. This technology can help anyone from business, government, or even just personal communications. (ID#: 15-50305) See http://www.homelandsecuritynewswire.com/dr20150602-usmobile-launches-scrambl3-mobile-top-secret-communicationstandard-app

"Facebook Can Now Encrypt Notification Emails", PC Mag., 02 June 2015. [Online]. Though Facebook has offered encryption for a few years already, it wasn't until now that user have the option of protecting their Facebook notification emails with encryption. This is accomplished with OpenPGP, which requires the user to manually manage two keys (one private, one public). (ID#: 15-50306) See http://www.pcmag.com/article2/0,2817,2485201,00.asp

"New SOHO router security audit uncovers more than 60 flaws in 22 models", Computerworld, 02 June 2015. [Online]. As part of a master's thesis, researchers at Spain's Universidad Europea de Madrid released a list of over 60 security flaws in 22 routers from various manufacturers. The vulnerabilities allow hackers to inject malicious code, use malicious websites to control a router, and cross-site request forgery (CSRF) attacks, among others. (ID#: 15-50313) See http://www.computerworld.com/article/2930554/security/new-soho-router-security-audit-uncovers-more-than-60-flaws-in-22-models.html

"Cyber-pledge: US, Japan Have Each Other's Backs", Infosecurity Magazine, 02 June 2015. [Online]. The U.S. and Japan have agreed to lend help to each other in the event of a cyber attack. This is particularly important for Japan, which has a cybersecurity strength that some would consider disproportionately small considering the nation's prominence in the tech world. (ID#: 15-50314) See http://www.infosecurity-magazine.com/news/cyberpledge-us-japan-have-each/

"US Healthworks Suffers Data Breach Via Unencrypted Laptop", Forbes, 01 June 2015. [Online]. Early this past May, a US Healthworks laptop was stolen from an employee. The laptop, though password protected, was unencrypted and had personally identifiable information on it, potentially exposing names, addresses, date of birth and Social Security numbers. (ID#: 15-50359) See http://www.forbes.com/sites/davelewis/2015/06/01/us-healthworks-suffers-data-breach-via-unencrypted-laptop/?ss=Security

"Apple 'Text of Death' Flaw Hits Twitter, Snapchat", Infosecurity Magazine, 31 May 2015. [Online]. A flaw in CoreText, Apple's text-processing system, leaves all Apple products — everything from desktops to smart watches — vulnerable to DoS attacks. When CoreText tries to handle a specific sequence of non-Latin characters, the entire device crashes. The flaw is not limited to Apple devices, as both Twitter and Snapchat were found to be vulnerable to the bug. (ID#: 15-50316) See http://www.infosecurity-magazine.com/news/apple-text-of-death-flaw-hits/

"FBI to Dig Into IRS Data Breach Debacle", TechNewsWorld, 29 May 2015. [Online]. Following warnings by the U.S. Government Accountability Office in March, personal data from at least 100,000 taxpayers was stolen when hackers were able to use the Get Transcript application to access IRS user accounts. (ID#: 15-50304) See http://www.technewsworld.com/story/82111.html

"Biz Email Fraud Could Hit $1 Billion", GovInfoSecurity, 28 May 2015. [Online]. By utilizing social engineering and "spearphishing" tactics, cyber criminals can trick staff at a business or banking institution into helping them facilitate wire fraud schemes. The cost of these "masquerading" schemes, by the estimate of bank fraud prevention officer David Pollino, could reach $1 billion in 2015. Criminals may call or email staff at an institution, using social engineering to claim to be someone they aren't, and make an urgent request for money to be wired. (ID#: 15-50298) See http://www.govinfosecurity.com/biz-email-fraud-could-hit-1-billion-a-8266

"ACLU urges gov't to establish bug bounty programs, disclosure policies", SC Magazine, 28 May 2015. [Online]. In a recent letter to the U.S. Department of Commerce's Internet Policy Task Force, the ACLU called for more incentives for researchers to help the government's cybersecurity cause, saying "…we are not aware of any U.S government agency that has established a bug bounty program intended to reward researchers who find flaws in U.S. government systems and websites." The ACLU believes that, in addition to bug bounties, publishing a disclosure policy and publicizing bug bounty programs can help government agencies stay cyber-secure. (ID#: 15-50320) See http://www.scmagazine.com/govt-needs-to-catch-up-with-tech-coss-researcher-friendly-policies-aclu-says/article/417266/

"VA security holding in face of mounting threats", GCN, 27 May 2015. [Online]. Despite facing the never-ending onslaught of cyber attacks, the Department of Veterans Affairs appears to be fairing well, with a lower-than average number of medical devices being compromised, fewer suspicious emails, and zero data lost through breaches. (ID#: 15-50309) See http://gcn.com/articles/2015/05/27/va-malware.aspx?admgarea=TC_SecCybersSec

International News

“How a Hacker Could Hijack a Plane From Their Seat”, Homeland Security News Wire, 21 May 2015. [Online]. If flying in an airplane did not scare you before, it may now. Reports have surfaced that an anonymous cybersecurity professional successfully took over an airplane’s controls, all from his seat. The safety of wireless networks on aircraft is now in question. (ID#: 15-60013)

See: http://www.homelandsecuritynewswire.com/dr20150521-how-a-hacker-could-hijack-a-plane-from-their-seat

“Iran Blames U.S. for Cyber-Attack on Oil Ministry”, Info Security, 29 May 2015. [Online]. The Iranian Oil Ministry was hit with a cyber-attack in March, and the country has since blamed the U.S. The U.S. also partnered with Israel to create the Stuxnet attack in 2009. However, Cylance, a cyber-security company, published a report in December that dubbed Iran “the new China” after uncovering its plans to launch a massive cyber-attack with the intention of stealing military information. (ID#: 15-60019)

See: http://www.infosecurity-magazine.com/news/iran-blames-us-for-cyber-attack-on/

“U.S. to Bring Japan Under Its Cyber Defense Umbrella”, NBC News, 01 June 2015. [Online]. The U.S. agreed to lend a helping hand to Japan's cybersecurity forces. The partnership will provide much needed assistance to the Japanese, as their cybersecurity development has fallen behind that of the United States. The U.S. and Japan aim to protect against attacks from common adversaries such as China as well as attacks from independent parties. (ID#: 15-60015)

See: http://www.nbcnews.com/tech/security/u-s-bring-japan-under-its-cyber-defense-umbrella-n367651

“United Nations: We Need Strong Encryption to Defend Free Speech”, Info Security, 01 June 2015. [Online]. The United Nations is calling for stronger encryption to protect people’s rights to anonymity online. By using the internet anonymously, people are guaranteed free speech. Governments have argued back by saying that strong encryption aids criminals in cyber crime. (ID#: 15-60017)

See: http://www.infosecurity-magazine.com/news/united-nations-strong-encryption/

“Japan Pension Service hack used classic attack method”, The Japan Times, 02 June 2015. [Online]. Personal data of over 1 million people was stolen from the Japan Pension Service (JPS). Unlike many of the major complex hacks in the news lately, it is believed that the attack was carried out when employees accidentally opened a well-disguised email containing a virus. The JPS stated that the attack was limited to ID numbers, names, and addresses, but all vital information remained secure. (ID#: 15-60016)

See: http://www.japantimes.co.jp/news/2015/06/02/national/social-issues/japan-pension-service-hack-used-classic-attack-method/#.VXrgsOsW15g

“U.K. Government Urges Action as Cost of Cyber Security Breaches Doubles”, Forbes, 02 June 2015. [Online]. Over the last year, certain cyber-attacks have cost U.K. businesses as much as $5 million. Despite more companies utilizing the government’s cyber security advice and resources, new threats continue to emerge, including those posed by employees. A shocking 75% of big businesses suffered a cyber-attack involving one of their own employees. (ID#: 15-60017)

See: http://www.forbes.com/sites/dinamedland/2015/06/02/uk-government-urges-action-as-cost-of-cyber-security-breaches-doubles/?ss=Security

“Google Creates One-Stop Privacy and Security Shop”, Tech News World, 02 June 2015. [Online]. Google released a new centralized interface, called “My Account,” for users to manage all of their settings, security, and privacy in one location. It is intended to let users easily find and manage information that they would have had to dig for in the past. However, there are potential downsides: Jon Rudolph commented that if an attacker gets access to a user’s My Account page, it is that much easier for information to be taken in bulk. (ID#: 15-60022)

See: http://www.technewsworld.com/story/82126.html

“Microsoft lets EU governments inspect source code for security issues”, Computer World, 03 June 2015. [Online]. Microsoft opened the doors of what they are calling a “Transparency Center” in Belgium. The goal of the new facility is to provide governments with a convenient place to come and look in depth at the code behind some of Microsoft’s products so they can review potential threats and security flaws. Microsoft hopes to strengthen their relationship with different governments through this new program. (ID#: 15-60018)

See: http://www.computerworld.com/article/2931107/government-it/microsoft-lets-eu-governments-inspect-source-code-for-security-issues.html

“Obama: U.S. Cybersecurity Problems Will Get Worse”, NBC News, 08 June 2015. [Online]. The president reiterated that the government is well aware the country’s cyber security is not up to par. This follows the data breach at the Office of Personnel Management in which roughly 4 million government workers had private information stolen. (ID#: 15-60023)

See: http://www.nbcnews.com/tech/security/obama-u-s-cybersecurity-problems-will-get-worse-n371651

“Federal Government Suffers Massive Hacking Attack”, Huffington Post, 09 June 2015. [Online]. The FBI is looking into a cyber-attack on government officials, believed to have originated in China. The hack targeted the personal information of members of the Office of Personnel Management and the Interior Department. At this time it is still unclear as to why the attack was not detected. (ID#: 15-60012)

See: http://www.huffingtonpost.com/2015/06/04/government-data-breach_n_7514620.html?utm_hp_ref=cybersecurity

"Cybersecurity Firm Says Spying Operation Targeted Hotels Hosting Iran Nuclear Talks", Star Tribune, 10 June 2015. [Online]. Russian based cybersecurity firm, Kaspersky Lab, was hacked into via three separate, and previously unknown, exploits in Microsoft Software Installer. The firm also reported that the creator of this attack used it to hack into multiple hotels where Iranian government officials were meeting with other leaders. (ID#: 15-60014)

See: http://www.startribune.com/cybersecurity-firm-says-spying-campaign-targeted-iran-talks/306790151/

“The Dinosaurs of Cybersecurity Are Planes, Power Grids and Hospitals”, Tech Crunch, 10 July 2015. [Online]. One of the most prominent risks in cybersecurity comes in the form of infrastructure and things like airplanes and hospitals. As these systems are compromised, patches are developed to remedy the problem. However, patches take a great deal of time to develop and are slow to roll out. By the time patches are complete, the damage has often already been done. (ID#: 15-60040)

See: http://techcrunch.com/2015/07/10/the-dinosaurs-of-cybersecurity-are-planes-power-grids-and-hospitals/

“Why Cyber Security Experts Are Taking Aim At Sourced Traffic”, Ad Exchanger, 22 June 2015. [Online]. Almost 90% of all sourced traffic is generated from bots. These bots are designed to operate as closely as possible to a normal user. Cutting off this source of income would have a large impact on criminals committing fraud. (ID#: 15-60024)

See: http://adexchanger.com/online-advertising/why-cyber-security-experts-are-taking-aim-at-sourced-traffic/

“Up to the US to resume cyber-security talks, says China”, Reuters, 23 June 2015. [Online]. Tensions are growing after the United States accused China of stealing personal information from the Office of Personnel Management. In the past, the two nations had worked together to improve security; as of late, however, that relationship has been strained. China now says that if the U.S. wants to continue to negotiate cyber security, it is up to the U.S. to jump-start the conversation. (ID#: 15-60020)

See: http://www.reuters.com/article/2015/06/23/us-usa-china-cybersecurity-idUSKBN0P30ZA20150623

“The Real Cost of Ignoring Cybersecurity”, Bisnow, 23 June 2015. [Online]. A saddening number of small businesses are being forced to shut down due to cyber-attacks. Bytegrid chief revenue officer Drew Fassett estimates that losing just 20MB of data to a cyber-attack can cost a company nearly $20,000. (ID#: 15-60025)

See: https://www.bisnow.com/national/news/data-center-bisnow-national/the-real-cost-of-ignoring-cybersecurity-47421

“Jeb Bush blasts the White House on cybersecurity”, Fortune, 23 June 2015. [Online]. Jeb Bush called for the president to prioritize the country’s cybersecurity. Bush was critical of President Obama’s handling of the OPM data breach. (ID#: 15-60026)

See: http://fortune.com/2015/06/23/jeb-bush-cybsersecurity/

“U.S., China agree to cybersecurity code of conduct”, SC Magazine, 26 June 2015. [Online]. The US and China hope to bring peace to their long unsteady cyber relationship with a new agreement. The agreement was one of the results of a three-day meeting between the two countries. (ID#: 15-60027)

See: http://www.scmagazine.com/us-china-summit-talks-turn-to-cybersecurity/article/423175/

“Report on Sony's Cybersecurity Blunder Shows the Pitfalls of Negligence”, Inc, 26 June 2015. [Online]. A new report claims that Sony was well aware of the risk they were taking after releasing The Interview. Instead of adding to their cybersecurity, Sony opted to alter scenes from the movie to try and make it less offensive. According to the report, Sony lacked even basic precautions that more than likely would have been enough to repel the hack. (ID#: 15-60028)

See: http://www.inc.com/spencer-bokat-lindell/sony-was-made-aware-of-risks-to-their-cybersecurity-and-did-little-to-prevent-them.html

“Masdar and MIT to Collaborate in Cybersecurity”, Gulf News, 28 June 2015. [Online]. Over the next two years, MIT and the Masdar Institute for Science and Technology will collaborate to study, and hopefully prevent, cyber-attacks on the infrastructure of the United Arab Emirates. The two groups hope that this case study will not only help them to share knowledge and skills, but also generate some interest in cybersecurity in the UAE. (ID#: 15-60021)

See: http://gulfnews.com/news/uae/crime/masdar-and-mit-to-collaborate-in-cybersecurity-1.1542037

“New Tactics for Improving Critical Infrastructure Cybersecurity Pushed by MIT Consortium”, Search Compliance, 29 June 2015. [Online]. MIT created the (IC)3 consortium as a way to push the cybersecurity of critical infrastructure. Professor Stuart Madnick said that they want to focus on management and strategy rather than the technical issues. (ID#: 15-60029)

See: http://searchcompliance.techtarget.com/feature/New-tactics-for-improving-critical-infrastructure-cybersecurity-pushed-by-MIT-consortium

“A Bird’s Eye View of the Legal Landscape for Cybersecurity”, Inside Counsel, 29 June 2015. [Online]. The field of cybersecurity is constantly undergoing rapid changes. With so many laws, regulations, and guidelines to abide by, businesses must adapt quickly. (ID#: 15-60030)

See: http://www.insidecounsel.com/2015/06/29/a-birds-eye-view-of-the-legal-landscape-for-cybers

“Teach All Computing Students About Cybersecurity, Universities Told”, Times Higher Education, 30 June 2015. [Online]. New regulations for UK universities now require cybersecurity to be included as part of a computer science degree. The regulations say students need to learn about securing systems and responding to attacks. Universities will be given two years to comply with the new guidelines. (ID#: 15-60031)

See: https://www.timeshighereducation.co.uk/news/teach-all-computing-students-about-cybersecurity-universities-told

“Doctors See Big Cybersecurity Risks, Compliance as Key for Hospitals”, Xconomy, 01 July 2015. [Online]. A recent poll shows that doctors are not confident in their hospitals' cybersecurity. Poll results show where opinions on the issue differ between the doctors and some of the system administrators. Both parties agree that the key to solving their problem is compliance. (ID#: 15-60032)

See: http://www.xconomy.com/boston/2015/07/01/doctors-see-big-cybersecurity-risks-compliance-as-key-for-hospitals/

“Is the information security industry having a midlife crisis?”, CSO Online, 01 July 2015. [Online]. The battle of information security is one that is being lost. Tsion Gonen, CSO of SafeNet, said that it is no longer reasonable to attempt to prevent breaches. Instead, he suggested that the focus needs to be on preventing attackers from finding any important data once they are inside. (ID#: 15-60033)

See: http://www.csoonline.com/article/2941097/security-awareness/is-the-information-security-industry-having-a-midlife-crisis.html

"Germany passes strict cyber-security law to protect 'critical infrastructure'", RT, 11 July 2015. [Online]. A new law in Germany requires over 2000 service providers to comply with new information security standards. The law covers any service labelled as "critical infrastructure" including transportation, utilities, finance and more. (ID#: 15-60034)

See: http://rt.com/news/273058-german-cyber-security-law/

“Google Bets Big on Cybersecurity with $100M Investment”, PYMNTS, 14 July 2015. [Online]. CrowdStrike, a cybersecurity company specializing in endpoint protection, confirmed they received $100 million from Google Capital. The company believes that as firewalls and antivirus slowly become less and less effective, their endpoint protection service puts them ahead of the competition. Google released a statement saying that they were very impressed with the rapid growth of the company. (ID#: 15-60035)

See: http://www.pymnts.com/news/2015/google-bets-big-on-cybersecurity-with-100m-investment/

“Can artificial intelligence stop hackers?”, Fortune, 14 July 2015. [Online]. Over the last 10 years, cybersecurity spending has seen an increase of almost 700%. Despite that staggering number, the results have not followed. Symantec CTO Amit Mital believes that the answer lies with artificial intelligence. He stated that humans simply take too long to identify an attack and then counter it. However, this raises the question of how much power humans are willing to turn over to machines. (ID#: 15-60036)

See: http://fortune.com/2015/07/14/artificial-intelligence-hackers/

“Chinese Bid for US Chipmaker Could Raise Cybersecurity Fears”, The Hill, 14 July 2015. [Online]. The Chinese government-operated company, Tsinghua Unigroup, is attempting to purchase Micron Technology, an American chip producer. Meanwhile, China is pushing a new law that would allow them to review all networking equipment. Some fear that this may be an attempt to gain access to the source code of many foreign products and use it to their advantage in hacking campaigns or other malicious activities. (ID#: 15-60037)

See: http://thehill.com/policy/cybersecurity/247801-chinese-bid-for-us-chip-maker-could-raise-cybersecurity-fears

“Automakers Unite to Prevent Cars from Being Hacked”, Fortune, 14 July 2015. [Online]. The Alliance of Automobile Manufacturers, a group of car companies including Ford and GM, teamed up to create an information hub that will be used primarily to share data in an effort to improve the cybersecurity of motor vehicles. The center is expected to be operational by the end of this calendar year. (ID#: 15-60038)

See: http://fortune.com/2015/07/14/automakers-share-security-data/

“Cybersecurity Intern Accused in Huge Hacking Bust”, CNN Money, 15 July 2015. [Online]. The US Department of Justice announced that they were able to take down Darkode, one of many online “black markets”. Among those charged with crimes was Morgan Culbertson, an intern of the cybersecurity firm FireEye, where he has researched malware on Android devices. He is charged with creating a malicious program known as Dendroid, capable of stealing data and assuming control over any Android device that it infects. (ID#: 15-60039)

See: http://money.cnn.com/2015/07/15/technology/hacker-fireeye-intern/

(ID#: 15-5612)

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.

International Security Related Conferences

The following pages provide highlights on Science of Security related research presented at the following International Conferences:

- International Conferences: Software Analysis, Evolution and Reengineering (SANER) Quebec, Canada

- International Conferences: CODASPY 15, San Antonio, Texas

- International Conferences: Cloud Engineering (IC2E), 2015 Arizona

- International Conferences: Cryptography and Security in Computing Systems, 2015, Amsterdam

- International Conferences: Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), 2015 Singapore

- International Conferences: Software Testing, Verification and Validation Workshops (ICSTW), Graz, Austria

- International Conferences: Workshop on Security and Privacy Analytics (IWSPA) ’15, San Antonio, Texas

(ID#: 15-5614)

International Conferences: Software Analysis, Evolution and Reengineering (SANER) Quebec, Canada

The 2015 IEEE 22nd International Conference on Software Analysis, Evolution and Reengineering (SANER) was held 2-6 March 2015 at École Polytechnique de Montréal, Québec, Canada. SANER is a research conference on the theory and practice of recovering information from existing software and systems. It explores innovative methods of extracting the many kinds of information that can be recovered from software, software engineering documents, and systems artifacts, and examines innovative ways of using this information in system renovation and program understanding. Details about the conference can be found on its web page (http://saner.soccerlab.polymtl.ca/doku.php?id=en:start). The presentations cited here relate specifically to the Science of Security.

Saied, M.A.; Benomar, O.; Abdeen, H.; Sahraoui, H., "Mining Multi-level API Usage Patterns," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 23-32, 2-6 March 2015. doi: 10.1109/SANER.2015.7081812

Abstract: Software developers need to cope with complexity of Application Programming Interfaces (APIs) of external libraries or frameworks. However, typical APIs provide several thousands of methods to their client programs, and such large APIs are difficult to learn and use. An API method is generally used within client programs along with other methods of the API of interest. Despite this, co-usage relationships between API methods are often not documented. We propose a technique for mining Multi-Level API Usage Patterns (MLUP) to exhibit the co-usage relationships between methods of the API of interest across interfering usage scenarios. We detect multi-level usage patterns as distinct groups of API methods, where each group is uniformly used across variable client programs, independently of usage contexts. We evaluated our technique through the usage of four APIs having up to 22 client programs per API. For all the studied APIs, our technique was able to detect usage patterns that are, almost all, highly consistent and highly cohesive across a considerable variability of client programs.

Keywords: application program interfaces; data mining; software libraries; MLUP; application programming interface; multilevel API usage pattern mining; Clustering algorithms; Context; Documentation; Graphical user interfaces; Java; Layout; Security; API Documentation; API Usage; Software Clustering; Usage Pattern (ID#: 15-5411)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081812&isnumber=7081802
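
As a rough illustration of the co-usage idea described in the abstract above, the following Python sketch groups API methods that are used by exactly the same set of client programs. It is a deliberately simplified stand-in and not the MLUP technique from the paper; the function name `co_usage_groups` and the sample client and method names are assumptions made up for this example.

```python
from collections import defaultdict
from typing import Dict, List, Set


def co_usage_groups(usage: Dict[str, Set[str]]) -> List[List[str]]:
    """Group API methods that appear in exactly the same client programs.

    `usage` maps a client program name to the set of API methods it calls.
    This is a toy simplification, not the MLUP algorithm from the paper.
    """
    # Invert the mapping: for each method, which clients use it?
    clients_by_method: Dict[str, Set[str]] = defaultdict(set)
    for client, methods in usage.items():
        for method in methods:
            clients_by_method[method].add(client)

    # Methods sharing an identical client set are treated as co-used.
    groups: Dict[frozenset, List[str]] = defaultdict(list)
    for method, clients in clients_by_method.items():
        groups[frozenset(clients)].append(method)

    # Keep only groups with at least two methods, in a stable order.
    return sorted(sorted(g) for g in groups.values() if len(g) > 1)


# Hypothetical usage data for three client programs.
sample_usage = {
    "app_a": {"open", "read", "close"},
    "app_b": {"open", "close", "stat"},
    "app_c": {"open", "close", "read"},
}
print(co_usage_groups(sample_usage))
```

Here `open` and `close` land in one group because every sample client that calls one also calls the other. A real pattern miner would tolerate partial overlap rather than require identical client sets.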

Ladanyi, G.; Toth, Z.; Ferenc, R.; Keresztesi, T., "A Software Quality Model for RPG," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 91-100, 2-6 March 2015. doi: 10.1109/SANER.2015.7081819

Abstract: The IBM i mainframe was designed to manage business applications for which the reliability and quality is a matter of national security. The RPG programming language is the most frequently used one on this platform. The maintainability of the source code has big influence on the development costs, probably this is the reason why it is one of the most attractive, observed and evaluated quality characteristic of all. For improving or at least preserving the maintainability level of software it is necessary to evaluate it regularly. In this study we present a quality model based on the ISO/IEC 25010 international standard for evaluating the maintainability of software systems written in RPG. As an evaluation step of the quality model we show a case study in which we explain how we integrated the quality model as a continuous quality monitoring tool into the business processes of a mid-size software company which has more than twenty years of experience in developing RPG applications.

Keywords: DP industry; IBM computers; IEC standards; ISO standards; automatic programming; report generators; software maintenance; software quality; software reliability; software standards; source code (software); IBM i mainframe; ISO/IEC 25010 international standard; RPG programming language; business applications management; business processes; continuous quality monitoring tool; development costs; mid-size software company; national security; quality characteristic; reliability; reporting program generator; software maintainability level; software quality model; source code maintainability; Algorithms; Cloning; Complexity theory; Measurement; Object oriented modeling; Software; Standards; IBM i mainframe; ISO/IEC 25010; RPG quality model; Software maintainability; case study (ID#: 15-5412)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081819&isnumber=7081802

Xiaoli Lian; Li Zhang, "Optimized Feature Selection Towards Functional And Non-Functional Requirements In Software Product Lines," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 191-200, 2-6 March 2015. doi: 10.1109/SANER.2015.7081829

Abstract: As an important research issue in software product line, feature selection is extensively studied. Besides the basic functional requirements (FRs), the non-functional requirements (NFRs) are also critical during feature selection. Some NFRs have numerical constraints, while some have not. Without clear criteria, the latter are always expected to be the best possible. However, most existing selection methods ignore the combination of constrained and unconstrained NFRs and FRs. Meanwhile, the complex constraints and dependencies among features are perpetual challenges for feature selection. To this end, this paper proposes a multi-objective optimization algorithm IVEA to optimize the selection of features with NFRs and FRs by considering the relations among these features. Particularly, we first propose a two-dimensional fitness function. One dimension is to optimize the NFRs without quantitative constraints. The other one is to assure the selected features satisfy the FRs, and conform to the relations among features. Second, we propose a violation-dominance principle, which guides the optimization under FRs and the relations among features. We conducted comprehensive experiments on two feature models with different sizes to evaluate IVEA with state-of-the-art multi-objective optimization algorithms, including IBEAHD, IBEAε+, NSGA-II and SPEA2. The results showed that the IVEA significantly outperforms the above baselines in the NFRs optimization. Meanwhile, our algorithm needs less time to generate a solution that meets the FRs and the constraints on NFRs and fully conforms to the feature model.

Keywords: feature selection; genetic algorithms; software product lines; IBEAε+; IBEAHD; IVEA; NFR optimization; NSGA-II; SPEA2; multiobjective optimization algorithm; nonfunctional requirements; numerical constraint; optimized feature selection; selection method; software product line; two-dimensional fitness function; violation-dominance principle; Evolutionary computation; Optimization; Portals; Security; Sociology; Software; Statistics; Feature Models; Feature Selection; Multi-objective Optimization; Non-functional requirements optimization; Software Product Line (ID#: 15-5413)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081829&isnumber=7081802

Zekan, B.; Shtern, M.; Tzerpos, V., "Protecting Web Applications Via Unicode Extension," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 419-428, 2-6 March 2015. doi: 10.1109/SANER.2015.7081852

Abstract: Protecting web applications against security attacks, such as command injection, is an issue that has been attracting increasing attention as such attacks are becoming more prevalent. Taint tracking is an approach that achieves protection while offering significant maintenance benefits when implemented at the language library level. This allows the transparent re-engineering of legacy web applications without the need to modify their source code. Such an approach can be implemented at either the string or the character level.

Keywords: program debugging; security of data; software maintenance; command injection; language library level; legacy Web application; maintenance benefit; security attack; taint tracking; unicode extension; Databases; Java; Operating systems; Prototypes; Security; Servers (ID#: 15-5414)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081852&isnumber=7081802
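
The character-level taint tracking mentioned in the Zekan et al. abstract can be illustrated with a minimal Python sketch. This is not the authors' Unicode-based mechanism; the `TaintedStr` type, the `is_safe_command` check, and the list of forbidden shell metacharacters are all hypothetical names and choices made for illustration. The sketch only shows the general idea: each character carries a flag saying whether it came from untrusted input, and the flags survive concatenation.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TaintedStr:
    """A string paired with one taint flag per character (hypothetical type)."""
    text: str
    taint: Tuple[bool, ...]

    def __post_init__(self):
        if len(self.text) != len(self.taint):
            raise ValueError("need exactly one taint flag per character")

    @classmethod
    def trusted(cls, text):
        # Every character is marked as coming from the application itself.
        return cls(text, (False,) * len(text))

    @classmethod
    def untrusted(cls, text):
        # Every character is marked as coming from user input.
        return cls(text, (True,) * len(text))

    def __add__(self, other):
        # Concatenation keeps the per-character flags aligned with the text.
        return TaintedStr(self.text + other.text, self.taint + other.taint)

    def tainted_spans(self):
        """Return the substrings made up of consecutive tainted characters."""
        spans, current = [], []
        for ch, flag in zip(self.text, self.taint):
            if flag:
                current.append(ch)
            elif current:
                spans.append("".join(current))
                current = []
        if current:
            spans.append("".join(current))
        return spans


def is_safe_command(cmd, forbidden=";|&`$"):
    """Reject a command if any tainted character is a shell metacharacter.

    The forbidden set is an assumption chosen for this example only.
    """
    return not any(flag and ch in forbidden
                   for ch, flag in zip(cmd.text, cmd.taint))


cmd = TaintedStr.trusted("ls ") + TaintedStr.untrusted("docs; rm -rf /")
print(cmd.tainted_spans())
print(is_safe_command(cmd))
```

Tracking taint per character rather than per string lets a check like this accept metacharacters written by the application while rejecting the same characters when they originate from user input.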

Cadariu, M.; Bouwers, E.; Visser, J.; van Deursen, A., "Tracking Known Security Vulnerabilities In Proprietary Software Systems," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 516-519, 2-6 March 2015. doi: 10.1109/SANER.2015.7081868

Abstract: Known security vulnerabilities can be introduced in software systems as a result of being dependent upon third-party components. These documented software weaknesses are “hiding in plain sight” and represent low hanging fruit for attackers. In this paper we present the Vulnerability Alert Service (VAS), a tool-based process to track known vulnerabilities in software systems throughout their life cycle. We studied its usefulness in the context of external software product quality monitoring provided by the Software Improvement Group, a software advisory company based in Amsterdam, the Netherlands. Besides empirically assessing the usefulness of the VAS, we have also leveraged it to gain insight and report on the prevalence of third-party components with known security vulnerabilities in proprietary applications.

Keywords: outsourcing; safety-critical software; software houses; software quality; Amsterdam; Netherlands; VAS usefulness assessment; documented software weaknesses; empirical analysis; external software product quality monitoring; known security vulnerability tracking; proprietary applications; proprietary software systems; software advisory company; software improvement group; software life cycle; software systems; third-party components; tool-based process; vulnerability alert service; Companies; Context; Java; Monitoring; Security; Software systems (ID#: 15-5415)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081868&isnumber=7081802
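
As a rough illustration of what tracking known vulnerabilities in third-party components involves, the sketch below matches a system's declared dependencies against a list of advisories. It is not the Vulnerability Alert Service described in the Cadariu et al. abstract; the component names, versions, advisory IDs, and the `find_known_vulnerabilities` function are all invented for this example.

```python
# Hypothetical data: component names, versions and advisory IDs are made up.
DEPENDENCIES = {"examplelib": "1.2.0", "otherlib": "3.4.1"}

ADVISORIES = [
    {"id": "EXAMPLE-0001", "component": "examplelib",
     "affected": {"1.1.0", "1.2.0"}},
    {"id": "EXAMPLE-0002", "component": "otherlib",
     "affected": {"2.0.0"}},
]


def find_known_vulnerabilities(dependencies, advisories):
    """Return (component, version, advisory id) for every dependency whose
    exact version appears in an advisory's set of affected versions."""
    alerts = []
    for advisory in advisories:
        version = dependencies.get(advisory["component"])
        if version is not None and version in advisory["affected"]:
            alerts.append((advisory["component"], version, advisory["id"]))
    return sorted(alerts)


alerts = find_known_vulnerabilities(DEPENDENCIES, ADVISORIES)
for component, version, advisory_id in alerts:
    print(f"{component} {version} is affected by {advisory_id}")
```

A real process would need version-range matching and a maintained advisory feed; exact-version lookup is used here only to keep the example short.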

Kula, R.G.; German, D.M.; Ishio, T.; Inoue, K., "Trusting A Library: A Study Of The Latency To Adopt The Latest Maven Release," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 520-524, 2-6 March 2015. doi: 10.1109/SANER.2015.7081869

Abstract: With the popularity of open source library (re)use in both industrial and open source settings, 'trust' plays a vital role in third-party library adoption. Trust involves the assumption of both functional and non-functional correctness. Even with the aid of dependency management build tools such as Maven and Gradle, research has still found a latency to trust the latest release of a library. In this paper, we investigate the trust of OSS libraries. Our study of 6,374 systems in Maven Super Repository suggests that 82% of systems are more trusting of adopting the latest library release to existing systems. We uncover the impact of Maven on latent and trusted library adoptions.

Keywords: public domain software; security of data; software libraries; trusted computing; Maven superrepository; OSS library; open source software library; trusted library adoption; Classification algorithms; Data mining; Java; Libraries; Market research; Software systems (ID#: 15-5416)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081869&isnumber=7081802

Laverdiere, M.-A.; Berger, B.J.; Merloz, E., "Taint Analysis of Manual Service Compositions Using Cross-Application Call Graphs," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 585-589, 2-6 March 2015. doi: 10.1109/SANER.2015.7081882

Abstract: We propose an extension over the traditional call graph to incorporate edges representing control flow between web services, named the Cross-Application Call Graph (CACG). We introduce a construction algorithm for applications built on the Jax-WS standard and validate its effectiveness on sample applications from Apache CXF and JBossWS. Then, we demonstrate its applicability for taint analysis over a sample application of our making. Our CACG construction algorithm accurately identifies service call targets 81.07% of the time on average. Our taint analysis obtains an F-Measure of 95.60% over a benchmark. The use of a CACG, compared to a naive approach, improves the F-Measure of a taint analysis from 66.67% to 100.00% for our sample application.

Keywords: Web services; data flow analysis; flow graphs; Apache CXF; CACG construction algorithm; F-measure; JBossWS; Jax-WS standard; Web services; control flow; cross-application call graph; manual service compositions; service call targets; taint analysis; Algorithm design and analysis; Androids; Benchmark testing; Java; Manuals; Security; Web services (ID#: 15-5417)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081882&isnumber=7081802
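
For readers unfamiliar with the F-Measure figures quoted in the Laverdiere et al. abstract, the short sketch below computes the standard balanced F-measure, the harmonic mean of precision and recall. The abstract reports only the F-Measure values; the precision and recall inputs used here are assumptions, chosen as one of many combinations that would produce 66.67% and 100.00%.

```python
def f_measure(precision, recall):
    """Balanced F-measure (F1): harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Assumed inputs, not taken from the paper: perfect precision with half of
# the true flows found gives 2 * 1.0 * 0.5 / 1.5 = 0.6667.
naive = f_measure(precision=1.0, recall=0.5)

# Perfect precision and perfect recall give 1.0.
with_cacg = f_measure(precision=1.0, recall=1.0)

print(f"{naive:.2%}")
print(f"{with_cacg:.2%}")
```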

Qingtao Jiang; Xin Peng; Hai Wang; Zhenchang Xing; Wenyun Zhao, "Summarizing Evolutionary Trajectory by Grouping and Aggregating Relevant Code Changes," Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference on, pp. 361-370, 2-6 March 2015. doi: 10.1109/SANER.2015.7081846

Abstract: The lifecycle of a large-scale software system can undergo many releases. Each release often involves hundreds or thousands of revisions committed by many developers over time. Many code changes are made in a systematic and collaborative way. However, such systematic and collaborative code changes are often undocumented and hidden in the evolution history of a software system. It is desirable to recover commonalities and associations among dispersed code changes in the evolutionary trajectory of a software system. In this paper, we present SETGA (Summarizing Evolutionary Trajectory by Grouping and Aggregation), an approach to summarizing historical commit records as trajectory patterns by grouping and aggregating relevant code changes committed over time. SETGA extracts change operations from a series of commit records from version control systems. It then groups extracted change operations by their common properties from different dimensions such as change operation types, developers and change locations. After that, SETGA aggregates relevant change operation groups by mining various associations among them. The proposed approach has been implemented and applied to three open-source systems. The results show that SETGA can identify various types of trajectory patterns that are useful for software evolution management and quality assurance.

Keywords: public domain software; software maintenance; software quality; SETGA; evolution history; historical commit records; large-scale software system; open-source systems; relevant code changes; software evolution management; software quality assurance; summarizing evolutionary trajectory by grouping and aggregation; trajectory patterns; Data mining; History; Software systems; Systematics; Trajectory; Code Change; Evolution; Mining; Pattern; Version Control System (ID#: 15-5418)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7081846&isnumber=7081802

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via Email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.

International Conferences: CODASPY 15, San Antonio, Texas


The Fifth ACM Conference on Data and Application Security and Privacy (CODASPY 15) was held in San Antonio, Texas on March 2-5, 2015. The conference provides a dedicated venue for high-quality research in the data and applications arena and seeks to foster a community focused on cyber security. The CODASPY web page is available at: http://codaspy.org/

Jonathan Dautrich, Chinya Ravishankar; “Tunably-Oblivious Memory: Generalizing ORAM to Enable Privacy-Efficiency Tradeoffs;” CODASPY '15 Proceedings of the 5th ACM Conference on Data and Application Security and Privacy, March 2015, Pages 313-324. Doi: 10.1145/2699026.2699097

Abstract: We consider the challenge of providing privacy-preserving access to data outsourced to an untrusted cloud provider. Even if data blocks are encrypted, access patterns may leak valuable information. Oblivious RAM (ORAM) protocols guarantee full access pattern privacy, but even the most efficient ORAMs to date require roughly L log2 N block transfers to satisfy an L-block query, for block store capacity N. We propose a generalized form of ORAM called Tunably-Oblivious Memory (lambda-TOM) that allows a query's public access pattern to assume any of lambda possible lengths. Increasing lambda yields improved efficiency at the cost of weaker privacy guarantees. 1-TOM protocols are as secure as ORAM. We also propose a novel, special-purpose TOM protocol called Staggered-Bin TOM (SBT), which efficiently handles large queries that are not cache-friendly. We also propose a read-only SBT variant called Multi-SBT that can satisfy such queries with only O(L + log N) block transfers in the best case, and only O(L log N) transfers in the worst case, while leaking only O(log log log N) bits of information per query. Our experiments show that for N = 2^24 blocks, Multi-SBT achieves practical bandwidth costs as low as 6X those of an unprotected protocol for large queries, while leaking at most 3 bits of information per query.

Keywords: data privacy, oblivious ram, privacy trade off (ID#: 15-5533)

URL: http://doi.acm.org/10.1145/2699026.2699097

Matthias Neugschwandtner, Paolo Milani Comparetti, Istvan Haller, Herbert Bos; “The BORG: Nanoprobing Binaries for Buffer Overreads;” CODASPY '15 Proceedings of the 5th ACM Conference on Data and Application Security and Privacy, March 2015, Pages 87-97. Doi: 10.1145/2699026.2699098

Abstract: Automated program testing tools typically try to explore, and cover, as much of a tested program as possible, while attempting to trigger and detect bugs. An alternative and complementary approach can be to first select a specific part of a program that may be subject to a specific class of bug, and then narrowly focus exploration towards program paths that could trigger such a bug. In this work, we introduce the BORG (Buffer Over-Read Guard), a testing tool that uses static and dynamic program analysis, taint propagation and symbolic execution to detect buffer overread bugs in real-world programs. BORG works by first selecting buffer accesses that could lead to an overread and then guiding symbolic execution towards those accesses along program paths that could actually lead to an overread. BORG operates on binaries and does not require source code. To demonstrate BORG's effectiveness, we use it to detect overreads in six complex server applications and libraries, including lighttpd, FFmpeg and ClamAV.

Keywords: buffer overread, dynamic symbolic execution, out-of-bounds access, symbolic execution guidance, targeted testing (ID#: 15-5534)

URL: http://doi.acm.org/10.1145/2699026.2699098

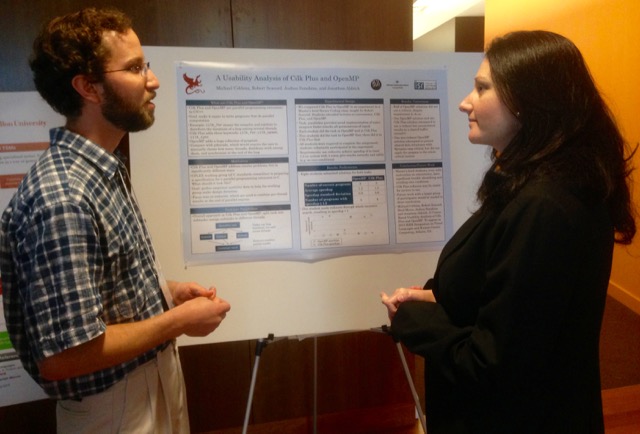

Sebastian Banescu, Alexander Pretschner, Dominic Battre, Stefano Cazzulani, Robert Shield, Greg Thompson; “Software-Based Protection against ‘Changeware’;” CODASPY '15 Proceedings of the 5th ACM Conference on Data and Application Security and Privacy, March 2015, Pages 231-242. Doi: 10.1145/2699026.2699099