Compendium of Science of Security Articles of Interest

The following material details some recent activity associated with the Science of Security, including relevant workshops and articles. Some of the items have been published elsewhere and some may be published in the future. We invite you to read the articles below and direct your comments and questions to the Science of Security Virtual Organization (SoS-VO) at http://cps-vo.org/group/SoS using the Contact tab on the left.

(ID#: 15-5933)

Adoption of Cybersecurity Technology Workshop

The Special Cyber Operations Research and Engineering (SCORE) Subcommittee sponsored the 2015 Adoption of Cybersecurity Technology (ACT) workshop at Sandia National Laboratories in Albuquerque, New Mexico, from 3 to 5 March 2015. The research and development community has produced many effective cybersecurity technology solutions that, for a variety of reasons, are not implemented. Many cybersecurity professionals believe that 80% of the current problems in cyberspace have known solutions that have not been implemented. To illuminate systemic barriers to the adoption of security measures, the workshop focused specifically on countering the phishing threat and its aftermath. The vision for the workshop was to change business practices for the adoption of cybersecurity technologies; to expose developers, decision makers, and implementers to one another’s perspectives; to address the technology, process, and usability roadblocks to adoption; and to build a Community of Interest (COI) that engages regularly.

This was the first in what is expected to be an annual series of workshops addressing barriers to the adoption of cybersecurity technologies. The workshop itself, however, was only the kickoff for a sustained effort to implement cybersecurity technology solutions throughout the US Government. Workshop participants were primarily government personnel, with some individuals from Federally Funded Research and Development Centers (FFRDCs), academia, and industry.

Figure 1: Overview of organizations participating in the ACT 2015 Workshop

There were four groups of attendees, representing researchers and developers, decision makers, implementers, and human behavior experts. Participants explored and developed action plans for four use cases, each addressing one of the four fundamental cybersecurity goals shown below: Device Integrity; Authentication and Credential Protection/Defense of Accounts; Damage Containment; and Secure and Available Transport. They also considered how these goals apply across the attack lifecycle. This construct gave the workshop a framework that allowed participants to apply critical solutions specifically against the spear phishing threat.

Figure 2: Key areas necessary for success

Figure 3: Mitigations Framework

Workshop participants identified systemic issues preventing the adoption of such solutions and suggested how to change business practices so that these cybersecurity technologies could be adopted. The agenda included briefings on specific threat scenarios, briefings on each cohort’s concerns to promote understanding among groups, facilitated sessions that addressed the four use cases, and the development of action plans to be implemented via 90-day Spins.

Day One established the framework for the remainder of the workshop. Two introductory briefings, one classified and one unclassified, focused on the phishing threat. The unclassified briefing, “Phishing from the Front Lines,” was presented by Matt Hastings, a Senior Consultant with Mandiant, a division of FireEye, Inc. Following a description of workshop activities, members of each of the four cohorts met to identify, and then share, the specific barriers to the adoption of cybersecurity technologies that they have experienced as developers, decision makers, implementers, and human behavior specialists. After a working lunch that included a briefing on Secure Coding from Robert Seacord, Secure Coding Technical Manager at the Computer Emergency Response Team Division at the Software Engineering Institute, Carnegie Mellon University (CERT/SEI/CMU), participants were briefed on NSA’s Integrated Mitigations Framework and on the Use Case Construct and Descriptions that would form the basis of the remainder of the workshop.

On Day Two, after participants received use case assignments based on their stated interests and roles, Dr. Douglas Maughan, Director of the Department of Homeland Security’s Science and Technology Directorate’s Cyber Security Division, presented “Bridging the Valley of Death,” a briefing designed to help workshop participants identify how to overcome barriers to cybersecurity technology adoption. The first breakout session, Discovery, allowed participants to consider which technologies and/or best practices could be implemented over the next year. A lunchtime briefing on Technology Readiness Levels and the presentation of Success Stories by workshop participants provided a good segue into the second breakout session, Formulation of Action Plans.

Dr. Greg Shannon, Chief Scientist of SEI/CMU, presented “Accelerating Innovation in Security and Privacy Technologies—IEEE Cybersecurity Initiative” to start Day Three. The third use case breakout session allowed use case participants to identify the next steps for implementation, after which all of the use cases presented their plans.

- The Device Integrity group chose to implement two tools to address the threat of malicious, unauthorized access, selecting two government networks for deployment.

- The Damage Containment group chose to assess user-system behavior by implementing a capability that enables the modeling and classification of user and system behavior within the network, and selected two academic institution networks for implementation.

- The Defense of Accounts group chose to look at strategies for securing emails and will be deploying the selected technologies on two government networks.

- The Secure and Available Transport group also focused on emails, selecting a technology for deployment on one government network that is already at Technology Readiness Level 7/8 and is operational in a limited environment.

Use case participants identified plans for each of the four 90-day Spins, which they will brief to the ACT Organizing Committee and to threat analysts who will assess progress against the goals. The Spin reports will address successes, challenges, and the specific steps taken to overcome roadblocks to the adoption of cybersecurity technologies. Every Spin meeting will include updates from those responsible for implementing the chosen technologies, followed by use case team breakout sessions. The Organizing Committee is now working out the logistics for the four Spins. Spin 1 will be a half-day event held in the DC area during the week of 15 June; Spin 2 will be a day-long meeting held within a few hours of the DC area sometime in mid-September; and Spin 3, whose location is still to be determined, will be a half-day event held in early December. The final Spin will coincide with the second ACT workshop and will be held at Sandia National Laboratories in mid-March 2016.

The participants were fully engaged in the two-and-a-half-day workshop and demonstrated commitment both to the concept of the workshop and to the follow-up activities. In their feedback, 33 of the 35 respondents found value in attending, and 32 of the 35 would be interested in participating in the next workshop.

The goal over the next year is to strengthen government networks against spear phishing attacks by applying the selected technologies. By identifying and then removing barriers to the adoption of these specific technologies, the use cases will reveal ways to reduce obstacles to implementing known solutions to known problems, thus helping more research cross the valley of death. Although the activity over the next year is tactical in nature, it provides an underlying strategy for achieving broader objectives, as well as a foundation for moving from addressing threats on a transactional basis to collaborative cybersecurity engagements. Ultimately, ACT will strengthen our nation’s ability to address cybersecurity threats and improve our ability to make more of a difference more of the time.

(ID#: 15-5934)

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via Email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.

Building Secure and Resilient Software from the Start

NC State Lablet addresses the soft underbelly of software: resilience and measurement.

The hard problems of resilience and predictive metrics are being addressed by a team of knowledgeable and experienced researchers at the NC State University Science of Security Lablet. The two Principal Investigators (PIs), Laurie Williams and Mladen Vouk, have worked extensively in industry as well as academia. Their experience brings a practical dimension to solving software-related hard problems, and their management skills have produced a well-organized, well-executed research agenda.

Their general approach to software security is to build secure software from the start: to build security in rather than bolt it on. They seek to prevent security vulnerabilities in software systems. A security vulnerability is an instance of a fault in a program that violates an implicit or explicit security policy.

Using empirical analysis, they have determined that security vulnerabilities can be introduced into a system because the code is too complex, is changed too often, or is not changed appropriately. These potential causes, both technical and behavioral, are captured in software metrics. The researchers then examine whether statistical correlations exist between those metrics and security vulnerabilities in a given system. One NCSU study found that source code files changed by nine or more developers were 16 times more likely to have at least one post-release security vulnerability; from a security perspective, many hands make not light work but poor work. From such analyses, predictive models and useful statistical associations can be developed and disseminated to guide the development of secure software.
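One simple way to express a finding like the nine-developer result is as a relative risk: the vulnerability rate among files changed by nine or more developers divided by the rate among files changed by fewer. The sketch below assumes a hypothetical per-file record of (developer count, vulnerability reported); the data are invented, and the NCSU study may have used a different statistic or model.

```python
from typing import Iterable, Tuple


def relative_risk(files: Iterable[Tuple[int, bool]], threshold: int = 9) -> float:
    """Relative risk of a post-release vulnerability for files changed by
    `threshold` or more developers versus files changed by fewer.

    Each record is (distinct developers who changed the file,
    whether a post-release security vulnerability was later reported).
    """
    many_total = many_vuln = few_total = few_vuln = 0
    for developers, vulnerable in files:
        if developers >= threshold:
            many_total += 1
            many_vuln += int(vulnerable)
        else:
            few_total += 1
            few_vuln += int(vulnerable)
    if many_total == 0 or few_total == 0 or few_vuln == 0:
        raise ValueError("both groups need files, and the smaller-team group needs a vulnerability")
    # P(vulnerable | many developers) / P(vulnerable | few developers)
    return (many_vuln / many_total) / (few_vuln / few_total)


# Invented records for illustration only (not NCSU data):
# 4 files with >= 9 developers, 2 vulnerable; 10 files with fewer, 1 vulnerable.
sample = [(12, True), (10, True), (9, False), (15, False), (2, True)] + [(3, False)] * 9
print(relative_risk(sample))  # -> 5.0
```

A relative risk only describes an association in the data at hand; turning it into a predictive model, as the Lablet aims to do, requires validating it on releases the model has not seen.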

Resilience of software to attacks is an open problem. According to the NCSU researchers, two questions arise. First, if highly attack-resilient components and appropriate attack sensors are developed, will it be possible to compose a resilient system from these component parts? Second, if so, how does that system scale and age? Answering these questions requires rigorous analysis and testing. Resilience, they say, depends on the science as well as the engineering behind the approach used. For example, one simple and effective defensive strategy is to force attackers to operate within an application’s "normal" operational profile by building in a dynamic application firewall that does not respond to "odd," out-of-norm inputs. While not foolproof, an application constrained to its normal operational profile appears to be less vulnerable, and such a mechanism may be quite resistant to zero-day attacks.
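A minimal sketch of the "normal operational profile" idea, assuming a web application whose normal traffic can be summarized by the (method, path) pairs it serves and the longest value seen for each query parameter. The class and its features are hypothetical and far cruder than a real dynamic application firewall; the point is only that requests outside the learned profile are refused rather than processed, so an input can be blocked without anyone having seen it exploited before.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set, Tuple

Request = Tuple[str, str, Dict[str, str]]  # (HTTP method, path, query parameters)


@dataclass
class OperationalProfile:
    """Hypothetical allowlist of 'normal' behavior learned from known-good traffic."""

    routes: Set[Tuple[str, str]] = field(default_factory=set)
    max_len: Dict[str, int] = field(default_factory=dict)

    def learn(self, normal_traffic: Iterable[Request]) -> None:
        # Record every (method, path) seen and the longest value seen per parameter.
        for method, path, params in normal_traffic:
            self.routes.add((method, path))
            for name, value in params.items():
                self.max_len[name] = max(self.max_len.get(name, 0), len(value))

    def admits(self, method: str, path: str, params: Dict[str, str]) -> bool:
        # Anything outside the learned profile is dropped without being processed.
        if (method, path) not in self.routes:
            return False
        return all(name in self.max_len and len(value) <= self.max_len[name]
                   for name, value in params.items())


profile = OperationalProfile()
profile.learn([("GET", "/search", {"q": "grid security"}), ("GET", "/home", {})])
print(profile.admits("GET", "/search", {"q": "sandbox"}))   # True
print(profile.admits("GET", "/search", {"q": "A" * 500}))   # False: longer than any normal query
print(profile.admits("POST", "/admin", {}))                 # False: route never seen
```

The trade-off is false positives: legitimate but rare requests are also refused until they are added to the profile.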

The research has generated tangible results, including three recent papers: “On Coverage-Based Attack Profiles,” by Anthony Rivers, Mladen Vouk, and Laurie Williams; “A Survey of Common Security Vulnerabilities and Corresponding Countermeasures for SaaS,” by Donghoon Kim and Vouk; and “Diversity-based Detection of Security Anomalies,” by Roopak Venkatakrishnan and Vouk. (The last was presented at the Symposium and Bootcamp on the Science of Security, HotSoS 2014.)

Bibliographical citations and more detailed descriptions of the research follow.

Rivers, Anthony T.; Vouk, Mladen A.; Williams, Laurie A.; "On Coverage-Based Attack Profiles"; 2014 IEEE Eighth International Conference on Software Security and Reliability-Companion (SERE-C), pp. 5-6, 30 June-2 July 2014. doi:10.1109/SERE-C.2014.15

Abstract: Automated cyber attacks tend to be schedule and resource limited. The primary progress metric is often "coverage" of pre-determined "known" vulnerabilities that may not have been patched, along with possible zero-day exploits (if such exist). We present and discuss a hypergeometric process model that describes such attack patterns. We used web request signatures from the logs of a production web server to assess the applicability of the model.

Keywords: Internet; security of data; Web request signatures; attack patterns; coverage-based attack profiles; cyber attacks; hypergeometric process model; production Web server; zero-day exploits; Computational modeling; Equations; IP networks; Mathematical model; Software; Software reliability; Testing; attack; coverage; models; profile; security

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=6901633&isnumber=6901618
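The abstract does not give the model’s equations, so the following is only a generic illustration of why a hypergeometric distribution fits coverage-style attacks: an attacker with a fixed budget tries n distinct items from a list of N known vulnerabilities, never repeating one, and K of those N are still unpatched on the target. The names N, K, and n and the numbers used are assumptions for illustration, not the authors’ parameters, process model, or data.

```python
from math import comb


def p_exactly(N: int, K: int, n: int, k: int) -> float:
    """Hypergeometric P(exactly k of n distinct probes hit one of K unpatched items out of N)."""
    if k < 0 or k > min(n, K) or n - k > N - K:
        return 0.0
    return comb(K, k) * comb(N - K, n - k) / comb(N, n)


def p_at_least_one(N: int, K: int, n: int) -> float:
    """Chance that a budget of n probes finds at least one unpatched item."""
    return 1.0 - p_exactly(N, K, n, 0)


def expected_hits(N: int, K: int, n: int) -> float:
    """Mean of the hypergeometric distribution: n * K / N."""
    return n * K / N


# Illustrative numbers only: 10 known vulnerabilities, 2 unpatched on the target,
# and a schedule that allows the attacker to try 3 of them.
print(p_at_least_one(10, 2, 3))  # about 0.533 (= 64/120)
print(expected_hits(10, 2, 3))   # 0.6
```

Sampling without replacement is what distinguishes this from a binomial model: each probe that misses makes the remaining unpatched items slightly more likely to be hit by the next one.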

Kim, Donghoon; Vouk, Mladen A.; "A Survey of Common Security Vulnerabilities and Corresponding Countermeasures for SaaS"; Globecom Workshops 2014, pp. 59-63, 8-12 December 2014. doi:10.1109/GLOCOMW.2014.7063386

Abstract: Software as a Service (SaaS) is the most prevalent service delivery mode for cloud systems. This paper surveys common security vulnerabilities and corresponding countermeasures for SaaS. It is primarily focused on the work published in the last five years. We observe current SaaS security trends and a lack of sufficiently broad and robust countermeasures in some SaaS security areas, such as Identity and Access Management, due to the growth of SaaS applications.

Keywords: Authentication; Cloud computing; Google; Software as a service; XML

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7063386&isnumber=7063320

Venkatakrishnan, Roopak; Vouk, Mladen A.; "Diversity-based Detection of Security Anomalies"; HotSoS '14: Proceedings of the 2014 Symposium and Bootcamp on the Science of Security, Article No. 29, April 2014. doi:10.1145/2600176.2600205

Abstract: Detecting and preventing attacks before they compromise a system can be done using acceptance testing, redundancy-based mechanisms, and external consistency checking such as external monitoring and watchdog processes. Diversity-based adjudication is a step towards an oracle that uses knowable behavior of a healthy system. That approach, under the best circumstances, is able to detect even zero-day attacks. In this approach we use functionally equivalent but in some way diverse components and we compare their output vectors and reactions for a given input vector. This paper discusses the practical relevance of this approach in the context of recent web-service attacks.

Keywords: attack detection, diversity, redundancy in security, web services

URL: http://doi.acm.org/10.1145/2600176.2600205
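The diversity-based adjudication described in the Venkatakrishnan and Vouk abstract can be sketched as follows, assuming each variant is a function from a request string to a hashable output. The variants, the majority vote, and the rule that any disagreement raises an alarm are illustrative choices, not the authors’ implementation; a real deployment would compare richer output vectors and reactions, such as timing and side effects.

```python
from collections import Counter
from typing import Callable, Hashable, List, Sequence, Tuple


def adjudicate(variants: Sequence[Callable[[str], Hashable]],
               request: str) -> Tuple[Hashable, int, bool]:
    """Feed one input to functionally equivalent variants and compare their reactions.

    Returns (majority output, number of variants agreeing with it, anomaly flag).
    """
    reactions: List[Hashable] = []
    for variant in variants:
        try:
            reactions.append(variant(request))
        except Exception as exc:  # a crash is recorded as a (divergent) reaction
            reactions.append(("error", type(exc).__name__))
    counts = Counter(reactions)
    majority, votes = counts.most_common(1)[0]
    return majority, votes, len(counts) > 1  # any disagreement is treated as suspicious


# Three hypothetical, diverse implementations of the same specification.
def variant_a(s: str) -> str:
    return s.upper()


def variant_b(s: str) -> str:
    return "".join(ch.upper() for ch in s)


def variant_c(s: str) -> str:
    # Simulated flaw: misbehaves on long input, standing in for an exploited component.
    return "OVERFLOW" if len(s) > 64 else s.upper()


print(adjudicate([variant_a, variant_b, variant_c], "hello"))    # ('HELLO', 3, False)
print(adjudicate([variant_a, variant_b, variant_c], "x" * 100))  # majority of 2 votes, flagged
```

Because the oracle is agreement among diverse components rather than a signature of a known attack, an input that trips a flaw in only one variant is flagged even if the attack is new.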

Dr. Laurie Williams is the Acting Department Head of Computer Science, a Professor in the Department, and co-director of the NCSU Science of Security Lablet. Her research focuses on software security in healthcare IT, agile software development, software reliability, and software testing and analysis. She has published extensively on these topics, as well as on electronic commerce, information and knowledge management, cyber security, software engineering, and programming languages.

Email: williams@csc.ncsu.edu

Web page: http://collaboration.csc.ncsu.edu/laurie/

(ID#: 15-5940)

CMU Fields Cloud-Based Sandbox

CMU fields cloud-based sandbox as part of a broader study on modeling and measuring sandbox encapsulation.

“Sandboxes” are security layers that encapsulate components of software systems and impose security policies regulating the interactions between the isolated components and the rest of the system. Sandboxing is a common technique for securing components of systems that cannot be fully verified or trusted. It is used both to protect systems from potentially dangerous components and to protect critical components from other parts of the overall system. Sandboxes have a role in metrics research because they make it possible to work with a full sampling of components and applications, even those known to be malicious. This ability to work with dangerous material can improve the validity of the resulting metrics. Of course, if the sandbox fails or is bypassed, the production environment may be compromised.

In their paper “In-Nimbo Sandboxing,” researchers Michael Maass, William L. Scherlis, and Jonathan Aldrich from the Institute for Software Research in the School of Computer Science at Carnegie Mellon University propose a method to mitigate the risk of sandbox failure and raise the bar for potential attackers. Using a technique they liken to software-as-a-service, they propose a cloud-based sandbox that is tailored to the specific application. Tailoring provides flexibility in attack surface design: they encapsulate components behind smaller, more defensible attack surfaces than other techniques provide. Remote encapsulation reduces both factors of the risk product: the likelihood that an attack succeeds, and the magnitude of the damage that results if an attack on the sandboxed component does succeed. They call their approach in-nimbo sandboxing, from the Latin for “in the cloud.”
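Written out, with notation chosen here for illustration rather than taken from the paper, the risk product is the likelihood P that an attack succeeds times the consequence C if it does. Because both factors are nonnegative, lowering either one without raising the other cannot increase the product, and lowering both, as remote encapsulation aims to do, compounds the reduction:

```latex
R = P \times C, \qquad
0 \le P' \le P,\quad 0 \le C' \le C
\;\Longrightarrow\;
R' = P' \times C' \le P \times C = R
```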

Maass, Scherlis, and Aldrich assessed their approach against three principal criteria: performance, usability, and security. They conducted a field-trial deployment with a major aerospace firm, comparing an encapsulated component deployed in an enterprise-managed cloud with the original, unencapsulated version of the component deployed in the relatively higher-value user environment. In evaluating performance, they focus on latency and set resource consumption aside. For the applications deployed, the technique only slightly increases the user-perceived latency of interactions. For usability, the sandbox was designed to present an experience identical to that of the local version, and users judged that this goal was met. Another dimension of usability is how difficult it is for developers to create and deploy in-nimbo sandboxes for other applications. The field-trial system is built primarily from widely adopted, established components, and the authors indicate that the approach may be feasible for a variety of systems.

Cloud-based sandboxes allow defenders to customize the computing environment in which an encapsulated computation takes place, making that environment more difficult to attack. And because cloud environments are by nature “approximately ephemeral,” it also becomes more difficult for attackers to achieve both effects and persistence. The authors use “ephemeral” to describe an ideal computing environment with a short, isolated, and non-persistent existence. Even if an attacker does achieve persistence, a compromised cloud environment offers much less benefit than, for example, a compromised end-user desktop environment, which is of relatively higher value.

According to the authors, “most mainstream sandboxing techniques are in-situ, meaning they impose security policies using only Trusted Computing Bases (TCBs) within the system being defended. Existing in-situ sandboxing approaches decrease the risk that a vulnerability will be successfully exploited, because they force the attacker to chain multiple vulnerabilities together or bypass the sandbox. Unfortunately, in practice these techniques still leave a significant attack surface, leading to a number of attacks that succeed in defeating the sandbox.”

The overall in-nimbo system is structured to separate a component of interest from its operating environment. The component of interest is relocated from the operating environment to the ephemeral cloud environment and is replaced (in the operating environment) by a specialized transduction mechanism which manages interactions with the now-remote component. The cloud environment hosts a container for the component of interest which interacts over the network with the operating environment. This reduces the internal attack surface in the operating environment, effectively affording defenders a mechanism to design and regulate attack surfaces for high-value assets. This approach naturally supports defense-in-depth ideas through layering on both sides.

The authors compare in-nimbo sandboxes with environments (Polaris and Terra) whose designs approach an idealized ephemeral computing environment. Cloud-based sandboxing can closely approximate such ephemeral environments. The “ephemerality” results from isolating the cloud-hosted virtual computing resource from other (perhaps higher-value) resources through a combination of virtualization and separate infrastructure for storage, processing, and communication. Assuming the persistent state is relatively minimal (e.g., configuration information), it can be hosted anywhere, so the cloud environment need persist only long enough to perform a computation before its full state is discarded and the environment is refreshed.
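The structure described above, a local transduction mechanism standing in for a component that now runs in a disposable remote environment, can be sketched in Python. This is a toy model under loud assumptions: the "cloud" container is simulated in-process by a temporary directory that is created for each computation and deleted afterwards, the network hop is a plain method call, and the component is a hypothetical stand-in for something like a document viewer. It illustrates the interface and the ephemerality only; the security properties in the paper come from separate cloud infrastructure, which a temporary directory does not provide.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict

Component = Callable[[Path, bytes], bytes]  # (scratch directory, input) -> output


class EphemeralContainer:
    """Stand-in for the cloud-side container. Each call runs in a fresh scratch
    directory that is deleted afterwards, so nothing the component writes persists.
    (A temporary directory is NOT an isolation boundary; it only models ephemerality.)"""

    def __init__(self, component: Component, config: Dict[str, str]) -> None:
        self._component = component
        self._config = dict(config)  # the minimal persistent state, kept outside the environment

    def run(self, payload: bytes) -> bytes:
        # TemporaryDirectory deletes the directory and everything in it on exit.
        with tempfile.TemporaryDirectory() as scratch:
            workdir = Path(scratch)
            for name, text in self._config.items():
                (workdir / name).write_text(text)  # re-seed configuration on every call
            return self._component(workdir, payload)


class Transducer:
    """Local replacement for the relocated component: same interface, but it only
    forwards inputs to the remote container and relays the results back."""

    def __init__(self, remote: EphemeralContainer) -> None:
        self._remote = remote

    def render(self, document: bytes) -> bytes:
        return self._remote.run(document)


if __name__ == "__main__":
    scratch_dirs = []

    def toy_viewer(workdir: Path, document: bytes) -> bytes:
        # Hypothetical component that tries to leave a file behind.
        (workdir / "dropped.bin").write_bytes(b"persistence attempt")
        scratch_dirs.append(workdir)
        return document.upper()

    viewer = Transducer(EphemeralContainer(toy_viewer, {"viewer.cfg": "mode=read-only"}))
    print(viewer.render(b"sample document"))  # b'SAMPLE DOCUMENT'
    print(scratch_dirs[0].exists())           # False: the environment was discarded
```

The design point is that callers keep using `render` unchanged, while everything the component touches lives only as long as one computation.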

Using Adobe Reader as an example component of interest, the team built an in-nimbo sandbox and compared results with the usual in-situ deployment of Reader. Within some constraints, they concluded that the in-nimbo sandboxes could usefully perform potentially vulnerable or malicious computations away from the environment being defended.

They conclude with a multi-criteria argument for why their sandbox improves security, building on both quantitative and qualitative scales because many security dimensions cannot yet be feasibly quantified. They suggest that structured criteria-based reasoning that is built on familiar security-focused risk calculus can lead to solid conclusions. They indicate that this work is a precursor to an extensive study, still being completed, that evaluates more than 70 examples of sandbox designs and implementations against a range of identified criteria. This study employs a range of technical, statistical, and content-analytic approaches to map the space of encapsulation techniques and outcomes.

Carnegie Mellon University's Science of Security Lablet (SOSL) is one of four Lablets funded by NSA to address the hard problems of cybersecurity. The five hard problems are scalability and composability, policy-governed secure collaboration, predictive security metrics, resilient architectures, and human behavior. The in-nimbo sandbox supports efforts to develop predictive metrics, as well as work on scalability and composability. The broad goal of SOSL is "to identify scientific principles that can lead to approaches to the development, evaluation, and evolution of secure systems at scale." More about the CMU Lablet and its research goals can be found on the CPS-VO web page at http://cps-vo.org/group/sos/lablet/cmu.

The original article is available at the ACM Digital Library as: Maass, Michael; Scherlis, William L.; Aldrich, Jonathan. “In-Nimbo Sandboxing.” Proceedings of the 2014 Symposium and Bootcamp on the Science of Security (HotSoS '14), Raleigh, North Carolina, April 2014, pp. 1:1-1:12. ISBN: 978-1-4503-2907-1. doi:10.1145/2600176.2600177

URL: http://doi.acm.org/10.1145/2600176.2600177

(ID#: 15-5936)

Computational Cybersecurity in Compromised Environments Workshop

The Special Cyber Operations Research and Engineering (SCORE) Subcommittee sponsored the 2014 Computational Cybersecurity in Compromised Environments (C3E) Workshop at the Georgia Tech Research Institute (GTRI) Conference Center from 20 to 22 October 2014. The research workshop brought together a diverse group of top academic, industry, and government experts to examine new ways of approaching the cybersecurity challenges facing the Nation and of enabling smart, real-time decision-making in cyberspace in the face of both “normal” complexity and persistent adversarial behavior.

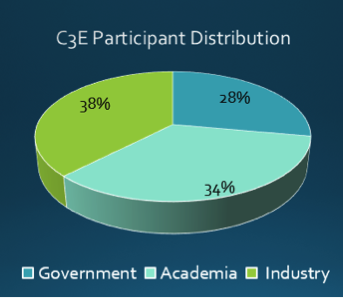

Figure 1: C3E Participation by Organizational Category

This was the sixth in a series of annual C3E research workshops, building on work done between 2009 and 2013 on adversarial behavior, the role of models and data, predictive analytics, and how best to employ human and machine-based decisions in the face of emerging cyber threats. Since its inception, C3E has had two overarching objectives: 1) developing ideas worthy of additional cyber research; and 2) developing a Community of Interest (COI) around unique analytic and operational approaches to the persistent cyber security threat.

C3E 2014 continued to focus on the needs of the practitioner and to leverage past C3E themes of predictive analytics, decision-making, consequences, and visualization. To improve the prospects of deriving applicable and adoptable results from the track work, the track topics, Security by Default and Data Integrity, were selected based on recommendations from senior government officials.

To accomplish its objectives, C3E 2014 drew upon the approaches that have been used in C3E since the beginning: 1) Keynote Speakers who provided tailored and provocative talks on a wide range of topics related to cyber security; 2) a Discovery or Challenge Problem that attracted the analytic attention of participants prior to C3E; and especially 3) Track Work that involved dedicated, small group focus on Security by Default and Data Integrity.

The C3E 2014 Discovery Problem was a Metadata-based Malicious Cyber Discovery Problem. Its first goal was to invent and prototype approaches, ones that challenge the way we traditionally think about the cyber problem, for identifying high-interest, suspicious, and likely malicious behaviors from metadata. The second goal was to introduce participants to the DHS Protected Repository for the Defense of Infrastructure against Cyber Threats (PREDICT) datasets for use on this and future research problems. PREDICT is a rich repository containing routing (BGP) data, naming (DNS) data, application data (net analyzer), infrastructure data (census probe), and security data (botnet sinkhole data, dark data, etc.). Its hundreds of actual and simulated dataset categories provide samples of cyber data for researchers. DHS has addressed and resolved the legal and ethical issues concerning PREDICT, and the researchers found the PREDICT datasets to be a valuable resource for the C3E 2014 Discovery Problem. Each of the five groups that worked on the Discovery Problem presented its findings: 1) An Online Behavior Modeling Based Approach to Metadata-Based Malicious Cyber Discovery; 2) Implementation Based Characterization of Network Flows; 3) APT Discovery Beyond Time and Space; 4) Genomics Inspired Cyber Discovery; and 5) C3E Malicious Cyber Discovery: Mapping Access Patterns. Several government organizations have expressed interest in follow-up discussions on a couple of the approaches.
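Purely as an illustration of what identifying suspicious behavior from metadata alone can look like, the sketch below applies one well-known heuristic, beaconing detection: malware that checks in with a command-and-control server often connects at near-constant intervals, which is visible in connection timestamps without inspecting any payload. This is not the method of any of the five groups, the (source, destination, timestamp) record format is an assumption rather than the PREDICT schema, and the thresholds `min_events` and `max_cv` are arbitrary.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Flow = Tuple[str, str, float]  # (source, destination, timestamp in seconds): metadata only


def beacon_candidates(flows: List[Flow], min_events: int = 5,
                      max_cv: float = 0.1) -> Dict[Tuple[str, str], float]:
    """Return {(source, destination): mean interval} for pairs whose connections
    recur at suspiciously regular intervals (low coefficient of variation)."""
    times: Dict[Tuple[str, str], List[float]] = {}
    for src, dst, ts in flows:
        times.setdefault((src, dst), []).append(ts)

    flagged: Dict[Tuple[str, str], float] = {}
    for pair, stamps in times.items():
        if len(stamps) < min_events:
            continue  # too few observations to judge regularity
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        avg = mean(gaps)
        # Coefficient of variation = stdev / mean; near zero means clockwork timing.
        if avg > 0 and pstdev(gaps) / avg <= max_cv:
            flagged[pair] = avg
    return flagged


# Invented example: one host checks in every 60 s; another browses irregularly.
flows = [("10.0.0.5", "203.0.113.7", 60.0 * i) for i in range(6)]
flows += [("10.0.0.9", "198.51.100.2", t) for t in (0.0, 5.0, 300.0, 310.0, 900.0, 2000.0)]
print(beacon_candidates(flows))  # {('10.0.0.5', '203.0.113.7'): 60.0}
```

Using the coefficient of variation keeps the threshold independent of the beacon period. In real traffic, legitimate periodic services such as update checks also beacon, and malware often adds jitter, so a heuristic like this produces leads for an analyst rather than verdicts.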

The purpose of the Data Integrity Track was to engage a set of researchers from diverse backgrounds to elicit research themes that could improve both understanding of cyber data integrity issues and potential solutions that could be developed to mitigate them. Track participants addressed data integrity issues associated with finance and health/science, and captured relevant characteristics, as shown below.

Figure 2: Characteristics of Data Integrity

Track participants also identified potential solutions and research themes for addressing data integrity issues: 1) Diverse workflows and sensor paths; 2) Cyber insurance and regulations; and 3) Human-in-the-loop data integrity detection.

The Security by Default (SBD) track addressed whether secure, “out of the box” systems, i.e., systems that are secure when they are fielded, can be created. There is a perception among stakeholders that systems engineered and/or configured to be secure by default may be less functional, less flexible, and more difficult to use, which helps explain the market bias toward insecure initial designs and configurations. Track participants identified five areas of focus that might merit additional study: 1) The building code analogy: balancing the equities; 2) Architecture and design: expressing security problems understandably; 3) Architecture and design: delivering and assuring secure components and infrastructure; 4) Biological analogs: supporting adaptiveness and architectural dynamism; and 5) Usability and metrics: rethinking the “trade-off” of security and usability.
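A minimal sketch of what "secure by default" can mean at the configuration level, assuming a hypothetical service whose settings are modeled as a Python dataclass: a system fielded with no configuration at all gets the secure settings, and any loosening is an explicit change that can be listed and reviewed. The setting names and values are invented for illustration.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class ServiceConfig:
    """Hypothetical settings; every default is the secure choice."""
    require_tls: bool = True
    allow_default_password: bool = False
    remote_admin_enabled: bool = False
    session_timeout_minutes: int = 15


def deviations_from_default(config: ServiceConfig) -> List[str]:
    """Names of settings that were explicitly changed from their secure defaults."""
    return [f.name for f in fields(config) if getattr(config, f.name) != f.default]


out_of_the_box = ServiceConfig()  # fielded with no configuration at all
print(deviations_from_default(out_of_the_box))  # []

loosened = ServiceConfig(remote_admin_enabled=True)  # an explicit, reviewable opt-out
print(deviations_from_default(loosened))  # ['remote_admin_enabled']
```

This does not remove the perceived functionality trade-off the track discussed; it moves the burden so that reduced security, rather than security, is the choice that requires deliberate action.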

In preparation for the next C3E workshop, a core group of participants will meet to refine specific approaches, and, consistent with prior workshops, will identify at least two substantive track areas through discussions with senior government leaders.

C3E remains focused on cutting-edge technology and on understanding how people interact with systems and networks. In evaluating the 2014 workshop, fully 92% of the participants were both interested in the areas discussed and believed that the other participants were contributors in the field. While C3E is largely research-oriented, the workshops have begun to incorporate practical examples of how government, scientific, and industry organizations are actually using advanced analysis and analytics in their daily business. These examples create a path to applications for the practitioner and thus help provide real solutions to cyber problems.

(ID#: 15-5935)

Improving Power Grid Cybersecurity

Illinois Research Efforts Spark Improvements in Power Grid Cybersecurity.

The Information Trust Institute (ITI) at the University of Illinois at Urbana-Champaign has a broad research portfolio. In addition to its work as a Science of Security Lablet, where it is contributing broadly to the development of security science in resiliency (a system's ability to maintain security properties during ongoing cyber attacks), ITI has been working on issues related to the electric power infrastructure and the development of a stronger, more resilient power grid.

The Trustworthy Cyber Infrastructure for the Power Grid (TCIPG) project is a partnership among Illinois, Dartmouth, Arizona State, and Washington State Universities, as well as government and industry. TCIPG was formed to address the health of the computing and communication network infrastructure underlying the grid, which is at serious risk both from malicious attacks on grid components and networks and from accidental causes such as natural disasters, misconfiguration, and operator errors. Its researchers collaborate continually with the national laboratories and the utility sector to protect the U.S. power grid by significantly improving how the grid infrastructure is designed, making it more secure, resilient, and safe.

TCIPG comprises several dozen researchers, students, and staff who bring interdisciplinary expertise essential to the operation and public adoption of current and future grid systems. That expertise extends to power engineering; computer science and engineering; advanced communications and networking; smart grid markets and economics; and Science, Technology, Engineering, and Math (STEM) education.

Ten years ago, the electricity sector was largely “security-unaware.” Since then, thanks in part to TCIPG, security best practices have been broadly adopted. That transition came about through breakthrough research, national expert panels, and the writing of key documents. However, because the threat landscape continuously evolves, resiliency in a dynamic environment is key, and it remains an area where continuous improvement is needed.

TCIPG Research in Smart Grid Resiliency

Countering threats to the nation’s cyber systems in critical infrastructure such as the power grid has become a major strategic objective and was identified as such in Homeland Security Presidential Directive 7. Smart grid technologies promise advances in efficiency, reliability, integration of renewable energy sources, customer involvement, and new markets. But to achieve those benefits, the grid must rely on a cyber-measurement and control infrastructure that includes components ranging from smart appliances at customer premises to automated generation control. Control systems and administrative systems are no longer separated by an air gap, so securing the boundary between them has become more complex.

TCIPG research has produced important results and innovative technologies that address this need and its complexity, focusing on the following areas:

- Detecting and responding to cyber attacks and adverse events, and managing the resulting incidents.

- Securing the wide-area measurement system on which the smart grid relies.

- Maintaining power quality and integrating renewables at multiple scales in a dynamic environment.

- Advanced testbeds for experiments and simulation using actual power system hardware “in the loop.”

Much of this work has been achieved because of the success of the experimental testbed.

Testbed Cross-Cutting Research

Experimental validation is critical for emerging research and technologies. The TCIPG testbed enables researchers to conduct, validate, and evolve cyber-physical research from fundamentals to prototype, and finally, transition to practice. It provides a combination of emulation, simulation, and real hardware to realize a large-scale, virtual environment that is measurable, repeatable, flexible, and adaptable to emerging technology while maintaining integration with legacy equipment. Its capabilities span the entire power grid: transmission, distribution & metering, distributed generation, and home automation and control – providing true end-to-end capabilities for the smart grid.

The cyber-physical testbed facility uses a mixture of commercial power system equipment and software, hardware and software simulation, and emulation to create a realistic representation of the smart grid. This representation can be used to experiment with next-generation technologies that span communications from generation to consumption and everything in between. In addition to offering a realistic environment, the testbed facility is instrumented with cutting-edge research and commercial tools to explore problems from multiple dimensions, tackling in-depth security analysis and testing, visualization and data mining, and federated resources, and developing novel techniques that integrate these systems in a composable way.

A parallel project funded by the State of Illinois, the Illinois Center for a Smarter Electric Grid (ICSEG), is a five-year effort to develop and operate a facility that provides services for validating the information technology and control aspects of Smart Grid systems, including microgrids and distributed energy resources. The key objective of this project is to test and validate, in a laboratory setting, how new and more cost-effective Smart Grid technologies, tools, techniques, and system configurations can be used in trustworthy configurations to significantly improve on those in common practice today. The laboratory is also a resource that Smart Grid equipment suppliers, integrators, and electric utilities can use to validate system designs before deployment.

Education and Outreach

In addition to basic research, TCIPG has addressed needs in education and outreach. Nationally, there is a shortage of professionals who can fill positions in the power sector. Skills required for smart grid engineers have changed dramatically. Graduates of the collaborating TCIPG universities are well-prepared to join the cyber-aware grid workforce as architects of the future grid, as practicing professionals, and as educators.

TCIPG has conducted short courses for practicing engineers and for DOE program managers. In addition to a biennial TCIPG Summer School for university students and researchers, utility and industry representatives, and government and regulatory personnel, TCIPG organizes a monthly webinar series featuring thought leaders in cybersecurity and resiliency in the electricity sector. In alignment with national STEM educational objectives, TCIPG conducts extensive STEM outreach to K-12 students and teachers. TCIPG has developed interactive, open-ended apps (iOS, Android, MinecraftEdu) for middle-school students, along with activity materials and teacher guides to facilitate integration of research, education, and knowledge transfer by linking researchers, educators, and students.

The electricity industry in the U.S. is made up of thousands of utilities, equipment and software vendors, consultants, and regulatory bodies. In both its NSF-funded and DOE/DHS-funded phases, TCIPG has actively developed extensive relationships with such entities and with other researchers in the sector, including joint research with several national laboratories.

The involvement of industry and other partners in TCIPG is vital to its success, and is facilitated by an extensive Industry Interaction Board (IIB) and a smaller External Advisory Board (EAB). The EAB, with which TCIPG interacts closely, includes representatives from the utility sector, system vendors, and regulatory bodies, in addition to DOE-OE and DHS S&T.

Partnerships & Impact

While university-led, TCIPG has always stressed real-world impact and industry partnerships, and several of its technologies have been taken up by the private sector.

- Several TCIPG technologies have been or are currently deployed on a pilot basis in real utility environments.

- A leading equipment vendor adopted TCIPG advanced technologies for securing embedded systems in grid controls.

- Three startup companies in various stages of launch employ TCIPG foundational technologies.

Leadership

Director: Professor William H. Sanders, who is also Co-PI for the UIUC Science of Security Lablet

Personal web page: http://www.crhc.illinois.edu/Faculty/whs.html

TCIPG URL: http://tcipg.org/about-us

Recent publications arising from TCIPG’s work:

- CPINDEX: Cyber-Physical Vulnerability Assessment for Power-Grid Infrastructures

- Real Time Modeling and Simulation of Cyber-Power System

- A Hybrid Network IDS for Protective Digital Relays in the Power Transmission Grid

- Power system analysis criteria-based computational efficiency enhancement for power flow and transient stability

- Cyber Physical Security for Power Grid Protection

- An Analysis of Graphical Authentication Techniques for Mobile Platforms as Alternatives to Passwords

- Portunes: Privacy-Preserving Fast Authentication for Dynamic Electric Vehicle Charging

- Secure Data Collection in Constrained Tree-Based Smart Grid Environments

- Practical and Secure Machine-to-Machine Data Collection Protocol in Smart Grid

- Searchable Encrypted Mobile Meter

- Context-sensitive Key Management for Smart Grid Telemetric Devices

URL: http://tcipg.org/research/

(ID#: 15-5941)

Selection of Android Graphic Pattern Passwords

Lablet partners study mobile passwords, human behavior

Android mobile phones come with four built-in access methods: a finger swipe, a pattern, a PIN, or a password, listed in ascending order of security. In the pattern method, the user selects a pattern by traversing a 3x3 grid of points. A pattern must contain at least four points, cannot use a point twice, and must be drawn as one connected path; a stroke cannot skip over a point that has not already been selected. The pattern can be visible or hidden as it is drawn. When users enable this feature, how do they trade off security against usability? And are there visual cues that lead users to prefer one pattern over another, whether for usability or for security? Researchers at the U.S. Naval Academy and Swarthmore College, partners of the University of Maryland Science of Security Lablet, conducted a large user study of preferences for the usability and security of the Android password pattern to provide insight into the user perceptions that inform this choice.
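The three pattern rules above can be expressed as a short check. The following is a minimal Python sketch, not code from the study or from Android; it assumes the nine points are numbered 0 to 8 in row-major order starting at the top-left, and the names `to_rc` and `is_valid_pattern` are hypothetical. It treats a sequence whose stroke passes over an unselected point as invalid (on a real device, Android adds such a point to the pattern automatically).

```python
from __future__ import annotations

from typing import Sequence

GRID = 3  # the pattern lock described above uses a 3x3 grid


def to_rc(point: int) -> tuple[int, int]:
    """Map a point index 0..8 (row-major, top-left = 0) to (row, col)."""
    return divmod(point, GRID)


def is_valid_pattern(points: Sequence[int]) -> bool:
    """Return True if `points` obeys the pattern rules described in the text."""
    # Rule 1: at least four contact points, all on the grid.
    if len(points) < 4 or any(not 0 <= p < GRID * GRID for p in points):
        return False
    # Rule 2: no point may be used twice.
    if len(set(points)) != len(points):
        return False
    # Rule 3: a stroke may not pass over a point that has not been selected yet.
    selected = {points[0]}
    for a, b in zip(points, points[1:]):
        (ra, ca), (rb, cb) = to_rc(a), to_rc(b)
        # A straight stroke passes exactly over a grid point only when both
        # coordinate sums are even; that point is the stroke's midpoint.
        if (ra + rb) % 2 == 0 and (ca + cb) % 2 == 0:
            middle = ((ra + rb) // 2) * GRID + (ca + cb) // 2
            if middle not in selected:
                return False
        selected.add(b)
    return True


print(is_valid_pattern([0, 1, 2, 5]))  # True: an L-shape along the top row
print(is_valid_pattern([0, 2, 5, 8]))  # False: 0 -> 2 skips the unselected point 1
print(is_valid_pattern([1, 0, 2, 5]))  # True: 0 -> 2 passes over 1, already selected
```

The midpoint test works because on a 3x3 grid a stroke lands exactly on an intermediate point only when it spans two rows and/or two columns with no odd offset; knight-style strokes never do, which is why they are allowed.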

The study by Adam Aviv and Dane Fichter, “Understanding Visual Perceptions of Usability and Security of Android’s Graphical Password Pattern,” used a survey methodology that asked participants to choose between pairs of patterns, indicating either a security or a usability preference. By carefully selecting pattern pairs to isolate a single visual feature, the researchers measured visual perceptions of the usability and security of different features. The sample comprised 384 self-selected users recruited through Amazon Mechanical Turk. The researchers found that visual features attributable to complexity indicated a stronger perception of security, while spatial features, such as shifts up/down or left/right, were not strong indicators of either security or usability.

In their study, Aviv and Fichter selected pairs of patterns based on six visual features: Length (total number of contact points used), Crosses (which occur when the pattern doubles back on itself by tracing over a previously contacted point), Non-Adjacent (the total number of non-adjacent swipes, which occur when the pattern doubles back on itself by tracing over a previously contacted point), Knight-Moves (two spaces in one direction and then one space over in another direction), Height (amount the pattern is shifted towards the upper or lower contact points), and Side (amount the pattern is shifted towards the left or right contact points).

In each comparison, participants chose the password in the pair that better met a stated criterion, such as perceived security or perceived usability. Because each pair was constructed to differ in a particular visual feature, a consistent preference across pairs can be attributed to that feature, which is how its impact on users’ perception of security and usability was measured.
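To make the visual features concrete, the sketch below computes illustrative versions of five of them for a pattern given as point indices 0 to 8 (row-major from the top-left). It is an assumption-laden Python example, not the authors' feature extractor: Crosses are omitted, Height and Side are assumed to be the mean row and column offset from the centre of the grid, and the input is assumed to already be a valid pattern, so any stroke that jumps over a middle point is passing over a previously contacted point.

```python
from __future__ import annotations

from typing import Sequence

GRID = 3  # 3x3 grid, points numbered 0..8 row-major from the top-left


def pattern_features(points: Sequence[int]) -> dict[str, float]:
    """Compute illustrative versions of five of the study's visual features.

    Crosses are omitted; Height and Side use an assumed definition
    (mean row/column offset from the centre of the grid).
    """
    rc = [divmod(p, GRID) for p in points]
    non_adjacent = knight_moves = 0
    for (ra, ca), (rb, cb) in zip(rc, rc[1:]):
        dr, dc = abs(ra - rb), abs(ca - cb)
        if {dr, dc} == {1, 2}:
            knight_moves += 1  # two over, one across: like a chess knight
        elif dr == 2 or dc == 2:
            non_adjacent += 1  # the stroke passes over a middle point
    centre = (GRID - 1) / 2
    return {
        "length": len(points),
        "non_adjacent": non_adjacent,
        "knight_moves": knight_moves,
        # negative = shifted up / left, positive = shifted down / right
        "height": sum(r for r, _ in rc) / len(rc) - centre,
        "side": sum(c for _, c in rc) / len(rc) - centre,
    }


print(pattern_features([0, 5, 6, 1]))
# {'length': 4, 'non_adjacent': 0, 'knight_moves': 3, 'height': -0.25, 'side': -0.25}
```

A pair of patterns that differ in only one of these values is the kind of pair the survey design relies on to isolate a single feature.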

The researchers concluded that spatial features have little impact, but more visually striking features have a stronger impact, with the length of the pattern being the strongest indicator of preference. These results were extended and applied by constructing a predictive model with a broader set of features from related work, and the researchers found that the distance factor, the total length of all the lines in a pattern, is the strongest predictor of preference. These findings provide insight into users’ visual calculus when assessing a password, and the information may be used to develop new password systems or user selection tools, like password meters.
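The distance factor (the total length of all the lines in a pattern) separates patterns that the Length feature treats as equal. The short Python sketch below assumes adjacent grid points are one unit apart and points are numbered 0 to 8 row-major from the top-left; the function name is hypothetical and the calculation is an illustration, not the study's model.

```python
import math

GRID = 3


def total_distance(points):
    """Sum of the Euclidean lengths of all strokes, with adjacent points 1 unit apart."""
    rc = [divmod(p, GRID) for p in points]
    return sum(math.dist(a, b) for a, b in zip(rc, rc[1:]))


# Both patterns use four contact points, but their stroke lengths differ.
print(total_distance([0, 1, 2, 5]))            # 3.0: three unit strokes
print(round(total_distance([0, 5, 6, 1]), 3))  # 6.708: three knight moves, each sqrt(5)
```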

Moreover, Aviv and Fichter concluded that, given a good predictive model of user preference, their findings could be applied to a broader set of passwords, including those not used in the survey, and that this research could be expanded. For example, ranking items based on learned pairwise preferences is an active research area in machine learning, and a resulting ranking metric over all possible patterns would be of great benefit to the community. Such a metric could enable new password selection procedures in which users are helped to identify a preferred, usable password that also meets a security requirement.
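One standard way to turn pairwise preferences into a ranking is the Bradley-Terry model, which assigns each item a strength such that the probability of preferring item i over item j is s_i / (s_i + s_j). The Python sketch below fits it with the classic minorize-maximize (MM) update; it is offered only as an example of the general technique, not as the method Aviv and Fichter used or proposed. The pattern labels "A", "B", "C" and the vote counts are invented for illustration, and the sketch assumes every pattern appears in at least one comparison.

```python
from __future__ import annotations

from collections import defaultdict


def bradley_terry(comparisons: list[tuple[str, str]], iters: int = 200) -> dict[str, float]:
    """Fit Bradley-Terry strengths from (preferred, other) pairs with the MM update.

    Assumes every item appears in at least one comparison.
    """
    items = sorted({x for pair in comparisons for x in pair})
    wins: dict[str, int] = defaultdict(int)
    games: dict[frozenset[str], int] = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        games[frozenset((winner, loser))] += 1

    strength = {i: 1.0 for i in items}
    for _ in range(iters):
        updated = {}
        for i in items:
            # Sum over opponents j of n_ij / (s_i + s_j), where n_ij = comparisons of i vs j.
            denom = sum(
                n / (strength[i] + strength[j])
                for pair, n in games.items()
                if i in pair
                for j in pair - {i}
            )
            updated[i] = wins[i] / denom
        total = sum(updated.values())
        strength = {i: s / total for i, s in updated.items()}  # normalise to sum 1
    return strength


# Invented survey votes: each tuple is (pattern preferred, pattern not preferred).
votes = ([("A", "B")] * 3 + [("B", "A")] + [("B", "C")] * 3 + [("C", "B")]
         + [("A", "C")] * 3 + [("C", "A")])
scores = bradley_terry(votes)
print(sorted(scores, key=scores.get, reverse=True))  # ['A', 'B', 'C']
```

Because the fitted strengths form a single scale, any two patterns can be compared, including pairs that never appeared together in a survey question.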

The study is available at: http://www.usna.edu/Users/cs/aviv/papers/p286-aviv.pdf

Adam J. Aviv, Assistant Professor, Computer Science, USNA (PI)

Email: aviv@usna.edu

Webpage: http://www.usna.edu/Users/cs/aviv/

(ID#: 15-5937)

SoS and Resilience for Cyber-Physical Systems Project

Nashville, TN

20 March 2015

On March 17 and 18, 2015, researchers from the four System Science of SecUrity and REsilience for Cyber-Physical Systems (SURE) project universities (Vanderbilt, Hawai’i, California-Berkeley, and MIT) met with members of NSA’s R2 Directorate to review their first six months of work. SURE is the NSA-funded project aimed at improving scientific understanding of resiliency, that is, robustness to reliability failures or faults and survivability against security failures and attacks in cyber-physical systems (CPS). The project addresses the question of how to design systems that are resilient despite significant decentralization of resources and decision-making.

Waseem Abbas and Xenofon Koutsoukos, Vanderbilt, listen to comments about resilient sensor designs from David Corman, National Science Foundation.

The project initially examined water distribution and surface traffic control architectures; air traffic control and satellite systems have since been added as further examples of the cyber-physical systems under study. Xenofon Koutsoukos, Professor of Electrical Engineering and Computer Science in the Institute for Software Integrated Systems (ISIS) at Vanderbilt University and the Principal Investigator (PI) for SURE, indicated that these additional cyber-physical systems are meant to demonstrate how the SURE methodologies apply across multiple systems. The main research thrusts are hierarchical coordination and control, science of decentralized security, reliable and practical reasoning about secure computation and communication, evaluation and experimentation, and education and outreach. The centerpiece is the project's testbed for evaluating CPS security and resilience.

The Resilient Cyber-Physical Systems (RCPS) Testbed supports evaluation and experimentation across the complete SURE research portfolio. The platform captures the physical, computational, and communication infrastructure; describes the deployment and configuration of security measures and algorithms; and provides entry points for injecting various attack or failure events. "Red Team" vs. "Blue Team" simulation scenarios are being developed. After the active design phase, in which both teams work in parallel and in isolation, the simulation is executed with no external user interaction, potentially several times. The winner is decided based on scoring weights and rules that are captured by the infrastructure model.
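The scoring step can be pictured as a weighted tally over the events logged in one or more unattended runs. The Python sketch below is purely hypothetical: the event names, the weights, and the `score` and `winner` functions are illustrative assumptions, not the RCPS testbed's actual rules, which the text says live in the infrastructure model.

```python
from __future__ import annotations

# Hypothetical event types and weights; in the RCPS testbed the actual
# scoring weights and rules are captured by the infrastructure model.
WEIGHTS: dict[str, tuple[str, int]] = {
    "attack_succeeded": ("red", 5),
    "service_outage": ("red", 2),
    "attack_detected": ("blue", 3),
    "attack_mitigated": ("blue", 4),
}


def score(runs: list[list[str]]) -> dict[str, int]:
    """Total each team's points over one or more unattended simulation runs."""
    totals = {"red": 0, "blue": 0}
    for events in runs:
        for event in events:
            team, points = WEIGHTS[event]
            totals[team] += points
    return totals


def winner(runs: list[list[str]]) -> str:
    """Return "red", "blue", or "tie" based on the weighted totals."""
    totals = score(runs)
    if totals["red"] == totals["blue"]:
        return "tie"
    return "red" if totals["red"] > totals["blue"] else "blue"


runs = [
    ["attack_succeeded", "attack_detected"],
    ["attack_detected", "attack_mitigated"],
]
print(score(runs), winner(runs))  # {'red': 5, 'blue': 10} blue
```

Summing over several runs reflects the text's note that a scenario may be executed more than once before a winner is decided.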

The Resilient Cyber-Physical System Testbed hardware component.

In addition to the testbed, ten research projects on resiliency were presented. These presentations covered both behavioral and technical subjects, including adversarial risk, active learning for malware detection, privacy modeling, actor networks, flow networks, control systems, software and software architecture, and information flow policies. The scope and format of the CPS-VO web site were also briefed. Details of these research presentations are given in a companion newsletter article.

See: http://cps-vo.org/node/18610.

In addition to Professor Koutsoukos, participants included his Vanderbilt colleagues Gabor Karsai, Janos Sztipanovits, Peter Volgyesi, Yevgeniy Vorobeychik and Katie Dey. Other participants were Saurabh Amin, MIT; Dusko Pavlovic, U. of Hawai'i; and Larry Rohrbough, Claire Tomlin, and Roy Dong from California-Berkeley. Government representatives from the National Science Foundation, Nuclear Regulatory Commission, and Air Force Research Labs also attended, as well as the sponsoring agency, NSA.

Vanderbilt graduate students Pranav Srinivas Kumar (L) and William Emfinger demonstrated the Resilient Cyber-Physical Systems Testbed.

(ID#: 15-5939)

Wyvern Programming Language Builds Secure Apps

CMU Wyvern Programming Language Builds Secure Apps, Promotes Composability

A wyvern is a mythical winged creature with a fire-breathing dragon's head, a poisonous bite, a scaly body, two legs, and a barbed tail. Deadly and stealthy by nature, wyverns are silent when flying and keep their shadows unseen. They hide in caves to protect their loot, and they are popular figures in heraldry and in electronic games. As the mythical wyvern protects its trove, so the Wyvern programming language is designed to help create secure programs to protect applications and data.

Led by Dr. Jonathan Aldrich of the Institute for Software Research (ISR), researchers at the Carnegie Mellon University (CMU) Science of Security Lablet (SOSL), along with Dr. Alex Potanin and collaborators at Victoria University of Wellington, have been developing Wyvern to build secure web and mobile applications. Wyvern is designed to help software engineers build such applications using several type-based, domain-specific languages (DSLs) within the same program. It exploits knowledge of the sublanguages (SQL, HTML, etc.) used in a program, based on types and their context, which indicate the format and typing of the data.

Dr. Aldrich and his team recognized that the proliferation of programming languages used in developing web and mobile applications is inefficient and thwarts scalability and composability. Software development has come a long way, but the web and mobile arenas nonetheless struggle to cobble together a "...mishmash of artifacts written in different languages, file formats, and technologies" (http://www.cs.cmu.edu/~aldrich/wyvern/spec-rationale.html).

Constructing web pages often requires HTML for structure, CSS for design, JavaScript to handle user interaction, and SQL to access the database back-end. The diversity of languages and tools used to create an application increases development time, cost, and security risk. It also creates openings for Cross-Site Scripting and SQL Injection attacks. Wyvern eliminates the need to build commands as character strings, as is commonly done, for instance, with SQL. When commands are assembled from strings, malicious users with a rough knowledge of a system's structure can inject destructive commands such as DROP TABLE, or manipulate the access controls in place. Instead, Wyvern is a pure object-oriented language that is value-based, statically type-safe, and supports functional programming. It supports HTML, SQL, and other web languages through a concept of “Composable Type-Specific Languages (TSLs).”
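The string-command problem described above is easy to reproduce in a mainstream language. The Python sketch below, which uses the standard-library `sqlite3` module rather than Wyvern, contrasts a query assembled by string concatenation with one that uses a `?` placeholder; the `users` table and the function names are hypothetical. Because `sqlite3` refuses to run more than one statement per `execute()` call, the attack shown is a tautology that widens the query rather than an appended DROP TABLE. Placeholders are the conventional library-level defence; Wyvern's aim is to make the safe form the natural one at the language level.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])
    return conn


def lookup_unsafe(conn, name):
    # The command is assembled from strings, so input becomes part of the SQL code.
    return conn.execute("SELECT role FROM users WHERE name = '" + name + "'").fetchall()


def lookup_safe(conn, name):
    # The "?" placeholder binds input as data; it is never parsed as SQL.
    return conn.execute("SELECT role FROM users WHERE name = ?", (name,)).fetchall()


conn = make_db()
attack = "nobody' OR '1'='1"
print(lookup_unsafe(conn, attack))  # the injected OR clause matches every row
print(lookup_safe(conn, attack))    # no user is literally named that, so []
```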

Composable Type-Specific Languages are the equivalent of a "...skilled international negotiator who can smoothly switch between languages...," according to Dr. Aldrich. The system can discern which sublanguage is being used through context, much as “...a person would realize that a conversation about gourmet dining might include some French words and phrases.” Wyvern strives to provide flexible syntax, using an internal DSL strategy; static type-checking based on defined rules in Wyvern-internal DSLs; secure language and library constructs, providing secure built-in datatypes and database access through an internal DSL; and high-level abstractions, with which programmers will be able to define an application's architecture, to be enforced by the type system and implemented by the compiler and runtime. Wyvern follows the principle that objects should only be accessible by invoking their methods. With Wyvern's TSLs, a type's literal syntax is invoked only when a literal appears in a context where that type is expected, ensuring non-interference between sublanguages (Omar 2014, at: http://www.cs.cmu.edu/~aldrich/papers/ecoop14-tsls.pdf).
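The idea of type-directed literal parsing can be mimicked, very loosely, in Python. In the sketch below, each type owns the parser for its own literal syntax, and a literal body is handed to the parser of whichever type is expected at that position, so SQL and HTML literals never compete for the same syntax and spliced values become structured data rather than pasted-together text. This is a hypothetical run-time imitation, not Wyvern syntax or semantics: Wyvern performs this dispatch and checking statically at compile time, and the types `Query` and `Html`, the `{name}` splice syntax, and the `elaborate` function are all illustrative assumptions.

```python
from __future__ import annotations

import html
import re
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Query:
    """Structured SQL value: fixed SQL text plus separately bound parameters."""
    sql: str
    params: tuple[Any, ...]


@dataclass(frozen=True)
class Html:
    """Markup in which every spliced value has been HTML-escaped."""
    markup: str


SPLICE = re.compile(r"\{(\w+)\}")  # toy splice syntax: {name}


def parse_sql(body: str, env: dict[str, Any]) -> Query:
    # Spliced values become bound parameters, never SQL text.
    names = SPLICE.findall(body)
    return Query(SPLICE.sub("?", body), tuple(env[n] for n in names))


def parse_html(body: str, env: dict[str, Any]) -> Html:
    # Spliced values are escaped, so they cannot introduce new tags.
    return Html(SPLICE.sub(lambda m: html.escape(str(env[m.group(1)])), body))


# Each type owns the parser for its literal syntax.
LITERAL_PARSERS: dict[type, Callable[[str, dict[str, Any]], Any]] = {
    Query: parse_sql,
    Html: parse_html,
}


def elaborate(body: str, expected: type, env: dict[str, Any]) -> Any:
    """Parse a literal according to the type expected at its position."""
    parser = LITERAL_PARSERS.get(expected)
    if parser is None:
        raise TypeError(f"{expected.__name__} defines no literal syntax")
    return parser(body, env)


env = {"name": "<b>x'); DROP TABLE users;--</b>"}
q = elaborate("SELECT role FROM users WHERE name = {name}", Query, env)
h = elaborate("<p>Hello, {name}</p>", Html, env)
print(q.sql, q.params)
print(h.markup)
```

The toy `{name}` splice syntax would misread braces that legitimately appear inside SQL string constants; a real TSL parser, as in Wyvern, works from a proper grammar for each sublanguage.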

Wyvern is an ongoing project hosted at the open-source site GitHub. Interested potential users may explore the language at: https://github.com/wyvernlang/wyvern. Interest in the Wyvern programming language has been growing in the security world. Gizmag reviews Wyvern, describing it as “something of a meta-language,” and agrees that the web would be a much more secure place if not for vulnerabilities due to the common coding practice of “pasted-together strings of database commands” (Moss 2014, accessed at: http://www.gizmag.com/wyvern-multiple-programming-languages/33302/#comments). The CMU Lablet and Wyvern were also featured in a press release by SD Times, which mentions the integration of multiple languages, citing flexibility in terms of additional sublanguages and easy-to-implement compilers. The article may be accessed at: http://sdtimes.com/wyvern-language-works-platforms-interchangeably/. Communications of the ACM (CACM) describes Wyvern as a host language that allows developers to import other languages for use on a project, but warns that Wyvern, as a meta-language, could itself be vulnerable to attack. The CACM article can be accessed at: http://cacm.acm.org/news/178649-new-nsa-funded-programming-language-could-closelong-standing-security-holes/fulltext.

The Wyvern project is part of the research being done by the Carnegie Mellon University Science of Security Lablet, supported by NSA and other agencies, to address hard problems in cybersecurity, including scalability and composability. Other hard problems being addressed include policy-governed secure collaboration, predictive security metrics, resilient architectures, and human behavior.

References and Publications

A description of the Wyvern Project is available on the CPS-VO web page at: http://cps-vo.org/node/15054. A succinct PowerPoint presentation about Wyvern, with specific examples, may be accessed at: http://www.cs.cmu.edu/~comar/GlobalDSL13-Wyvern.pdf. As of March 15, 2015, Wyvern is publicly distributed on GitHub under a GPLv2 license: https://github.com/wyvernlang/wyvern.

The latest research work on Wyvern was presented at PLATEAU ’14 in Portland, Oregon. That paper is available on the ACM Digital Library as:

Darya Kurilova, Alex Potanin, Jonathan Aldrich; Wyvern: Impacting Software Security via Programming Language Design; PLATEAU '14 Proceedings of the 5th Workshop on Evaluation and Usability of Programming Languages and Tools; October 2014, Pages 57-58; doi:10.1145/2688204.2688216

Abstract: Breaches of software security affect millions of people, and therefore it is crucial to strive for more secure software systems. However, the effect of programming language design on software security is not easily measured or studied. In the absence of scientific insight, opinions range from those that claim that programming language design has no effect on security of the system, to those that believe that programming language design is the only way to provide "high-assurance software." In this paper, we discuss how programming language design can impact software security by looking at a specific example: the Wyvern programming language. We report on how the design of the Wyvern programming language leverages security principles, together with hypotheses about how usability impacts security, in order to prevent command injection attacks. Furthermore, we discuss what security principles we considered in Wyvern's design.

Keywords: command injection attacks, programming language, programming language design, security, security principles, usability, wyvern

URL: http://doi.acm.org/10.1145/2688204.2688216

An earlier work by the research group is also available at: Darya Kurilova, Cyrus Omar, Ligia Nistor, Benjamin Chung, Alex Potanin, Jonathan Aldrich; Type Specific Languages To Fight Injection Attacks; HotSoS '14 Proceedings of the 2014 Symposium and Bootcamp on the Science of Security, April 2014, Article No. 18; doi:10.1145/2600176.2600194

Abstract: Injection vulnerabilities have topped rankings of the most critical web application vulnerabilities for several years. They can occur anywhere where user input may be erroneously executed as code. The injected input is typically aimed at gaining unauthorized access to the system or to private information within it, corrupting the system's data, or disturbing system availability. Injection vulnerabilities are tedious and difficult to prevent.

Keywords: extensible languages; parsing; bidirectional typechecking; hygiene

URL: http://doi.acm.org/10.1145/2600176.2600194

Other Publications Related to Wyvern:

Joseph Lee, Jonathan Aldrich, Troy Shaw, and Alex Potanin; A Theory of Tagged Objects; In Proceedings European Conference on Object-Oriented Programming (ECOOP), 2015.

http://ecs.victoria.ac.nz/foswiki/pub/Main/TechnicalReportSeries/ECSTR15-03.pdf

Cyrus Omar, Chenglong Wang, and Jonathan Aldrich; Composable and Hygienic Typed Syntax Macros; In Proceedings of the 30th Annual ACM Symposium on Applied Computing (SAC '15), 2015; doi:10.1145/2695664.2695936

http://doi.acm.org/10.1145/2695664.2695936

Cyrus Omar, Darya Kurilova, Ligia Nistor, Benjamin Chung, Alex Potanin, and Jonathan Aldrich; Safely Composable Type-Specific Languages; In Proceedings, European Conference on Object-Oriented Programming, 2014.

http://www.cs.cmu.edu/~aldrich/papers/ecoop14-tsls.pdf

Jonathan Aldrich, Cyrus Omar, Alex Potanin, and Du Li; Language-Based Architectural Control; International Workshop on Aliasing, Capabilities, and Ownership (IWACO '14), 2014.

http://www.cs.cmu.edu/~aldrich/papers/iwaco2014-arch-control.pdf

Ligia Nistor, Darya Kurilova, Stephanie Balzer, Benjamin Chung, Alex Potanin, and Jonathan Aldrich; Wyvern: A Simple, Typed, and Pure Object-Oriented Language; Mechanisms for Specialization, Generalization, and Inheritance (MASPEGHI), 2013.

http://www.cs.cmu.edu/~aldrich/papers/maspeghi13.pdf

Cyrus Omar, Benjamin Chung, Darya Kurilova, Alex Potanin, and Jonathan Aldrich; Type-Directed, Whitespace-Delimited Parsing for Embedded DSLs; Globalization of Domain Specific Languages (GlobalDSL), 2013.

http://www.cs.cmu.edu/~aldrich/papers/globaldsl13.pdf

(ID#: 15-5938)
