Science of Security (SoS) Newsletter (2016 - Issue 4)

Each issue of the SoS Newsletter highlights achievements in current research, as conducted by various global members of the Science of Security (SoS) community. All presented materials are open-source, and may link to the original work or web page for the respective program. The SoS Newsletter aims to showcase the great deal of exciting work going on in the security community, and hopes to serve as a portal between colleagues, research projects, and opportunities.

Please feel free to click on any section of this issue of the Newsletter, which will bring you to the corresponding subsection:

- In the News

- Conferences

- Publications of Interest

- Upcoming Events of Interest.

Publications of Interest

The Publications of Interest section provides available abstracts and links for suggested academic and industry literature discussing specific topics and research problems in the field of SoS. Please check back regularly for new information, or sign up for the CPSVO-SoS Mailing List.

(ID#:16-8943)

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via Email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.

Cyber Scene #1

This addition to the Newsletter is intended to provide an informative, timely backdrop of events, thinking, and developments that feed into technological advancement of SoS Cybersecurity collaboration and extend its outreach.

The Center for Cyber and Homeland Security at George Washington University (GWU) hosted its Annual Strategic Conference on 3 May 2016 focused on “Public-Private Sector Coordination on Cybersecurity.” Across multiple sessions, a swath of public sector leaders as well as US intelligence community “titans”—former heads of CIA’s Counterterrorism Center, the National Counterterrorism and Counterintelligence Centers, and present DHS officials—combined engaging presentations with vibrant Q & A exchanges open to the public and captured live by CSPAN. This session also included foreign audience participants and French embassy officials, following up the French Minister of Interior Cazeneuve’s 11 March GWU presentation on the heels of this year’s Paris attacks. Among other pressing issues, one panel predicted an upturn in the public’s interest in cybersecurity due to lawsuits against private sector companies deficient in providing sufficient cybersecurity—and attendant US congressional and judicial response to define and regulate such legal action. See (video or text): http://www.c-span.org/video/?409023-2/george-washington-university-national-security-cybersecurity-conference

As SoS outreach continues its transoceanic direction, the view on US private-public sector from the UK’s thoughtful Economist is enlightening. In 16 April 2016’s article entitled “Encryption and the law: Scrambled regs,” The Economist casts the present situation as a cold war heating up and putting “America’s technology firms on a collision course with its policemen and spies.” It too looks at US Congressional activity as well as the implication for US domestic rules that leave “the truly dangerous” using more robust software written overseas. See: http://www.economist.com/news/united-states/21696937-cold-war-between-government-and-computing-firms-hotting-up-scrambled-regs.

(ID#: 16-9554)

Hot Research in Cybersecurity Fuels HotSoS 2016

Pittsburgh, PA

April 21, 2016

The 2016 Symposium and Bootcamp on the Science of Security (HotSoS) was held April 19-21 in Pittsburgh, PA at Carnegie Mellon University. Researchers from multiple academic fields came together for presentations demonstrating methodical, rigorous, scientific approaches to identify, prevent, and remove cyber threats. A major focus of the conference was the advancement of scientific methods, including data gathering and analysis, experimental methods, and mathematical models for modeling and reasoning.

Bill Scherlis, co-PI for the Carnegie Mellon Lablet, was conference co-chair. Introducing the event, he called for participants to interact and share ideas and thoughts and to ask questions about the nature of security and the nascent science that is emerging. Stuart Krohn, the initial program manager for the Science of Security initiative at NSA, welcomed the group. Noting the government’s long-term interest and commitment to their work, he challenged them to continue to address cybersecurity using strong scientific principles and rigorous methods. Four outside speakers addressed Science of Security from the perspectives of consumer interest, government policy, and industry, and on the value of a large graph method of analysis. Research papers, presentations, tutorials, and poster sessions rounded out the agenda.

Lorrie Cranor, CMU professor on loan to the Federal Trade Commission (FTC), addressed “Adventures in Usable Privacy and Security: From Empirical Studies to Public Policy.” Topics of interest to the FTC are quantifying privacy interests, disclosures, financial technologies, attack trends, improving complaint reporting, tools to automate tracking, targeted advertising, cross-device tracking, fraud, and emerging scams. “Good warnings help users determine whether they are at risk,” she said, but “people ignore poor warnings that put the onus of calculating risk on the end user.” She described a study that shows that password expiry is counterproductive.

Greg Shannon, CMU professor currently working at the White House Office of Science and Technology Policy (OSTP), spoke on the science challenges in “Trustworthy Cyberspace: Strategic Plan for the Federal Cybersecurity Research & Development Program” issued in February 2016. In the plan, OSTP is looking at the science and technology issues that affect policy and the policy issues that impact technology. The keys to addressing the problems, he said, include the creation of a Commission on Enhancing National Cybersecurity; a Federal CISO to take the lead on policies, oversight, and strategy; budgeting a $3.1 billion IT modernization fund; and working with industry to encourage broader use of security tools such as multi-factor authentication. The plan’s goals are to counter adversaries’ asymmetrical advantages; reverse those asymmetrical advantages; achieve science and technology advantages to achieve effective deterrence; and meet the long-term goal that cybersecurity research, development, and the operations community will be able to quickly design, develop, deploy, and operate effective new cybersecurity technologies and services; that cybersecurity tasks for users will be few and easy to accomplish; and that many adversaries will be deterred from launching malicious cyber activities.

Christos Faloutsos, Professor of Electrical and Computer Engineering at CMU, spoke on “Anomaly Detection in Large Graphs.” Citing recent research from several projects, he demonstrated the value of using graph theory to identify patterns that are otherwise hidden. Motivating his research are the problems of identifying such patterns for fraud detection and patterns in time-evolving graphs/tensors. His approach uses Hadoop to search for many clusters in parallel: starting from random seeds, it updates the sets of pages and like times for each cluster and repeats until convergence is achieved. This approach has been deployed at Facebook as CopyCatch, where it was determined that most clusters (77%) come from hard-to-detect real-but-compromised users and that easier-to-detect fake accounts make up only 22%. For eBay fraud detection, the technique has been used on the non-delivery scam. Using NetProbe allows detection of the scam by identifying the groups of people who are cross-rating each other to appear honest. The fraudulent nodes look trustworthy. His conclusions: patterns and anomalies go hand in hand; large data sets reveal patterns/outliers that are otherwise invisible.
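The iterate-until-convergence idea described above can be illustrated with a much-simplified sketch. It is not the CopyCatch implementation: it runs a single seed on one machine rather than many seeds in parallel on Hadoop, it keeps the set of pages fixed, and the names `find_lockstep_cluster`, `window`, and `min_fraction` as well as the sample data are hypothetical.

```python
from statistics import mean

def find_lockstep_cluster(likes, seed_pages, seed_times, window, min_fraction, max_iters=50):
    """Alternate between picking users and re-centering like times until stable.

    likes: dict mapping user -> {page: like_time}.
    Returns (users, centers), where centers maps each page to a center time.
    """
    centers = dict(zip(seed_pages, seed_times))
    users = set()
    for _ in range(max_iters):
        # Step 1: keep users who liked enough of the pages near each center time.
        needed = min_fraction * len(centers)
        new_users = {
            u for u, page_times in likes.items()
            if sum(1 for p, c in centers.items()
                   if p in page_times and abs(page_times[p] - c) <= window) >= needed
        }
        # Step 2: move each page's center to the mean like time of those users.
        new_centers = {}
        for p, c in centers.items():
            times = [likes[u][p] for u in new_users
                     if p in likes[u] and abs(likes[u][p] - c) <= window]
            new_centers[p] = mean(times) if times else c
        if new_users == users and new_centers == centers:
            break  # converged: neither the users nor the centers changed
        users, centers = new_users, new_centers
    return users, centers

# Hypothetical data: a, b, and c like both pages at nearly the same times.
likes = {
    "a": {"p1": 10, "p2": 20},
    "b": {"p1": 11, "p2": 21},
    "c": {"p1": 12, "p2": 19},
    "d": {"p1": 100, "p2": 300},
    "e": {"p1": 11},
}
users, centers = find_lockstep_cluster(likes, ["p1", "p2"], [9, 22], window=3, min_fraction=1.0)
```

In this toy data, users `a`, `b`, and `c` form the lockstep group, while `d` (different times) and `e` (only one page) are excluded.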

FireEye’s Matt Briggs gave “A View from the Front Lines with M-Trends.” In corporate cybersecurity, he said, continuing trends show that spear phishing remains the most common entry point and that most hacks are leveraging trust relationships to get in, especially leveraging IT outsourcing. New trends are described as “David v. Goliath:” the rise of business disruption attacks that are politically motivated, cause data leaks to embarrass the company, are financially motivated, and produce the destruction of critical systems. His “good news” was that in the last five years the median number of days before discovery has been reduced from 416 days to 146.

Tutorials about ways to add rigor to relatively soft data analysis were provided. The first, “Systematic Analysis of Qualitative Data in Security” was given by Hanan Hibshi, Carnegie Mellon University. Her tutorial introduced participants to Grounded Theory, a qualitative framework to discover new theory from an empirical analysis of data. It is useful, she said, when analyzing text, audio or video artifacts that lack structure, but contain rich descriptions.

Tao Xie, University of Illinois Urbana-Champaign, and William Enck, North Carolina State University, presented the second tutorial, “Text Analytics for Security.” Researchers in security and software engineering have begun using text analytics to create initial models of human expectation. In this tutorial, they provided an introduction to popular techniques and tools of natural language processing and text mining, and shared their experiences in applying text analytics to security problems.

Nine research papers were presented on studies about intrusion detection, threat modeling, anomaly detection, and attack variants. Thirteen posters were also offered. The HotSoS 2016 Proceedings will be published by ACM and made available online in the ACM Digital Library at: http://dl.acm.org/. Abstracts of the tutorials, papers and posters are available with their Digital Object Identifier in a companion document.

Read more about the event in the related Newsletter article “HotSoS 2016 Papers, Posters, and Tutorials” at http://cps-vo.org/node/26082

Both members and non-members of the Science of Security Virtual Organization can view the agenda and presentations on the CPS-VO web site. For non-members, information is available at: http://cps-vo.org/group/SoS.

(ID#: 16-9553)

Hot SoS 2016 Papers, Posters, and Tutorials

The 2016 Symposium and Bootcamp on the Science of Security (HotSoS) was held April 19-21 at Carnegie Mellon University in Pittsburgh, PA. Nine papers representing the work of forty-seven researchers and co-authors, two tutorials, and 14 posters were presented. This bibliography provides the abstract and author information for each. Papers are cited first, then tutorials, and finally posters in the order they were presented. While the conference proceedings will soon be available from the ACM Digital Library, this bibliography includes digital object identifiers that will allow the reader to find the document prior to publication. The names of the authors who presented the work at Hot SoS are underlined. More information about Hot SoS 2016 can be found on the CPS-VO web page at: http://cps-vo.org/node/24119

Hui Lin, Homa Alemzadeh, Daniel Chen, Zbigniew Kalbarczyk, Ravishankar Iyer; “Safety-critical Cyber-physical Attacks: Analysis, Detection, and Mitigation.” DOI:http://dx.doi.org/10.1145/2898375.2898391

Abstract: Today’s cyber-physical systems (CPSs) can have very different characteristics in terms of control algorithms, configurations, underlying infrastructure, communication protocols, and real-time requirements. Despite these variations, they all face the threat of malicious attacks that exploit the vulnerabilities in the cyber domain as footholds to introduce safety violations in the physical processes. In this paper, we focus on a class of attacks that impact the physical processes without introducing anomalies in the cyber domain. We present the common challenges in detecting this type of attack in the contexts of two very different CPSs (i.e., power grids and surgical robots). In addition, we present a general principle for detecting such cyber-physical attacks, which combines the knowledge of both cyber and physical domains to estimate the adverse consequences of malicious activities in a timely manner.

Keywords: (not provided)

Aron Laszka, Waseem Abbas, Shankar Sastry, Yevgeniy Vorobeychik, Xenofon Koutsoukos; “Optimal Thresholds for Intrusion Detection Systems.” DOI:http://dx.doi.org/10.1145/2898375.2898399

Abstract: Intrusion-detection systems can play a key role in protecting sensitive computer systems since they give defenders a chance to detect and mitigate attacks before they could cause substantial losses. However, an oversensitive intrusion-detection system, which produces a large number of false alarms, imposes prohibitively high operational costs on a defender since alarms need to be manually investigated. Thus, defenders have to strike the right balance between maximizing security and minimizing costs. Optimizing the sensitivity of intrusion detection systems is especially challenging in the case when multiple interdependent computer systems have to be defended against a strategic attacker, who can target computer systems in order to maximize losses and minimize the probability of detection. We model this scenario as an attacker-defender security game and study the problem.

Keywords: Intrusion detection system; game theory; economics of security; Stackelberg equilibrium; computational complexity
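The trade-off between false alarms and missed attacks described in this abstract can be illustrated with a minimal sketch. It assumes a single system and a non-strategic attacker, so it is far simpler than the attacker-defender game the paper studies; the function name, the cost parameters, and the sample scores are all hypothetical.

```python
def best_threshold(benign_scores, attack_scores, false_alarm_cost, missed_attack_loss):
    """Return (threshold, cost) minimizing total cost on the given samples.

    An alarm is raised when a score is >= threshold.
    """
    candidates = sorted(set(benign_scores) | set(attack_scores))
    candidates.append(candidates[-1] + 1)  # a threshold that never raises an alarm
    best = None
    for t in candidates:
        false_alarms = sum(1 for s in benign_scores if s >= t)
        misses = sum(1 for s in attack_scores if s < t)
        cost = false_alarms * false_alarm_cost + misses * missed_attack_loss
        if best is None or cost < best[1]:
            best = (t, cost)
    return best

# Hypothetical anomaly scores for benign and malicious events.
benign = [1, 2, 2, 3, 4, 6]
attacks = [5, 7, 8, 9]

cheap_alarms = best_threshold(benign, attacks, false_alarm_cost=1, missed_attack_loss=10)
costly_alarms = best_threshold(benign, attacks, false_alarm_cost=20, missed_attack_loss=10)
```

When investigating an alarm is cheap, the sketch picks a sensitive threshold (5) and accepts one false alarm; when alarms are expensive, it moves to a less sensitive threshold (7) and accepts one missed attack instead.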

Atul Bohara, Uttam Thakore, and William Sanders; “Intrusion Detection in Enterprise Systems by Combining and Clustering Diverse Monitor Data.” DOI:http://dx.doi.org/10.1145/2898375.2898400

Abstract: Intrusion detection using multiple security devices has received much attention recently. The large volume of information generated by these tools, however, increases the burden on both computing resources and security administrators. Moreover, attack detection does not improve as expected if these tools work without any coordination.

In this work, we propose a simple method to join information generated by security monitors with diverse data formats. We present a novel intrusion detection technique that uses unsupervised clustering algorithms to identify malicious behavior within large volumes of diverse security monitor data. First, we extract a set of features from network-level and host-level security logs that aid in detecting malicious host behavior and flooding-based network attacks in an enterprise network system. We then apply clustering algorithms to the separate and joined logs and use statistical tools to identify anomalous usage behaviors captured by the logs. We evaluate our approach on an enterprise network data set, which contains network and host activity logs. Our approach correctly identifies and prioritizes anomalous behaviors in the logs by their likelihood of maliciousness. By combining network and host logs, we are able to detect malicious behavior that cannot be detected by either log alone.

Keywords: Security, Monitoring, Intrusion Detection, Anomaly Detection, Machine Learning, Clustering

Jeffrey Carver, Morgan Burcham, Sedef Akinli Kocak, Ayse Bener, Michael Felderer, Matthias Gander, Jason King, Jouni Markkula, Markku Oivo, Clemens Sauerwein, Laurie Williams; “Establishing a Baseline for Measuring Advancement in the Science of Security – an Analysis of the 2015 IEEE Security & Privacy Proceedings.” DOI:http://dx.doi.org/10.1145/2898375.2898380

Abstract: In this paper we aim to establish a baseline of the state of scientific work in security through the analysis of indicators of scientific research as reported in the papers from the 2015 IEEE Symposium on Security and Privacy.

To conduct this analysis, we developed a series of rubrics to determine the completeness of the papers relative to the type of evaluation used (e.g. case study, experiment, proof). Our findings showed that while papers are generally easy to read, they often do not explicitly document some key information like the research objectives, the process for choosing the cases to include in the studies, and the threats to validity. We hope that this initial analysis will serve as a baseline against which we can measure the advancement of the science of security.

Keywords: Science of Security, Literature Review

Bradley Potteiger, Goncalo Martins, Xenofon Koutsoukos; “Software and Attack Centric Integrated Threat Modeling for Quantitative Risk Assessment.” DOI:http://dx.doi.org/10.1145/2898375.2898390

Abstract: Threat modeling involves understanding the complexity of the system and identifying all of the possible threats, regardless of whether or not they can be exploited. Proper identification of threats and appropriate selection of countermeasures reduces the ability of attackers to misuse the system.

This paper presents a quantitative, integrated threat modeling approach that merges software and attack centric threat modeling techniques. The threat model is composed of a system model representing the physical and network infrastructure layout, as well as a component model illustrating component specific threats. Component attack trees allow for modeling specific component contained attack vectors, while system attack graphs illustrate multi-component, multi-step attack vectors across the system. The Common Vulnerability Scoring System (CVSS) is leveraged to provide a standardized method of quantifying the low level vulnerabilities in the attack trees. As a case study, a railway communication network is used, and the respective results using a threat modeling software tool are presented.

Keywords: Quantitative risk assessment, threat modeling, cyber-physical systems
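To make the idea of scoring an attack tree from leaf-level CVSS values concrete, here is a minimal sketch. The aggregation rule is an assumption, not the paper's method: an OR node takes the highest child score and an AND node takes the lowest. The `Node` class, node names, and scores are hypothetical; the only fact relied on is that CVSS base scores range from 0.0 to 10.0.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    name: str
    kind: str = "leaf"    # "leaf", "and", or "or"
    score: float = 0.0    # CVSS base score (0.0 to 10.0); used by leaves only
    children: List["Node"] = field(default_factory=list)

def tree_score(node):
    """Aggregate leaf scores upward (assumed rule: AND -> min, OR -> max)."""
    if node.kind == "leaf":
        return node.score
    child_scores = [tree_score(child) for child in node.children]
    if node.kind == "and":
        return min(child_scores)  # every step is required, so the weakest one limits it
    if node.kind == "or":
        return max(child_scores)  # any single path suffices, so take the worst case
    raise ValueError(f"unknown node kind: {node.kind}")

# Hypothetical component attack tree with made-up scores.
root = Node("compromise component", "or", children=[
    Node("remote path", "and", children=[
        Node("exploit network service", score=7.5),
        Node("escalate privileges", score=5.0),
    ]),
    Node("physical access to port", score=6.1),
])
component_score = tree_score(root)
```

Other aggregation rules (for example, combining probabilities derived from the scores) would fit the same tree structure; only `tree_score` would change.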

Antonio Roque, Kevin Bush, Christopher Degni; “Security is about Control: Insights from Cybernetics.” DOI:http://dx.doi.org/10.1145/2898375.2898379

Abstract: Cybernetic closed loop regulators are used to model sociotechnical systems in adversarial contexts. Cybernetic principles regarding these idealized control loops are applied to show how the incompleteness of system models enables system exploitation. We consider abstractions as a case study of model incompleteness, and we characterize the ways that attackers and defenders interact in such a formalism. We end by arguing that the science of security is most like a military science, whose foundations are analytical and generative rather than normative.

Keywords: Computer Security, Cybernetics, Control Systems

Zhenqi Huang, Yu Wang, Sayan Mitra, Geir Dullerud; “Controller Synthesis for Linear Dynamical Systems with Adversaries.” DOI:http://dx.doi.org/10.1145/2898375.2898378

Abstract: We present a controller synthesis algorithm for a reach-avoid problem in the presence of adversaries. Our model of the adversary abstractly captures typical malicious attacks envisioned on cyber-physical systems such as sensor spoofing, controller corruption, and actuator intrusion. After formulating the problem in a general setting, we present a sound and complete algorithm for the case with linear dynamics and an adversary with a budget on the total L2-norm of its actions. The algorithm relies on a result from linear control theory that enables us to decompose and compute the reachable states of the system in terms of a symbolic simulation of the adversary-free dynamics and the total uncertainty induced by the adversary. With this decomposition, the synthesis problem eliminates the universal quantifier on the adversary's choices and the symbolic controller actions can be effectively solved using an SMT solver. The constraints induced by the adversary are computed by solving second-order cone programs. The algorithm is later extended to synthesize state-dependent controllers and to generate attacks for the adversary. We present preliminary experimental results that show the effectiveness of this approach on several example problems.

Keywords: Cyber-physical security, constraint-based synthesis, controller synthesis
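The decomposition mentioned in this abstract follows from the superposition property of linear systems. The notation below is an assumption made for illustration (the matrices $A$, $B$, $C$, the budget $b$, and the exact form of the budget constraint are not taken from the paper):

```latex
% Assumed discrete-time linear dynamics with control u_t and adversary input a_t
x_{t+1} = A x_t + B u_t + C a_t, \qquad \sum_{s=0}^{T-1} \lVert a_s \rVert_2^2 \le b^2

% Unrolling the recursion separates the adversary-free part from the adversary's effect
x_t = \underbrace{A^t x_0 + \sum_{s=0}^{t-1} A^{t-1-s} B u_s}_{\text{adversary-free trajectory}}
    + \underbrace{\sum_{s=0}^{t-1} A^{t-1-s} C a_s}_{\text{adversary-induced deviation}}
```

Under these assumptions the first term depends only on the controller's choices and the second only on the adversary's, which is what allows the two to be analyzed separately.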

Carl Pearson, Allaire Welk, William Boettcher, Roger Mayer, Sean Streck, Joseph Simons-Rudolph, Christopher Mayhorn; “Differences in Trust between Human and Automated Decision Aids.” DOI:http://dx.doi.org/10.1145/2898375.2898385

Abstract: Humans can easily find themselves in high cost situations where they must choose between suggestions made by an automated decision aid and a conflicting human decision aid. Previous research indicates that humans often rely on automation or other humans, but not both simultaneously. Expanding on previous work conducted by Lyons and Stokes (2012), the current experiment measures how trust in automated or human decision aids differs along with perceived risk and workload. The simulated task required 126 participants to choose the safest route for a military convoy; they were presented with conflicting information from an automated tool and a human. Results demonstrated that as workload increased, trust in automation decreased. As the perceived risk increased, trust in the human decision aid increased. Individual differences in dispositional trust correlated with an increased trust in both decision aids. These findings can be used to inform training programs for operators who may receive information from human and automated sources. Examples of this context include: air traffic control, aviation, and signals intelligence.

Keywords: Trust; reliance; automation; decision-making; risk; workload; strain

Phuong Cao, Eric Badger, Zbigniew Kalbarczyk, Ravishankar Iyer; “A Framework for Generation, Replay and Analysis of Real-World Attack Variants.” DOI:http://dx.doi.org/10.1145/2898375.2898392

Abstract: This paper presents a framework for (1) generating variants of known attacks, (2) replaying attack variants in an isolated environment and, (3) validating detection capabilities of attack detection techniques against the variants. Our framework facilitates reproducible security experiments. We generated 648 variants of three real-world attacks (observed at the National Center for Supercomputing Applications at the University of Illinois). Our experiment showed the value of generating attack variants by quantifying the detection capabilities of three detection methods: a signature-based detection technique, an anomaly-based detection technique, and a probabilistic graphical model-based technique.

Keywords: (not provided)

Tutorial:

Hanan Hibshi; “Systematic Analysis of Qualitative Data in Security.” DOI:http://dx.doi.org/10.1145/2898375.2898387

Abstract: This tutorial will introduce participants to Grounded Theory, which is a qualitative framework to discover new theory from an empirical analysis of data. This form of analysis is particularly useful when analyzing text, audio or video artifacts that lack structure, but contain rich descriptions. We will frame Grounded Theory in the context of qualitative methods and case studies, which complement quantitative methods, such as controlled experiments and simulations. We will contrast the approaches developed by Glaser and Strauss, and introduce coding theory – the most prominent qualitative method for performing analysis to discover Grounded Theory. Topics include coding frames, first- and second-cycle coding, and saturation. We will use examples from security interview scripts to teach participants: developing a coding frame, coding a source document to discover relationships in the data, developing heuristics to resolve ambiguities between codes, and performing second-cycle coding to discover relationships within categories. Then, participants will learn how to discover theory from coded data. Participants will further learn about inter-rater reliability statistics, including Cohen's and Fleiss' Kappa, Krippendorff's Alpha, and Vanbelle's Index. Finally, we will review how to present Grounded Theory results in publications, including how to describe the methodology, report observations, and describe threats to validity.

Keywords: Grounded theory; qualitative; security analysis
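Of the inter-rater reliability statistics named in this abstract, Cohen's kappa is the simplest to show. The sketch below uses the standard formula, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The function name, the code labels, and the two raters' data are hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one code per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: from each rater's marginal code frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical code for every item
    return (observed - expected) / (1 - expected)

# Hypothetical first-cycle codes assigned to ten interview excerpts.
rater_1 = ["threat"] * 5 + ["control"] * 5
rater_2 = ["threat"] * 4 + ["control"] * 5 + ["threat"]
kappa = cohens_kappa(rater_1, rater_2)
```

Here the raters agree on 8 of 10 excerpts (p_o = 0.8) and chance agreement is 0.5, giving a kappa of about 0.6.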

Tutorial:

Tao Xie and William Enck; “Text Analytics for Security.” DOI:http://dx.doi.org/10.1145/2898375.2898397

Abstract: Computing systems that make security decisions often fail to take into account human expectations. This failure occurs because human expectations are typically drawn from textual sources (e.g., mobile application description and requirements documents) and are hard to extract and codify. Recently, researchers in security and software engineering have begun using text analytics to create initial models of human expectation. In this tutorial, we provide an introduction to popular techniques and tools of natural language processing (NLP) and text mining, and share our experiences in applying text analytics to security problems. We also highlight the current challenges of applying these techniques and tools for addressing security problems. We conclude the tutorial with discussion of future research directions.

Keywords: Security; human expectations; text analytics

Poster:

Marwan Abi-Antoun, Ebrahim Khalaj, Radu Vanciu, Ahmad Moghimi; “Abstract Runtime Structure for Reasoning about Security.” DOI:http://dx.doi.org/10.1145/2898375.2898377

Abstract: We propose an interactive approach where analysts reason about the security of a system using an abstraction of its runtime structure, as opposed to looking at the code. They interactively refine a hierarchical object graph, set security properties on abstract objects or edges, query the graph, and investigate the results by studying highlighted objects or edges or tracing to the code. Behind the scenes, an inference analysis and an extraction analysis maintain the soundness of the graph with respect to the code.

Keywords: object graphs; ownership type inference; graph query

Poster:

Clark Barrett, Cesare Tinelli, Morgan Deters, Tianyi Liang, Andrew Reynolds, Nestan Tsiskaridze; “Efficient Solving of String Constraints for Security Analysis.” DOI:http://dx.doi.org/10.1145/2898375.2898393

Abstract: The security of software is increasingly more critical for consumer confidence, protection of privacy, protection of intellectual property, and even national security. As threats to software security have become more sophisticated, so too have the techniques developed to ensure security. One basic technique that has become a fundamental tool in static security analysis is symbolic execution. There are now a number of successful approaches that rely on symbolic methods to reduce security questions about programs to constraint satisfaction problems in some formal logic (e.g., [4, 5, 7, 16]). Those problems are then solved automatically by specialized reasoners for the target logic. The found solutions are then used to construct automatically security exploits in the original programs or, more generally, identify security vulnerabilities.

Keywords: String solving, SMT, automated security analysis

Poster:

Susan G. Campbell, Lelyn D. Saner, Michael F. Bunting; “Characterizing Cybersecurity Jobs: Applying the Cyber Aptitude and Talent Assessment Framework.” DOI:http://dx.doi.org/10.1145/2898375.2898394

Abstract: Characterizing what makes cybersecurity professions difficult involves several components, including specifying the cognitive and functional requirements for performing job-related tasks. Many frameworks that have been proposed are focused on functional requirements of cyber work roles, including the knowledge, skills, and abilities associated with them. In contrast, we have proposed a framework for classifying cybersecurity jobs according to the cognitive demands of each job and for matching applicants to jobs based on their aptitudes for key cognitive skills (e.g., responding to network activity in real-time). In this phase of research, we are investigating several cybersecurity jobs (such as operators vs. analysts), converting the high-level functional tasks of each job into elementary tasks, in order to determine what cognitive requirements distinguish the jobs. We will then examine how the models of cognitive demands by job can be used to inform the designs of aptitude tests for different kinds of jobs. In this poster, we will describe our framework in more detail and how it can be applied toward matching people with the jobs that fit them best.

Keywords: Aptitude testing; job analysis; task analysis; cybersecurity workforce; selection

Poster:

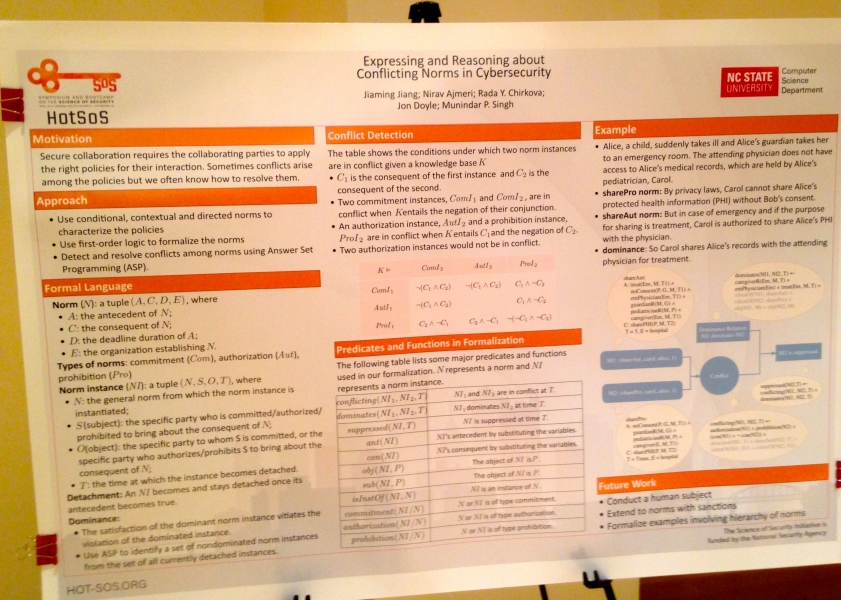

Jiaming Jiang, Nirav Ajmeri, Rada Y. Chirkova, Jon Doyle, Munindar P. Singh; “Expressing and Reasoning about Conflicting Norms in Cybersecurity.” DOI:http://dx.doi.org/10.1145/2898375.2898395

Abstract: Secure collaboration requires the collaborating parties to apply the right policies for their interaction. We adopt a notion of conditional, directed norms as a way to capture the standards of correctness for a collaboration. How can we handle conflicting norms? We describe an approach based on knowledge of what norm dominates what norm in what situation. Our approach adapts answer-set programming to compute stable sets of norms with respect to their computed conflicts and dominance. It assesses agent compliance with respect to those stable sets. We demonstrate our approach on a healthcare scenario.

Keywords: Normative System, Dominance Relation, Norm

Poster:

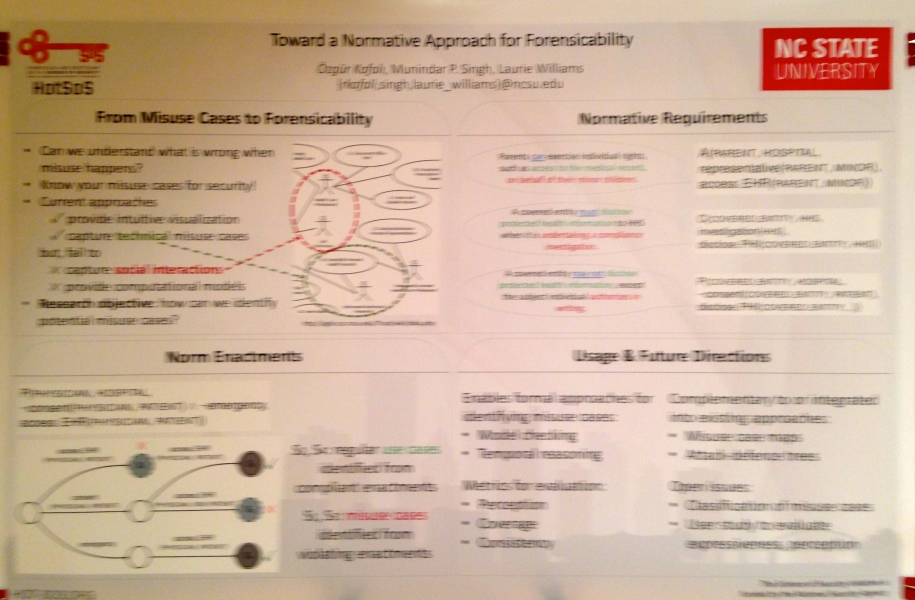

Özgür Kafalı, Munindar P. Singh, and Laurie Williams; “Toward a Normative Approach for Forensicability.” DOI:http://dx.doi.org/10.1145/2898375.2898386

Abstract: Sociotechnical systems (STSs), where users interact with software components, support automated logging, i.e., what a user has performed in the system. However, most systems do not implement automated processes for inspecting the logs when a misuse happens. Deciding what needs to be logged is crucial as excessive amounts of logs might be overwhelming for human analysts to inspect. The goal of this research is to aid software practitioners to implement automated forensic logging by providing a systematic method of using attackers' malicious intentions to decide what needs to be logged. We propose Lokma: a normative framework to construct logging rules for forensic knowledge. We describe the general forensic process of Lokma, and discuss related directions.

Keywords: Forensic logging, security, sociotechnical systems, requirements, social norms

Poster:

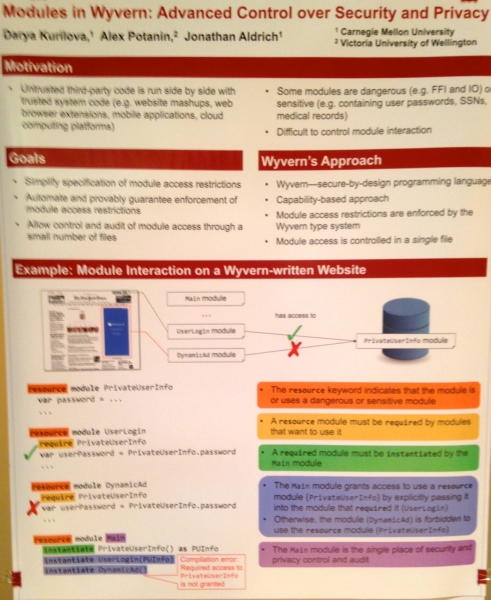

Darya Kurilova, Alex Potanin, Jonathan Aldrich; “Modules in Wyvern: Advanced Control over Security and Privacy” DOI:http://dx.doi.org/10.1145/2898375.2898376

Abstract: In today’s systems, restricting the authority of untrusted code is difficult because, by default, code has the same authority as the user running it. Object capabilities are a promising way to implement the principle of least authority, but being too low-level and fine-grained, take away many conveniences provided by module systems. We present a module system design that is capability-safe, yet preserves most of the convenience of conventional module systems. We demonstrate how to ensure key security and privacy properties of a program as a mode of use of our module system. Our authority safety result formally captures the role of mutable state in capability-based systems and uses a novel non-transitive notion of authority, which allows us to reason about authority restriction: the encapsulation of a stronger capability inside a weaker one.

Keywords: Language-based security; capabilities; modules; authority

Poster:

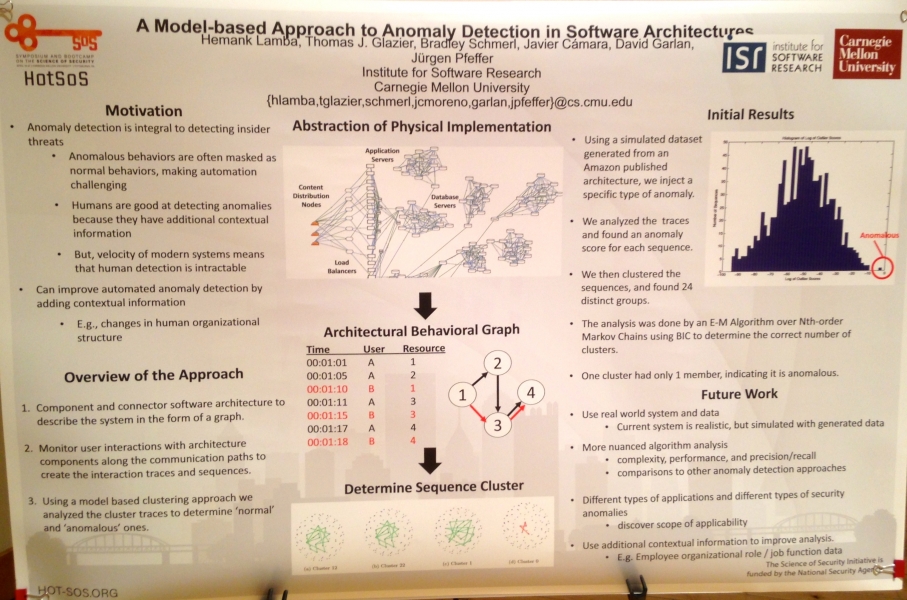

Hemank Lamba, Thomas J. Glazier, Bradley Schmerl, Javier Cámara, David Garlan, Jürgen Pfeffer; “A Model-based Approach to Anomaly Detection in Software Architectures.” DOI:http://dx.doi.org/10.1145/2898375.2898401

Abstract: In an organization, the interactions users have with software leave patterns or traces of the parts of the systems accessed. These interactions can be associated with the underlying software architecture. The first step in detecting problems like insider threat is to detect those traces that are anomalous. Here, we propose a method to find anomalous users leveraging these interaction traces, categorized by user roles. We propose a model-based approach to cluster user sequences and find outliers. We show that the approach works on a simulation of a large-scale system based on an Amazon Web application style.

Keywords: anomaly detection, model-based graph clustering

Jian Lou and Yevgeniy Vorobeychik; “Decentralization and Security in Dynamic Traffic Light Control.” DOI:http://dx.doi.org/10.1145/2898375.2898384

Abstract: Complex traffic networks include a number of controlled intersections, and, commonly, multiple districts or municipalities. The result is that the overall traffic control problem is extremely complex computationally. Moreover, given that different municipalities may have distinct, non-aligned, interests, traffic light controller design is inherently decentralized, a consideration that is almost entirely absent from related literature. Both complexity and decentralization have great bearing both on the quality of the traffic network overall, as well as on its security. We consider both of these issues in a dynamic traffic network. First, we propose an effective local search algorithm to efficiently design system-wide control logic for a collection of intersections. Second, we propose a game theoretic (Stackelberg game) model of traffic network security in which an attacker can deploy denial-of-service attacks on sensors, and develop a resilient control algorithm to mitigate such threats. Finally, we propose a game theoretic model of decentralization, and investigate this model both in the context of baseline traffic network design, as well as resilient design accounting for attacks. Our methods are implemented and evaluated using a simple traffic network scenario in SUMO.

Keywords: Traffic Control System; Game Theoretical Model; Decentralization and Security; Simulation-Based Method

Momin M. Malik, Jürgen Pfeffer, Gabriel Ferreira, Christian Kästner; “Visualizing the Variational Callgraph of the Linux Kernel: An Approach for Reasoning about Dependencies.” DOI:http://dx.doi.org/10.1145/2898375.2898398

Abstract: Software developers use #ifdef statements to support code configurability, allowing software product diversification. But because functions can be in many execution paths that depend on complex combinations of configuration options, the introduction of an #ifdef for a given purpose (such as adding a new feature to a program) can enable unintended function calls, which can be a source of vulnerabilities. Part of the difficulty lies in maintaining mental models of all dependencies. We propose analytic visualizations of the variational callgraph to capture dependencies across configurations and create visualizations to demonstrate how it would help developers visually reason through the implications of diversification, for example through visually doing change impact analysis.

Keywords: visualization, configuration complexity, Linux Kernel, callgraph, #ifdef, dependencies, vulnerabilities
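
To make the idea of a variational callgraph concrete, the following minimal sketch annotates each call edge with the configuration options that must be enabled for the call to exist, then lists the configurations under which a function becomes reachable. The function names, option names, and edges are invented for illustration and do not come from the Linux kernel or the poster. Brute-force enumeration is used only because the example is tiny.

```python
from itertools import product

# Each call edge carries the set of options that must all be enabled
# for the call to be compiled in (an empty set means "always present").
# All names here are hypothetical.
EDGES = [
    ("main", "init", frozenset()),
    ("init", "net_setup", frozenset({"CONFIG_NET"})),
    ("net_setup", "debug_dump", frozenset({"CONFIG_DEBUG"})),
    ("main", "debug_dump", frozenset({"CONFIG_DEBUG", "CONFIG_VERBOSE"})),
]
OPTIONS = ["CONFIG_NET", "CONFIG_DEBUG", "CONFIG_VERBOSE"]

def reachable(root, enabled):
    """Functions reachable from root when only `enabled` options are on."""
    seen, stack = {root}, [root]
    while stack:
        caller = stack.pop()
        for src, dst, cond in EDGES:
            if src == caller and cond <= enabled and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen

def configs_reaching(root, target):
    """Enumerate every option combination under which target is reachable."""
    hits = []
    for bits in product([False, True], repeat=len(OPTIONS)):
        enabled = frozenset(o for o, on in zip(OPTIONS, bits) if on)
        if target in reachable(root, enabled):
            hits.append(enabled)
    return hits

hits = configs_reaching("main", "debug_dump")
```

Here `debug_dump` is reachable through two different guarded paths, which is the kind of cross-configuration dependency that is hard to track mentally.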

Akond Ashfaque Ur Rahman and Laurie Williams; “Security Practices in DevOps.” DOI:http://dx.doi.org/10.1145/2898375.2898383

Abstract: We summarize the contributions of this study as follows:

• A list of security practices and an analysis of how they are used in organizations that have adopted DevOps to integrate security; and

• An analysis that quantifies the levels of collaboration amongst the development teams, operations teams, and security teams within organizations that are using DevOps.

Keywords: (not provided)

Bradley Schmerl, Jeffrey Gennari, Javier Cámara, David Garlan; “Raindroid – A System for Run-time Mitigation of Android Intent Vulnerabilities.” DOI:http://dx.doi.org/10.1145/2898375.2898389

Abstract: Modern frameworks are required to be extendable as well as secure. However, these two qualities are often at odds. In this poster we describe an approach that uses a combination of static analysis and run-time management, based on software architecture models, that can improve security while maintaining framework extendability. We implement a prototype of the approach for the Android platform. Static analysis identifies the architecture and communication patterns among the collection of apps on an Android device and which communications might be vulnerable to attack. Run-time mechanisms monitor these potentially vulnerable communication patterns, and adapt the system to either deny them, request explicit approval from the user, or allow them.

Keywords: software architecture, security, self-adaptation

Daniel Smullen and Travis D. Breaux; “Modeling, Analyzing, and Consistency Checking Privacy Requirements using Eddy.” DOI:http://dx.doi.org/10.1145/2898375.2898381

Abstract: Eddy is a privacy requirements specification language that privacy analysts can use to express requirements over data practices; to collect, use, transfer and retain personal and technical information. The language uses a simple SQL-like syntax to express whether an action is permitted or prohibited, and to restrict those statements to particular data subjects and purposes. Eddy also supports the ability to express modifications on data, including perturbation, data append, and redaction. The Eddy specifications are compiled into Description Logic to automatically detect conflicting requirements and to trace data flows within and across specifications. Conflicts are highlighted, showing which rules are in conflict (expressing prohibitions and rights to perform the same action on equivalent interpretations of the same data, data subjects, or purposes), and what definitions caused the rules to conflict. Each specification can describe an organization's data practices, or the data practices of specific components in a software architecture.

Keywords: privacy; requirements engineering; data flow analysis; model checking

Christopher Theisen and Laurie Williams; “Risk-Based Attack Surface Approximation.” DOI:http://dx.doi.org/10.1145/2898375.2898388

Abstract: Proactive security review and test efforts are a necessary component of the software development lifecycle. Since resource limitations often preclude reviewing, testing and fortifying the entire code base, prioritizing what code to review/test can improve a team’s ability to find and remove more vulnerabilities that are reachable by an attacker. One way that professionals perform this prioritization is the identification of the attack surface of software systems. However, identifying the attack surface of a software system is non-trivial. The goal of this poster is to present the concept of a risk-based attack surface approximation based on crash dump stack traces for the prioritization of security code rework efforts. For this poster, we will present results from previous efforts in the attack surface approximation space, including studies on its effectiveness in approximating security relevant code for Windows and Firefox. We will also discuss future research directions for attack surface approximation, including discovery of additional metrics from stack traces and determining how many stack traces are required for a good approximation.

Keywords: Stack traces; attack surface; crash dumps; security; metrics
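
The core idea of approximating an attack surface from crash dump stack traces can be sketched as follows. This is a simplified illustration, not the authors' tooling: the `file!function` frame format, the file names, and the ranking by trace frequency are assumptions made for the example.

```python
from collections import Counter

def approximate_attack_surface(stack_traces):
    """Count, per source file, how many crash stack traces mention it."""
    counts = Counter()
    for trace in stack_traces:
        # Use a set so a file is counted once per trace, however many
        # frames of that trace it appears in.
        files = {frame.split("!")[0] for frame in trace}
        counts.update(files)
    return counts

# Hypothetical traces; each frame is "file!function".
traces = [
    ["parser.c!parse_header", "net.c!recv_packet", "main.c!run"],
    ["parser.c!parse_body", "parser.c!parse_header", "main.c!run"],
    ["render.c!draw", "main.c!run"],
]
all_files = {"parser.c", "net.c", "main.c", "render.c", "config.c", "log.c"}

counts = approximate_attack_surface(traces)
on_surface = set(counts)                 # files seen on any crash trace
off_surface = all_files - on_surface     # candidates for lower review priority
ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
```

Files that never appear on a trace fall outside the approximation, and the ranked list gives one possible ordering for review effort.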

Olga Zielinska, Allaire Welk, Christopher B. Mayhorn, and Emerson Murphy-Hill; “The Persuasive Phish: Examining the Social Psychological Principles Hidden in Phishing Emails.” DOI:http://dx.doi.org/10.1145/2898375.2898382

Abstract: Phishing is a social engineering tactic used to trick people into revealing personal information [Zielinska, Tembe, Hong, Ge, Murphy-Hill, & Mayhorn 2014]. As phishing emails continue to infiltrate users’ mailboxes, what social engineering techniques are the phishers using to successfully persuade victims into releasing sensitive information?

Cialdini’s [2007] six principles of persuasion (authority, social proof, liking/similarity, commitment/consistency, scarcity, and reciprocation) have been linked to elements of phishing emails [Akbar 2014; Ferreira & Lenzini 2015]; however, the findings have been conflicting. Authority and scarcity were found as the most common persuasion principles in 207 emails obtained from a Netherlands database [Akbar 2014], while liking/similarity was the most common principle in 52 personal emails available in Luxembourg and England [Ferreira et al. 2015]. The purpose of this study was to examine the persuasion principles present in emails available in the United States over a period of five years.

Two reviewers assessed eight hundred eighty-seven phishing emails from Arizona State University, Brown University, and Cornell University for Cialdini’s six principles of persuasion. Each email was evaluated using a questionnaire adapted from the Ferreira et al. [2015] study. There was an average agreement of 87% per item between the two raters.

Spearman’s Rho correlations were used to compare email characteristics over time. During the five year period under consideration (2010–2015), the persuasion principles of commitment/consistency and scarcity have increased over time, while the principles of reciprocation and social proof have decreased over time. Authority and liking/similarity revealed mixed results with certain characteristics increasing and others decreasing.

The commitment/consistency principle could be seen in the increase of emails referring to elements outside the email to look more reliable, such as Google Docs or Adobe Reader (rs(850) = .12, p = .001), while the scarcity principle could be seen in urgent elements that could encourage users to act quickly and may have had success in eliciting a response from users (rs(850) = .09, p = .01). Reciprocation elements, such as a requested reply, decreased over time (rs(850) = -.12, p = .001). Additionally, the social proof principle present in emails by referring to actions performed by other users also decreased (rs(850) = -.10, p = .01).

Two persuasion principles exhibited both an increase and decrease in their presence in emails over time: authority and liking/similarity. These principles could increase phishing rate success if used appropriately, but could also raise suspicions in users and decrease compliance if used incorrectly. Specifically, the source of the email, which corresponds to the authority principle, displayed an increase over time in educational institutes (rs(850) = .21, p < .001), but a decrease in financial institutions (rs(850) = -.18, p < .001). Similarly, the liking/similarity principle revealed an increase over time of logos present in emails (rs(850) = .18, p < .001) and decrease in service details, such as payment information (rs(850) = -.16, p < .001).

The results from this study offer a different perspective regarding phishing. Previous research has focused on the user aspect; however, few studies have examined the phisher perspective and the social psychological techniques they are implementing. Additionally, they have yet to look at the success of the social psychology techniques. Results from this study can be used to help to predict future trends and inform training programs, as well as machine learning programs used to identify phishing messages.

Keywords: Phishing, Persuasion, Social Engineering, Email, Security
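
For readers unfamiliar with the statistic reported above, Spearman's rho is the Pearson correlation computed on ranks, with tied values sharing their average rank. The sketch below implements it in plain Python. The `years` and `has_logo` values are toy data invented for illustration and are not drawn from the study.

```python
from math import sqrt

def average_ranks(values):
    """Assign 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Pearson correlation of the rank-transformed inputs."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

# Toy data: year each email was received, and whether it showed a logo.
years = [2010, 2010, 2011, 2012, 2013, 2014, 2015, 2015]
has_logo = [0, 0, 0, 1, 0, 1, 1, 1]
rho = spearman_rho(years, has_logo)
```

A positive `rho` here would correspond to a characteristic becoming more common over time, and a negative one to it becoming rarer.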

Wenyu Ren, Klara Nahrstedt, and Tim Yardley; “Operation-Level Traffic Analyzer Framework for Smart Grid.” DOI:http://dx.doi.org/10.1145/2898375.2898396

Abstract: The Smart Grid control systems need to be protected from internal attacks within the perimeter. In Smart Grid, the Intelligent Electronic Devices (IEDs) are resource-constrained devices that do not have the ability to provide security analysis and protection by themselves. And the commonly used industrial control system protocols offer little security guarantee. To guarantee security inside the system, analysis and inspection of both internal network traffic and device status need to be placed close to IEDs to provide timely information to power grid operators. For that, we have designed a unique, extensible and efficient operation-level traffic analyzer framework. The timing evaluation of the analyzer overhead confirms efficiency under Smart Grid operational traffic.

Keywords: Smart Grid, network security, traffic analysis

(ID#: 16-9552)

In the News

This section features topical, current news items of interest to the international security community. These articles and highlights are selected from various popular science and security magazines, newspapers, and online sources.

US News

“Top US Undergraduate Computer Science Programs Skip Cybersecurity Classes,” Dark Reading, 7 April 2016. [Online].

A new study conducted on the top 121 computer science programs revealed surprising details about the education gap in cybersecurity. Out of the top ten universities, only one requires any cybersecurity course be completed and three do not even offer a course on cybersecurity. Furthermore, the University of Alabama, which does not have a top program, was the only school that required 3 or more cybersecurity courses for the degree.

See: http://www.darkreading.com/vulnerabilities---threats/top-us-undergraduate-computer-science-programs-skip-cybersecurity-classes/d/d-id/1325024

“Do US universities deserve an “F” in teaching cybersecurity?,” Naked Security, 13 April 2016. [Online].

With a rapidly growing need for cybersecurity professionals there must also be proper education to fill those jobs. Research firm CloudPassage conducted a survey to see what cybersecurity education in the United States looked like. They graded the top universities on a scale from A to F.

See: https://nakedsecurity.sophos.com/2016/04/13/do-us-universities-deserve-an-f-in-teaching-cybersecurity/

“How These Mormon Women Became Some of the Best Cybersecurity Hackers in the U.S.,” Fortune, 27 April 2016. [Online].

This year's National Collegiate Cyber Defense Competition featured only seven women out of ten teams with eight members each. BYU featured four of those seven competitors. Jack Harrington, vice president of cybersecurity at Raytheon, commented, “We’ve got to tap into this talent pool that’s 50% of the population.” BYU finished second in the competition.

See: http://fortune.com/2016/04/27/mormon-women-cybersecurity/

“NSA lauds The Citadel for cybersecurity training,” The Post and Courier, 28 April 2016. [Online].

The NSA designated The Citadel as a National Center of Excellence in Cyber Defense. The school’s provost said that the distinction will help set graduates apart from the rest of the field. The honor comes after the university introduced a minor in cybersecurity in 2013 and a graduate certificate in the same field in 2014.

See: http://www.postandcourier.com/20160428/160429361/nsa-lauds-the-citadel-for-cybersecurity-training

“The Aviation Industry is Starting to Grapple with Cybersecurity,” Slate, 3 May 2016. [Online].

Security of wireless networks on airplanes has been under intense criticism following several events. In 2015, a security researcher was detained by the FBI after commenting about how easily hackable the networks onboard his flight were. Since then, the Cybersecurity Standards for Aircraft to Improve Resilience Act of 2016 has been introduced, which aims to create standards for security on in-flight networks.

See: http://www.slate.com/articles/technology/future_tense/2016/05/the_aviation_industry_is_starting_to_grapple_with_cybersecurity.html

International News

“Whaling Emerges as Major Cybersecurity Threat,” CIO, 21 April 2016. [Online].

Whaling, a clever play on the term “phishing” that targets company executives and their relationships with employees, has recently stepped forward as one of the more prominent cyber threats to companies. The FBI estimates that companies have lost a collective $2.3 billion over the past three years.

See: http://www.cio.com/article/3059621/security/whaling-emerges-as-major-cybersecurity-threat.html

“Will Emotions be Hackable? Exploring How Cybersecurity Could Evolve,” CSMonitor, 2 May 2016. [Online].

The University of California Berkeley’s Center for Long-Term Cybersecurity (CLTC) published a report detailing what security may look like in the year 2020. Among their ideas was the vulnerability of the massive repositories of personal data and the emotional histories that come with them.

See: http://www.csmonitor.com/World/Passcode/2016/0502/Will-emotions-be-hackable-Exploring-how-cybersecurity-could-evolve-video

“Instagram Hacked by 10-Year-Old, Who Wins $10,000 Prize for Finding Ways to Delete Comments,” Independent, 4 May 2016. [Online].

A ten-year-old from Finland was able to uncover a bug in Instagram that allowed the exploiter to delete comments posted by any user. In exchange for reporting the bug, the young hacker received a $10,000 prize from Facebook, which owns Instagram, through its Bug Bounty Program.

See: http://www.independent.co.uk/life-style/gadgets-and-tech/news/instagram-hacked-by-10-year-old-who-gets-10000-prize-for-finding-way-to-delete-users-comments-a7012496.html

“Big Data Breaches Found at Major Email Services — Expert,” Reuters, 5 May 2016. [Online].

The discovery of the breach came when Hold Security researchers stumbled upon a Russian hacker gloating that he had stolen over one billion email accounts. Most accounts came from Russian provider mail.ru with smaller portions of the stolen emails belonging to users of Gmail, Microsoft, and Yahoo.

See: http://www.reuters.com/article/us-cyber-passwords-idUSKCN0XV1I6

(ID#: 16-9549)

Publications of Interest

The Publications of Interest section contains bibliographical citations, abstracts if available, and links on specific topics and research problems of interest to the Science of Security community.

How recent are these publications?

These bibliographies include recent scholarly research on topics which have been presented or published within the past year. Some represent updates from work presented in previous years, others are new topics.

How are topics selected?

The specific topics are selected from materials that have been peer reviewed and presented at SoS conferences or referenced in current work. The topics are also chosen for their usefulness for current researchers.

How can I submit or suggest a publication?

Researchers willing to share their work are welcome to submit a citation, abstract, and URL for consideration and posting, and to identify additional topics of interest to the community. Researchers are also encouraged to share this request with their colleagues and collaborators.

Submissions and suggestions may be sent to: news@scienceofsecurity.net

(ID#:15-9551)

Acoustic Fingerprints 2015

Acoustic fingerprints can be used to identify an audio sample or quickly locate similar items in an audio database. As a security tool, fingerprints offer a modality of biometric identification of a user. Current research is exploring various aspects and applications, including the use of these fingerprints for mobile device security, antiforensics, use of image processing techniques, and client side embedding. The research work cited here was presented in 2015.

Tsai, T.J.; Friedland, G.; Anguera, X., "An Information-Theoretic Metric of Fingerprint Effectiveness," in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 340-344, 19-24 April 2015. doi: 10.1109/ICASSP.2015.7177987

Abstract: Audio fingerprinting refers to the process of extracting a robust, compact representation of audio which can be used to uniquely identify an audio segment. Works in the audio fingerprinting literature generally report results using system-level metrics. Because these systems are usually very complex, the overall system-level performance depends on many different factors. So, while these metrics are useful in understanding how well the entire system performs, they are not very useful in knowing how good or bad the fingerprint design is. In this work, we propose a metric of fingerprint effectiveness that decouples the effect of other system components such as the search mechanism or the nature of the database. The metric is simple, easy to compute, and has a clear interpretation from an information theory perspective. We demonstrate that the metric correlates directly with system-level metrics in assessing fingerprint effectiveness, and we show how it can be used in practice to diagnose the weaknesses in a fingerprint design.

Keywords: audio coding; audio signal processing; copy protection; signal representation; audio fingerprinting literature; audio representation extraction; audio segment; fingerprint effectiveness; information theoretic metric; search mechanism; system level metrics; system level performance; Accuracy; Databases; Entropy; Information rates; Noise measurement; Signal to noise ratio; audio fingerprint; copy detection (ID#: 15-8805)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7177987&isnumber=7177909

Szlosarczyk, Sebastian; Schulte, Andrea, "Voice Encrypted Recognition Authentication - VERA," in Next Generation Mobile Applications, Services and Technologies, 2015 9th International Conference on, pp. 270-274, 9-11 Sept. 2015. doi: 10.1109/NGMAST.2015.74

Abstract: We propose VERA - an authentication scheme where sensitive data on mobile phones can be secured or whereby services can be locked by the user's voice. Our algorithm makes use of acoustic fingerprints to identify the personalized voice. The security of the algorithm depends on the discrete logarithm problem in Z_N where N is a safe prime. Further we evaluate two practical examples on Android devices where our scheme is used: first, the encryption of any data(set); second, locking a mobile phone. Voice is the basis for both of the fields.

Keywords: Acoustics; Authentication; Encryption; Mobile handsets; Protocols; Android; acoustic fingerprint; authentication; biometrics; cryptography; encryption; voice (ID#: 15-8806)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7373254&isnumber=7373199

Casagranda, P.; Sapino, M.L.; Candan, K.S., "Audio Assisted Group Detection Using Smartphones," in Multimedia & Expo Workshops (ICMEW), 2015 IEEE International Conference on, pp. 1-6, June 29 2015-July 3 2015. doi: 10.1109/ICMEW.2015.7169764

Abstract: In this paper we introduce a novel technique to discover groups of users sharing the same environment: a room, an office, a car. Using a smartphone device, we propose a method based on the joint usage of GPS and acoustic fingerprints, which greatly improves the precision of GPS-only group detection. To reach the objective, we use a novel variation of an existing audio fingerprinting algorithm with good noise tolerance, assessing it under several conditions. The method is shown to be especially effective for groups of listeners of audio and audio visual content. We finally propose an application of the method to deliver content recommendations for a specific use case, hybrid content radio, an adaptive radio service discussed in the European Broadcasting Union, allowing the enrichment of traditional broadcast linear radio with personalized and context-aware audio content.

Keywords: Global Positioning System; audio signal processing; mobile computing; mobility management (mobile radio); object detection; radio broadcasting; smart phones; European Broadcasting Union; GPS; acoustic fingerprints; audio assisted group detection; audio fingerprinting algorithm; audio visual content; broadcast linear radio; content recommendations; context-aware audio content; hybrid content radio; noise tolerance; smartphone device; Global Positioning System; Audience discovery; audio fingerprinting; contextual recommendation; group detection; group recommendation; hybrid content radio (ID#: 15-8807)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7169764&isnumber=7169738

Sankupellay, M.; Towsey, M.; Truskinger, A.; Roe, P., "Visual Fingerprints of the Acoustic Environment: The Use of Acoustic Indices to Characterise Natural Habitats," in Big Data Visual Analytics (BDVA), 2015, pp. 1-8, 22-25 Sept. 2015. doi: 10.1109/BDVA.2015.7314306

Abstract: Acoustic recordings play an increasingly important role in monitoring terrestrial environments. However, due to rapid advances in technology, ecologists are accumulating more audio than they can listen to. Our approach to this big-data challenge is to visualize the content of long-duration audio-recordings by calculating acoustic indices. These are statistics which describe the temporal-spectral distribution of acoustic energy and reflect content of ecological interest. We combine spectral indices to produce false-color spectrogram images. These not only reveal acoustic content but also facilitate navigation. An additional analytic challenge is to find appropriate descriptors to summarize the content of 24-hour recordings, so that it becomes possible to monitor long-term changes in the acoustic environment at a single location and to compare the acoustic environments of different locations. We describe a 24-hour 'acoustic-fingerprint' which shows some preliminary promise.

Keywords: Big Data; acoustic signal processing; data visualisation; national security; Big-data; acoustic data visualisation; acoustic energy; acoustic environment; acoustic recording; false-color spectrogram image; temporal-spectral distribution; visual fingerprint; Acoustics; Digital audio players; Entropy; Indexes; Meteorology; Monitoring; Spectrogram (ID#: 15-8808)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7314306&isnumber=7314277

Lu, Yao; Chang, Ye; Tang, Ning; Qu, Hemi; Pang, Wei; Zhang, Daihua; Zhang, Hao; Duan, Xuexin, "Concentration-Independent Fingerprint Library of Volatile Organic Compounds Based on Gas-Surface Interactions by Self-Assembled Monolayer Functionalized Film Bulk Acoustic Resonator Arrays," in SENSORS, 2015 IEEE, pp. 1-4, 1-4 Nov. 2015. doi: 10.1109/ICSENS.2015.7370506

Abstract: This paper reported a novel e-nose type gas sensor based on film bulk acoustic resonator (FBAR) array in which each sensor is functionalized individually by different organic monolayers. Such hybrid sensors have been successfully demonstrated for VOCs selective detections. Two concentration-independent fingerprints (adsorption energy constant and desorption rate) were obtained from the adsorption isotherms (Ka, K1, K2) and kinetic analysis (koff) with four different amphiphilic self-assembled monolayers (SAMs) coated on high frequency FBAR transducers (4.44 GHz). The multi-parameter fingerprints regardless of concentration effects compose a recognition library and improve the selectivity of VOCs.

Keywords: Adsorption; Film bulk acoustic resonators; Fingerprint recognition; Kinetic theory; Out of order; Silicon; Transducers; e-nose; Adsorption analysis; Concentration-independent; FBAR; SAMs; VOCs (ID#: 15-8809)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7370506&isnumber=7370096

Ondel, L.; Anguera, X.; Luque, J., "MASK+: Data-Driven Regions Selection For Acoustic Fingerprinting," in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 335-339, 19-24 April 2015. doi: 10.1109/ICASSP.2015.7177986

Abstract: Acoustic fingerprinting is the process to deterministically obtain a compact representation of an audio segment, used to compare multiple audio files or to efficiently search for a file within a big database. Recently, we proposed a novel fingerprint named MASK (Masked Audio Spectral Keypoints) that encodes the relationship between pairs of spectral regions around a single spectral energy peak into a binary representation. In the original proposal the configuration of location and size of the region pairs was determined manually to optimally encode how energy flows around the spectral peak. Such manual selection has always been considered as a weakness in the process as it might not be adapted to the actual data being represented. In this paper we address this problem by proposing an unsupervised, data-driven method based on mutual information theory to automatically define an optimal MASK fingerprint structure. Audio retrieval experiments optimizing for data distorted with additive Gaussian white noise show that the proposed method is much more robust than the original MASK and a well-known acoustic fingerprint.

Keywords: AWGN; audio coding; audio databases; information retrieval; optimisation; signal representation; MASK+; Masked Audio Spectral Keypoints; acoustic fingerprinting; additive Gaussian white noise; audio files; audio retrieval experiments; audio segment; binary representation; compact representation; data-driven region selection; mutual information theory; optimal MASK fingerprint structure; spectral energy; spectral regions; Acoustics; Distortion; Mutual information; Noise measurement; Robustness; Signal to noise ratio; Audio fingerprinting; content recognition (ID#: 15-8810)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7177986&isnumber=7177909

Fung, S.; Yipeng Lu; Hao-Yen Tang; Tsai, J.M.; Daneman, M.; Boser, B.E.; Horsley, D.A., "Theory and Experimental Analysis of Scratch Resistant Coating for Ultrasonic Fingerprint Sensors," in Ultrasonics Symposium (IUS), 2015 IEEE International, pp. 1-4, 21-24 Oct. 2015. doi: 10.1109/ULTSYM.2015.0150

Abstract: Ultrasonic imaging for fingerprint applications offers better tolerance of external conditions and high spatial resolution compared to typical optical and solid-state sensors, respectively. Similar to existing fingerprint sensors, the performance of ultrasonic imagers is sensitive to physical damage. Therefore, it is important to understand the theory behind transmission and reflection effects of protective coatings for ultrasonic fingerprint sensors. In this work, we present the analytical theory behind the effects of transmitting ultrasound through a thin film of scratch resistant material. Experimental results indicate transmission through 1 μm of Al2O3 is indistinguishable from the non-coated cover substrate. Furthermore, pulse echo measurements of 5 μm thick Al2O3 show ultrasound pressure reflection increases in accordance with both theory and finite element simulation. Consequently, the feasibility of ultrasonic transmission through a protective layer with greatly mismatched acoustic impedance is demonstrated when the layer is sufficiently thin. This provides a guide for designing sensor protection when using materials of vastly different acoustic impedance values.

Keywords: acoustic impedance; fingerprint identification; finite element analysis; ultrasonic transducers; ultrasonic transmission; acoustic impedance; finite element simulation; pulse echo measurements; scratch resistant coating; ultrasonic fingerprint sensors; ultrasonic transmission; ultrasound pressure reflection; Acoustic measurements; Acoustics; Aluminum oxide; Coatings; Sensors; Substrates; Ultrasonic imaging; piezoelectric micromachined ultrasound transducers; ultrasonic transducers; ultrasonic transmission (ID#: 15-8811)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7329365&isnumber=7329057
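
Note on the Fung et al. entry above: the abstract's point that a sufficiently thin layer transmits well despite a large impedance mismatch can be illustrated with the standard textbook formula for normal-incidence transmission through a single lossless layer. The sketch below is not taken from the paper. The function name is hypothetical, and the impedance values are unitless placeholders chosen for illustration, not measured properties of Al2O3 or any substrate.

```python
import math

def layer_intensity_transmission(z1, z2, z3, thickness, wavelength_in_layer):
    """Intensity transmission from medium 1 to medium 3 through a layer (medium 2).

    Textbook result for plane waves at normal incidence in lossless media.
    z1, z2, z3 are characteristic acoustic impedances in any consistent unit.
    thickness and wavelength_in_layer share the same length unit.
    """
    k_d = 2.0 * math.pi * thickness / wavelength_in_layer  # phase across the layer
    cos2 = math.cos(k_d) ** 2
    sin2 = math.sin(k_d) ** 2
    denominator = (z1 + z3) ** 2 * cos2 + (z2 + z1 * z3 / z2) ** 2 * sin2
    return 4.0 * z1 * z3 / denominator

# Placeholder values (assumptions): matched outer media, layer impedance 20x higher.
Z_OUTER, Z_LAYER = 1.0, 20.0
thin = layer_intensity_transmission(Z_OUTER, Z_LAYER, Z_OUTER, 0.001, 1.0)
thick = layer_intensity_transmission(Z_OUTER, Z_LAYER, Z_OUTER, 0.05, 1.0)
```

With these placeholder numbers, a layer one thousandth of a wavelength thick passes nearly all of the incident intensity, while a layer one twentieth of a wavelength thick passes only a small fraction, which matches the qualitative trend the abstract reports.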

Hoople, J.; Kuo, J.; Abdel-moneum, M.; Lal, A., "Chipscale GHZ Ultrasonic Channels for Fingerprint Scanning," in Ultrasonics Symposium (IUS), 2015 IEEE International, pp. 1-4, 21-24 Oct. 2015. doi: 10.1109/ULTSYM.2015.0027

Abstract: In this paper we present 1-3 GHz frequency ultrasonic interrogation of surface ultrasonic impedances. The chipscale and CMOS integration of GHz transducers can enable surface identification imaging for many applications. We use aluminum nitride piezoelectric thin films driven at maximum amplitudes of 4 Vpp to launch and measure pulse packets. In this paper we first use the contrast in ultrasonic impedance between air and skin to create an image of a fingerprint. As a second application we directly measure the reflection coefficient for different liquids to demonstrate the ability to measure the ultrasonic impedance and distinguish between three different liquids. Using a rubber phantom, the image of a portion of a fingerprint is captured by measuring changes in signal levels at the resonance frequency of the piezoelectric transducers, 2.7 GHz. Reflected amplitude waves from air and skin differ by factors of 1.8-2. The measurements for three different liquids (water, isopropyl alcohol, and acetone) show that the three liquids have sufficiently different acoustic impedances to be able to identify them.

Keywords: CMOS image sensors; aluminium compounds; fingerprint identification; phantoms; piezoelectric thin films; piezoelectric transducers; surface impedance; ultrasonic transducers; AlN; CMOS integration; aluminum nitride piezoelectric thin films; chipscale GHz ultrasonic channels; fingerprint scanning; frequency 1 GHz to 3 GHz; piezoelectric transducers; rubber phantom; surface ultrasonic impedances; Acoustics; Aluminum nitride; CMOS integrated circuits; Fingerprint recognition; Impedance; Reflection coefficient; Transducers; AlN; Fingerprint; MEMS (ID#: 15-8812)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7329436&isnumber=7329057

Hon, Tsz-Kin; Wang, Lin; Reiss, Joshua D.; Cavallaro, Andrea, "Fine Landmark-Based Synchronization of Ad-Hoc Microphone Arrays," in Signal Processing Conference (EUSIPCO), 2015 23rd European, pp. 1331-1335, Aug. 31 2015-Sept. 4 2015. doi: 10.1109/EUSIPCO.2015.7362600

Abstract: We use audio fingerprinting to solve the synchronization problem between multiple recordings from an ad-hoc array consisting of randomly placed wireless microphones or handheld smartphones. Synchronization is crucial when employing conventional microphone array techniques such as beam-forming and source localization. We propose a fine audio landmark fingerprinting method that detects the time differences of arrival (TDOAs) of multiple sources in the acoustic environment. By estimating the maximum and minimum TDOAs, the proposed method can accurately calculate the unknown time offset between a pair of microphone recordings. Experimental results demonstrate that the proposed method significantly improves the synchronization accuracy of conventional audio fingerprinting methods and achieves comparable performance to the generalized cross-correlation method.

Keywords: Array signal processing; Feature extraction; Microphone arrays; Signal processing algorithms; Synchronization; Time-frequency analysis; Synchronization; audio fingerprinting; microphone array (ID#: 15-8813)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7362600&isnumber=7362087
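
Note on the Hon et al. entry above: the abstract compares its landmark method against generalized cross-correlation. As a rough illustration of that baseline only, the sketch below estimates the sample offset between two recordings with plain time-domain cross-correlation (the unweighted case, without the frequency-domain weighting that generalized variants add). It is not the paper's landmark method. The function name and the synthetic test signal are assumptions made for this example.

```python
import random

def estimate_offset(ref, other, max_lag):
    """Return the lag (in samples) at which `other` best aligns with `ref`.

    A positive result means `other` is a delayed copy of `ref`,
    i.e. other[n] is approximately ref[n - lag].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, x in enumerate(ref):
            m = n + lag
            if 0 <= m < len(other):
                score += x * other[m]  # correlation at this candidate lag
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Synthetic check (assumption): white noise delayed by 7 samples.
rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(400)]
delayed = [0.0] * 7 + reference[:-7]
offset = estimate_offset(reference, delayed, max_lag=20)
```

The brute-force loop costs on the order of signal length times the number of lags, so practical systems usually compute the correlation with an FFT instead; the sketch favors readability over speed.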

Kohout, J.; Pevny, T., "Unsupervised Detection of Malware in Persistent Web Traffic," in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 1757-1761, 19-24 April 2015. doi: 10.1109/ICASSP.2015.7178272

Abstract: Persistent network communication can be found in many instances of malware. In this paper, we analyse the possibility of leveraging low variability of persistent malware communication for its detection. We propose a new method for capturing statistical fingerprints of connections and employ outlier detection to identify the malicious ones. Emphasis is put on using the minimal information possible to make our method very lightweight and easy to deploy. Anomaly detection is commonly used in network security, yet to the best of our knowledge, there are not many works focusing on the persistent communication itself, without making further assumptions about its purpose.

Keywords: Internet; computer network security; invasive software; telecommunication traffic; anomaly detection; network security; outlier detection; persistent malware communication; persistent network communication; persistent web traffic; statistical fingerprints; unsupervised detection; Companies; Detection algorithms; Detectors; Histograms; Joints; Malware; Servers; malware; outlier detection; persistent communication (ID#: 15-8814)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7178272&isnumber=7177909

Tang, H.; Lu, Y.; Fung, S.; Tsai, J.M.; Daneman, M.; Horsley, D.A.; Boser, B.E., "Pulse-Echo Ultrasonic Fingerprint Sensor on a Chip," in Solid-State Sensors, Actuators and Microsystems (TRANSDUCERS), 2015 Transducers - 2015 18th International Conference on, pp. 674-677, 21-25 June 2015. doi: 10.1109/TRANSDUCERS.2015.7181013

Abstract: A fully-integrated ultrasonic fingerprint sensor based on pulse-echo imaging is presented. The device consists of a 24×8 Piezoelectric Micromachined Ultrasonic Transducer (PMUT) array bonded at the wafer level to custom readout electronics fabricated in a 180-nm CMOS process. The proposed top-driving bottom-sensing technique minimizes signal attenuation due to the large parasitics associated with high-voltage transistors. With 12V driving signal strength, the sensor takes 24μs to image a 2.3mm by 0.7mm section of a fingerprint.

Keywords: CMOS image sensors; integrated circuit bonding; micromachining; microsensors; piezoelectric transducers; pulse measurement; readout electronics; sensor arrays; ultrasonic transducer arrays; ultrasonic variables measurement; CMOS process; PMUT array; high-voltage transistor; piezoelectric micromachined ultrasonic transducer array; pulse-echo imaging; pulse-echo ultrasonic fingerprint sensor on a chip; readout electronics; signal attenuation; size 180 nm; time 24 μs; top-driving bottom-sensing technique; voltage 12 V; wafer level bonding; Acoustics; Aluminum nitride; Arrays; Electrodes; Fingerprint recognition; Micromechanical devices; Transducers; Fingerprint sensor; MEMS-CMOS integration; PMUT; Ultrasound transducer (ID#: 15-8815)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7181013&isnumber=7180834

Qi Yan; Rui Yang; Jiwu Huang, "Copy-Move Detection of Audio Recording with Pitch Similarity," in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 1782-1786, 19-24 April 2015. doi: 10.1109/ICASSP.2015.7178277

Abstract: The widespread availability of audio editing software has made it very easy to create forgeries without perceptual trace. Copy-move is one of the most popular audio forgeries. It is very important to identify audio recordings with duplicated segments. However, copy-move detection in digital audio by sample-by-sample comparison is invalid due to post-processing after forgery. In this paper we present a method based on pitch similarity to detect copy-move forgeries. We use a robust pitch tracking method to extract the pitch of every syllable and calculate the similarities of these pitch sequences. Then we can use the similarities to detect copy-move forgeries in digital audio recordings. Experimental results show that our method is feasible and efficient.

Keywords: audio recording; counterfeit goods; audio editing software; audio forgeries; copy-move detection; digital audio recording; pitch sequences; pitch tracking; post-processing; Audio databases; Audio recording; Fingerprint recognition; Forgery; Image segmentation; Robustness; Security; Audio forensics; Audio forgeries; Copy-Move detection; Pitch similarity (ID#: 15-8816)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7178277&isnumber=7177909

Nagano, H.; Mukai, R.; Kurozumi, T.; Kashino, K., "A Fast Audio Search Method Based on Skipping Irrelevant Signals By Similarity Upper-Bound Calculation," in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 2324-2328, 19-24 April 2015. doi: 10.1109/ICASSP.2015.7178386

Abstract: In this paper, we describe an approach to accelerate fingerprint techniques by skipping the search for irrelevant sections of the signal and demonstrate its application to the divide and locate (DAL) audio fingerprint method. The search result for the applied method, DAL3, is the same as that of DAL mathematically. Experimental results show that DAL3 can reduce the computational cost of DAL to approximately 25% for the task of music signal retrieval.

Keywords: acoustic signal processing; audio signal processing; fingerprint identification; musical acoustics; DAL audio fingerprint method; divide-and-locate audio fingerprint method; fast audio search method; finger print technology; music signal retrieval; similarity upper-bound calculation; skipping irrelevant signals; Acceleration; Accuracy; Computational efficiency; Databases; Fingerprint recognition; Histograms; Multiple signal classification; Audio fingerprint; audio search; information retrieval (ID#: 15-8817)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7178386&isnumber=7177909
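
Note on the Nagano et al. entry above: the abstract does not spell out how DAL3 computes its bound, so the sketch below is a generic illustration of skipping by a similarity upper bound, not the paper's algorithm. It assumes symbol sequences compared by histogram intersection: sliding a window by one position replaces a single symbol, so the intersection count can rise by at most one per step, and positions that cannot reach the threshold are skipped without changing the result. All function names and the toy data are hypothetical.

```python
from collections import Counter

def intersection(query_hist, window_hist):
    """Histogram intersection: number of symbols the two histograms share."""
    return sum(min(c, window_hist.get(s, 0)) for s, c in query_hist.items())

def search_exhaustive(stream, query, min_count):
    """Reference search: evaluate every window position."""
    n, hq = len(query), Counter(query)
    return [t for t in range(len(stream) - n + 1)
            if intersection(hq, Counter(stream[t:t + n])) >= min_count]

def search_with_skipping(stream, query, min_count):
    """Same hits as the exhaustive search, with fewer window evaluations."""
    n, hq = len(query), Counter(query)
    hits, evaluated, t = [], 0, 0
    while t + n <= len(stream):
        count = intersection(hq, Counter(stream[t:t + n]))
        evaluated += 1
        if count >= min_count:
            hits.append(t)
            t += 1
        else:
            # After k shifts the count is at most count + k, so no window
            # closer than (min_count - count) positions away can be a hit.
            t += min_count - count
    return hits, evaluated

# Toy data (assumption): the query embedded in an otherwise unrelated stream.
query = list("bcdbcdbc")
stream = list("a" * 40) + query + list("a" * 40)
hits, evaluated = search_with_skipping(stream, query, min_count=len(query))
```

Because the skip width comes from a true upper bound, the skipping search returns exactly the same positions as the exhaustive one, which mirrors the abstract's statement that the accelerated method gives mathematically identical results.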

Xu, G.; Meng, Z.; Lin, J.; Deng, C.; Carson, P.; Fowlkes, J.; Tomlins, S.; Siddiqui, J.; Davis, M.; Kunju, L.; Wang, X., "In Vivo Biopsy by PhotoacousticUS Based Tissue Characterization," in Ultrasonics Symposium (IUS), 2015 IEEE International, pp. 1-4, 21-24 Oct. 2015. doi: 10.1109/ULTSYM.2015.0216

Abstract: Our recent research has demonstrated that the frequency domain power distribution of radio-frequency (RF) photoacoustic (PA) signals contains the microscopic information of the optically absorbing materials in the sample. In this research, we sought methods of systematically analyzing the PA measurement from biological tissues and examined the feasibility of evaluating tissue chemical and microstructural features for potential tissue characterization. By performing a PA scan over a broad spectrum covering the optical fingerprints of specific relevant chemical components, and then transforming the radio-frequency signals into the frequency domain, a 2D spectrogram, namely a physio-chemical spectrogram (PCS), can be generated. The PCS contains rich diagnostic information allowing quantification of not only contents but also histological microfeatures of various chemical components in tissue. Comprehensive analysis of the PCS, namely photoacoustic physio-chemical analysis (PAPCA), could reveal the histopathology information in tissue and holds the potential to achieve comprehensive and accurate tissue characterization.

Keywords: bio-optics; biological tissues; biomedical ultrasonics; photoacoustic effect; biological tissues; biopsy; chemical components; frequency domain power distribution; optically absorbing materials; photoacoustic physiochemical analysis; photoacousticUS based tissue characterization; physiochemical spectrogram; radiofrequency photoacoustic signals; Acoustics; Biomedical optical imaging; Chemicals; Fingerprint recognition; Lipidomics; Liver; Microscopy; fatty liver; multi-spectral; photoacoustic imaging; prostate cancer; spectral analysis; tissue characterization (ID#: 15-8818)

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7329159&isnumber=7329057