Side-channel Vulnerability Factor

ABSTRACT

There have been many attacks that exploit information leakage and many proposed countermeasures to protect against these attacks. Currently, however, there is no systematic, holistic methodology for understanding leakage or making performance-security trade-offs. As a result, it is not well studied or known how various system design decisions affect information leakage or vulnerability to side-channel attacks.

In this paper, we propose a new metric for measuring information leakage called the Side-channel Vulnerability Factor (SVF). SVF is based on our observation that all side-channel attacks ranging from physical to microarchitectural to software rely on recognizing leaked execution patterns. Accordingly, SVF examines patterns in attackers' observations and measures their correlation to the victim's actual execution patterns.

SVF can be used by designers to evaluate security options and create robust systems, and by attackers to pick out leaky targets. As a detailed case study, we show how SVF can be used to evaluate microarchitectural design choices. This study demonstrates the danger of evaluating ad hoc side-channel countermeasures in a vacuum and without quantitative leakage metrics. A whole-system security metric like SVF has the potential to establish a quantitative basis for evaluating system security decisions pertaining to side-channel leaks.

1 Introduction

Computing is steadily moving to a model where most of the data lives on the cloud and personal electronic devices are used to access this data from multiple locations. The aggregation of services in the backend provides increased efficiency but also creates a security risk because of information leakage through shared resources such as processors, caches and networks. These information leaks, known as side-channel leaks, can compromise security by allowing a user to obtain information from an unauthorized security level or even by simply violating observability restrictions.

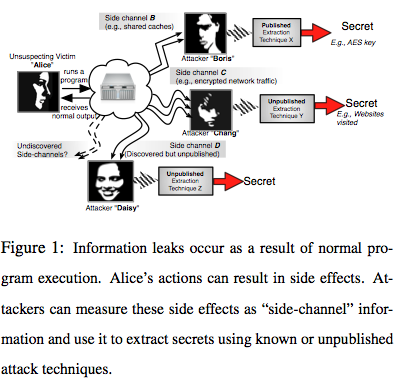

In a side-channel attack, an attacker is able to deduce secret data by observing the indirect effects of that data. For instance, in Figure 1 Alice runs a program on a shared system. The inputs to that program may include URLs she is requesting, or sensitive information like encryption keys for an HTTPS connection. Even though the shared system is secure enough that attackers cannot directly read Alice's inputs, they can observe and leverage the inputs' indirect effects on the system, which leave unique signatures. For instance, web pages have different sizes and fetch latencies. Different bits in the encryption key affect processor cache and core usage in different ways. All of these network and processor effects can be, and have been, measured by attackers. Through complex post-processing, attackers are able to gain a surprising amount of information from this data.

While defenses to many side-channels have been proposed, currently no metrics exist to quantitatively capture the vulnerability of a system to side-channel attacks.

Existing security analyses offer only existence proofs that a specific attack on a particular machine is possible or that it can be defeated. As a result, it is largely unknown what level of protection (or conversely, vulnerability) modern computing architectures provide. Does turning off simultaneous multi-threading or partitioning the caches truly plug the information leaks? Does a particular network feature obscure information needed by an attacker? Although each of these modifications can be tested easily enough and is likely to defeat existing, documented attacks, it is extremely difficult to show that they increase resiliency to future attacks or even that they make things harder for an attacker using novel improvements to known attacks.

To solve this problem, we present a quantitative metric for measuring side-channel vulnerability. We observe a commonality in all side-channel attacks: the attacker always uses patterns in the victim's program behavior to carry out the attack. These patterns arise from the structure of programs used, typical user behavior, and user inputs. For instance, memory access patterns in OpenSSL (a commonly used crypto library) have been used to deduce secret encryption keys [4]. These accesses were indirectly observed through a cache shared between the victim and the attacker process. As another example, crypto keys on smart cards have been compromised by measuring patterns in power profiles that arise from repeating crypto operations.

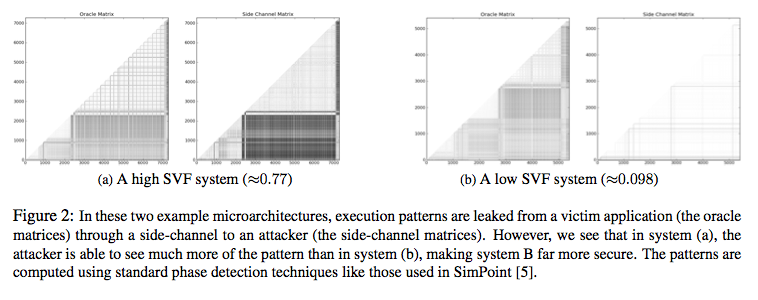

In addition to being central to side channels, patterns have the useful property of being computationally recognizable. In fact, pattern recognition in the form of phase detection [3, 5] is well known and used in computer architecture. In light of our observation about patterns, side-channel attackers actually do no more than recognize execution phase shifts over time in victim applications. In the case of encryption, computing with a 1 bit from the key is one phase, whereas computing with a 0 bit is another. By detecting shifts from one phase to the other, an attacker can reconstruct the original key [4, 2]. HTTPS attacks work similarly: the attacker detects the network phase transitions from "request" to "waiting" to "transferring" and times each phase. The timing of each phase is, in many cases, sufficient to identify a surprising amount and variety of information about the request and user session [1]. Given this commonality of side-channel attacks, our key insight is that side-channel information leakage can be characterized entirely by recognition of patterns through the channel. Figure 2 shows an example of pattern leakage through two microarchitectures, one of which transmits patterns readily and one of which does not.
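The phase-shift view of key recovery can be illustrated with a toy sketch. It assumes, purely for illustration, that the attacker already has one scalar observation per key-bit operation (for example, a latency) and that the two phases separate cleanly around the midpoint of the observed range. The function names and the midpoint threshold are hypothetical and are not taken from the cited attacks, which need far more elaborate processing.

```python
def recover_key_bits(samples):
    """Label each sample as phase 1 (above the midpoint) or phase 0 (otherwise).

    Assumption: one scalar observation per key-bit operation, with the
    '1' phase producing larger values than the '0' phase.
    """
    if not samples:
        return []
    threshold = (min(samples) + max(samples)) / 2
    return [1 if s > threshold else 0 for s in samples]


def count_phase_shifts(bits):
    """Count transitions between consecutive phases in the labeled sequence."""
    return sum(1 for prev, cur in zip(bits, bits[1:]) if prev != cur)


# Hypothetical observations, one per key-bit operation.
observed = [9.8, 4.1, 10.2, 10.0, 3.9]
bits = recover_key_bits(observed)
shifts = count_phase_shifts(bits)
```

With noisy observations the two phases overlap and a fixed threshold misclassifies bits, which is the situation where an attacker needs many observations or smarter filtering.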

Accordingly, we can measure information leakage by computing the correlation between ground-truth patterns and attacker-observed patterns. We call this correlation the Side-channel Vulnerability Factor (SVF). SVF measures the signal-to-noise ratio in an attacker's observations. While any amount of leakage could compromise a system, a low signal-to-noise ratio means that the attacker must either make do with inaccurate results (and thus make many observations to create an accurate result) or become much more intelligent about recovering the original signal. This assertion appears to be historically true, as evidenced by the example SVFs given in Table ??. While the attack [4] on the 0.73 SVF system was relatively simple, the attack [2] on the 0.27 SVF system required a trained artificial neural network to filter noisy observations.
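As a rough illustration of correlating ground-truth patterns with attacker-observed patterns, the following minimal sketch may help. The text above specifies only that a correlation is computed, so several choices here are assumptions: each trace is a list of scalars sampled at the same time steps, a "pattern" is represented by the absolute differences between all pairs of time steps, and the correlation is the Pearson coefficient. All function names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pairwise_distances(trace):
    """Absolute difference between every pair of time steps in a scalar trace."""
    return [abs(a - b) for a, b in combinations(trace, 2)]


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def leakage_correlation(ground_truth, observed):
    """Correlate the pattern structure of the victim trace with the attacker trace."""
    if len(ground_truth) != len(observed):
        raise ValueError("traces must cover the same time steps")
    if len(ground_truth) < 3:
        raise ValueError("need at least three time steps")
    return pearson(pairwise_distances(ground_truth), pairwise_distances(observed))


# Hypothetical traces: the victim alternates between two phases.
victim = [0, 1, 0, 1, 1, 0]
leaky_channel = [10, 20, 10, 20, 20, 10]   # a scaled copy of the victim's pattern
flat_channel = [7, 7, 7, 7, 7, 7]          # reveals nothing about the phases

leaky_score = leakage_correlation(victim, leaky_channel)
flat_score = leakage_correlation(victim, flat_channel)
```

Comparing pairwise differences rather than raw values lets the two traces use different units, since only the structure of which time steps look alike is compared.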

As a case study for SVF, we examine the side-channel vulnerability of processor memory microarchitecture, specifically caches, which have been shown to be vulnerable in previous studies. Our case study shows that design features can interact and affect system leakage in odd, non-linear ways; evaluation of a system's security, therefore, must take into account all components. Further, we observed that features designed or thought to protect against side channels (such as dynamic cache partitioning) can themselves leak information. These results indicate the potential and value of embedding our evaluation method into the traditional architecture design cycle.

SVF can be useful to architects, auditors and attackers. A security-minded computer architect can use SVF to determine microarchitecture configurations that reduce information leakage. Security auditors can use the vulnerability metric to classify systems according to CC or TCSEC criteria. Attackers can use the SVF methodology to determine vulnerable programs and microarchitectures. SVF can also be used to identify components in a system that leak sensitive information. These components can then be targeted for attack or defense.

To summarize, we propose a metric and methodology for measuring information leakage in systems; this metric represents a step in the direction of a quantitative approach to system security, a direction that has not been explored before. We also evaluate cache design parameters for their effect on side-channel vulnerability and present several surprising results, motivating the use of a quantitative approach for security evaluation. Finally, we briefly discuss how our measurement methods can be extended to network, disk, or entire systems.

References

[1] S. Chen, R. Wang, X. Wang, and K. Zhang. Side-channel leaks in web applications: A reality today, a challenge tomorrow. In Security and Privacy (SP), 2010 IEEE Symposium on, pages 191-206, May 2010.

[2] D. Gullasch, E. Bangerter, and S. Krenn. Cache games - bringing access-based cache attacks on AES to practice. In Security and Privacy (SP), 2011 IEEE Symposium on, pages 490-505, May 2011.

[3] M. J. Hind, V. T. Rajan, and P. F. Sweeney. Phase shift detection: A problem classification, 2003.

[4] C. Percival. Cache missing for fun and profit, 2005.

[5] T. Sherwood, E. Perelman, G. Hamerly, S. Sair, and B. Calder. Discovering and exploiting program phases. Micro, IEEE, 23(6):84-93, Nov.-Dec. 2003.
