IEEE Security & Privacy 2016 - Trip Update

This week, I am at the IEEE Symposium on Security and Privacy (the Oakland conference) in San Jose, CA. Breakfast started at 7:30, the main session started right at 8:30, and after a short introduction the research presentations began at 8:40.

For the duration of the conference, the papers are available to download for free: http://www.ieee-security.org/TC/SP2016/program.html. The 2015 papers are also free to download now. I highly recommend reading the papers before jumping to conclusions; I may misinterpret what is being said while I take notes here.

The first set of talks was about hardware for security. The first talk proposed an idea where a sensitivity bit is set for return pointers (and similar data), and if non-sensitive code overwrites that data, the hardware stores the write as non-sensitive. The second paper looked at securing the hardware itself. The authors designed a small, stealthy attack that could be inserted into hardware during fabrication, using a capacitor as the trigger. It is good at hiding from dynamic analysis and small enough to evade visual inspection. The key is charge sharing between a small and a large capacitor. This trigger could be used to tell the processor to switch to a more privileged mode. The presenter didn't have a defense yet. The third talk in this area looked at using the cache to break formally proven security applications. It relies on a mismatch in cacheability: the attacker changes memory without the cache line getting marked as dirty, and when a cache miss happens, the attacker's value is fetched from memory. (Yes, I skipped details.) The defense section was short and was not the focus; most of the solutions carry a several-fold slowdown. The fourth talk looked at how attacks can come from within a process, e.g., by taking advantage of a library fault (Heartbleed) or by using private APIs (iOS apps). They introduced shreds, a sub-part of a thread: a shred is an arbitrarily scoped segment of a thread's execution. I think shreds can have their own secure memory. Then there was a lot of engineering talk about how it works and how to make it work. The final speaker was on cache-assisted secure execution on ARM. The goal was to defend against a multi-vector adversary, e.g., cold boot attacks and OS compromise. It appears that they set up a secure zone, and sensitive information and code are decrypted only in the CPU cache.
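To make the first talk's idea concrete, here is a toy model in Python. It is my own sketch, and the class and method names are hypothetical rather than the paper's interface: each memory word carries a one-bit sensitivity tag, sensitive stores set it, ordinary stores clear it, and a sensitive load (such as fetching a return address) refuses a word whose tag was cleared by non-sensitive code.

```python
class TaggedMemory:
    """Toy model: every word carries a 1-bit 'sensitive' tag (hypothetical API)."""

    def __init__(self, size):
        self.words = [0] * size
        self.tags = [False] * size

    def store(self, addr, value):
        # Ordinary (non-sensitive) write: the word is recorded as non-sensitive.
        self.words[addr] = value
        self.tags[addr] = False

    def store_sensitive(self, addr, value):
        # Sensitive write, e.g. a call pushing its return address.
        self.words[addr] = value
        self.tags[addr] = True

    def load_sensitive(self, addr):
        # Sensitive read, e.g. a return popping the address: the tag must still be set.
        if not self.tags[addr]:
            raise PermissionError(f"word {addr} was overwritten by non-sensitive code")
        return self.words[addr]


mem = TaggedMemory(16)
mem.store_sensitive(8, 0x401000)   # call pushes a return address
print(hex(mem.load_sensitive(8)))  # legitimate return
mem.store(8, 0xDEADBEEF)           # a buffer overflow clobbers the slot
try:
    mem.load_sensitive(8)
except PermissionError as err:
    print("blocked:", err)
```

The point of the model is that the attacker can still overwrite the word, but the overwrite itself downgrades it, so the hijacked return is caught at use time.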

During the first break, I went to the FedEx Office to get the boxes that HR mailed for our table here. They upped their support this year: I got a giant tube and three boxes. The tube had a background for the table, and I worked on setting it up. I didn't put it together correctly the first time. The top bar has a label that says "top", but the label doesn't actually go on the top; it's really on the bottom of the top bar.... The bottom bar has the same oddness: the "bottom" label is on the top of the bottom bar. But it's up now. HR also sent a box of NSA water bottles. I'll post a picture after lunch.

And now back to the science. The second talk is from MIT Lincoln Laboratory (MITLL) and Northeastern, working on LAVA: a technique to add cheap, plentiful, realistic, security-critical bugs into code to test whether all those proposed technologies actually work, and how well. It currently works on C source code. The speaker explained that they use taint analysis to identify the places where buffer overflows could occur, so that they can add a security-relevant buffer overflow bug there. They tested a tool, a fuzzer, against these bugs, and it found 2% of them. But that wasn't a big part of the talk. They also started to measure how hard the bugs are to find, i.e., how far into the execution trace they are.
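A minimal sketch of the LAVA idea, under simplified assumptions: the function names, the magic value, and the byte offsets are hypothetical, and real LAVA instruments C source. The injected bug only fires when specific input bytes (ones the taint analysis showed reach the vulnerable copy) equal a magic value, which also hints at why a random fuzzer rarely finds these bugs.

```python
import random

MAGIC = b"lava"      # hypothetical trigger value chosen by the injector
TRIGGER_OFFSET = 4   # hypothetical input bytes that taint analysis traced to the copy


def parse_record(data: bytes) -> bytearray:
    """Copy a record into a fixed 8-byte buffer, with an injected bug."""
    buf = bytearray(8)
    length = min(len(data), len(buf))
    # Injected bug: if the tainted bytes match MAGIC, the bounds check is skipped.
    if data[TRIGGER_OFFSET:TRIGGER_OFFSET + 4] == MAGIC:
        length = len(data)
    for i in range(length):
        if i >= len(buf):
            raise IndexError("buffer overflow triggered")  # stands in for memory corruption
        buf[i] = data[i]
    return buf


def random_fuzz(trials: int, size: int = 16, seed: int = 0) -> int:
    """Count how many uniformly random inputs trigger the injected bug."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            parse_record(data)
        except IndexError:
            hits += 1
    return hits
```

Each random input matches a 4-byte magic value with probability 1 in 2^32, so a purely random fuzzer will almost never reach the bug, while an input crafted with `MAGIC` at offset 4 triggers it every time.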

The third talk is about building new gesture recognizers, like what the Microsoft Kinect does. They want to improve gesture recognition by moving away from the machine-learning approach. I didn't see the security aspect of it. The fourth is on offensive techniques against binaries. It's a systematization of knowledge (SoK), which means they are trying to capture what is going on. They found that tools are not composable, are closed source or practically closed source (written in a difficult language), are inflexible, and are architecture dependent. They made a framework, angr, that is composable and overcomes many of these issues. They then applied it to the 131 binaries released in the DARPA Cyber Grand Challenge. The presenter mentioned that he had good success with a fuzzer, unlike LAVA. The final paper before lunch was by Matthew Smith, an honorable mention author in the 2nd Annual paper competition. They worked on making binary code more analyzable through decompilation. The concept is far from new; they focused on readability and ran user studies on it. The user studies showed improvement for both students and experts, and the gap between experts and students was smallest with the readability improvements: with the tool, the students came closest to the experts, and they even did better than the experts who used the decompiler with worse readability.

The first session after lunch seemed to be mostly about oblivious RAM (ORAM). According to the second presentation, the number of papers on ORAM has been going up over the last few years, though there have been impactful papers ever since it was introduced in 1996. First up was a presentation of a project with a lablet co-author, Adam Aviv. The research was supported by ONR and NSF. They worked on extending ORAM to support arbitrary block sizes and secure deletion. The second was also about dealing with the limitations of ORAM: they considered an asynchronous attack threat model and then developed a way to take real queries and make the access patterns appear random by adding additional queries. So they propose a multi-user extension for ORAM. The third paper was presented by a former PhD student who received his PhD yesterday. It was supported by NSF, SaTC, and others. The co-author was Jonathan Katz, UMD lablet PI. They worked with secure multi-party computation and ORAM, and they wanted to hide the accesses. They proposed a new structure that has faster initialization and supports multiple users. At this point, we transitioned from ORAM talks to a talk on migrating from X.509 to anonymous certificates and verifiable computing. The last paper dealt with photographs as evidence. They worked on a scheme that cryptographically proves that a photo is authentic. The problem with signing the photo in the camera is that any change, such as a crop or a rotation, invalidates the signature, even though such changes wouldn't invalidate authenticity. So they want to enable a set of permitted transformations and have everyone along the line be able to verify that it is an authentic photo. One consequence of this work: no more airbrushing dating website photos.
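For readers new to ORAM, here is the simplest (and least efficient) scheme I know of, sketched in Python as an illustration rather than any of the constructions presented: every logical access reads and rewrites every block, so the server-visible access pattern is the same no matter which block the client wanted. A real scheme would also store a fresh re-encryption of each block on every write so the server cannot tell which one changed; that step is only noted in a comment here. Schemes such as Path ORAM reduce this O(N) cost per access to polylogarithmic.

```python
class LinearScanORAM:
    """Trivial ORAM: touch every block on every access (illustrative only)."""

    def __init__(self, n_blocks):
        self.server = [b""] * n_blocks  # untrusted storage
        self.trace = []                 # what the server observes

    def _server_read(self, i):
        self.trace.append(("read", i))
        return self.server[i]

    def _server_write(self, i, block):
        self.trace.append(("write", i))
        self.server[i] = block  # a real scheme would write a fresh re-encryption here

    def access(self, op, index, new_value=b""):
        """Read or write one logical block while scanning all physical blocks."""
        result = None
        for i in range(len(self.server)):
            block = self._server_read(i)
            if i == index:
                result = block
                if op == "write":
                    block = new_value
            self._server_write(i, block)
        return result


a = LinearScanORAM(4)
a.access("write", 1, b"x")
b = LinearScanORAM(4)
b.access("write", 3, b"y")
print(a.trace == b.trace)  # same observable pattern for different indices
```

The design choice is deliberately naive: obliviousness comes from uniformity, and the interesting research (arbitrary block sizes, multiple users, faster setup) is about getting the same guarantee far more cheaply.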

After the afternoon break, the first presentation was an SoS presentation by Elissa Redmiles of UMD. Her project with Michelle Mazurek is on how users understand security advice. This is definitely the easiest presentation to type about. :) Anyway, they did human-subjects research, a survey of how people decide what to believe, looking at both the physical and the cyber world. The hour-long interviews were coded and analyzed. Their first recommendation is to develop vignettes so that people do not need to learn about the harm firsthand. There is also an issue with marketing materials: people don't trust information that is marketing. The second presentation feels like a follow-up to the honorable mention paper from the 2nd paper competition. OK, it definitely is: Yasemin Acar is talking about that paper. Sascha Fahl is also in attendance and is a co-author here. To further investigate that research, they ran developer studies in the lab, both in Germany and at UMD. They taught the subjects to think aloud, explaining what they were doing. Then they put them in one of 4 conditions determining which resources they could use, and had them do 4 tasks; each task could be solved in 20 minutes and had a secure and an insecure way of doing it. The stats about security are fun: only 16% mentioned security while doing the tasks, but 79% said they thought about security in the exit interview. The result is that for functional correctness the order was Stack Overflow, official documentation, book, Google, while for getting security right, Stack Overflow dropped to last: official documentation, book, Google, and then Stack Overflow.

The third presentation was from UIUC (but I don't think it is SoS connected). The concept is that people plug in USB drives that they find. The research questions: is it true, and what is the percentage? Can you make it more effective? And why do people do it (demographics)? So they ran an experiment with 297 USB drives at UIUC. *Yes, it was IRB approved. Results: of 297 total, 290 were taken, and 135 were plugged in and had a file opened. (They had no ethical way to measure drives that were plugged in without a file being clicked.) This study is fun and is getting lots of laughs from the audience. They couldn't make the drives more effective, but a return label was effective at decreasing the rate. http://www.ieee-security.org/TC/SP2016/papers/0824a306.pdf (to get the paper). 68% said they wanted to return the drive, but the winter break pictures were looked at first. 68% also didn't take precautions, though one person was worried enough that he sacrificed a university computer.
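For a quick sense of scale, the reported counts convert to rates as follows. This is plain arithmetic on the numbers above; the rounding to one decimal place is mine.

```python
DROPPED, TAKEN, OPENED = 297, 290, 135


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


print(f"taken:  {pct(TAKEN, DROPPED)}% of dropped drives")   # 97.6
print(f"opened: {pct(OPENED, DROPPED)}% of dropped drives")  # 45.5
print(f"opened: {pct(OPENED, TAKEN)}% of taken drives")      # 46.6
```

So nearly every drive was picked up, and close to half of all dropped drives had a file opened.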

The fourth paper was a survey of techniques against telephone spam. There are 200,000 complaints every month about robocalls. There is fraud, and it's on the rise. The three categories of techniques are call request header analysis, voice interaction screening, and caller compliance. None are perfect, but voice interaction screening was the worst.

The last research presentation of the day, before the poster session, was about SMS messages (text messages). It looks at the now larger attack surface of text messages. Since SMS is being used for two-factor authentication, it's an interesting thing to look at. They built their dataset from public text-message gateways: services that post the messages sent to a phone number. They looked at the PINs sent by LINE, which looked pretty random; WeChat's numbers, on the other hand, were pretty structured and had 626 PINs. Talk2 is even worse... the graphics are worth looking at. Of the 33 services examined, 13 failed randomness tests. They found password reset links, usernames and passwords, names and addresses, and credit card numbers with expiration dates and CVC codes. They found a phishing campaign that sent text messages asking for iCloud information. But since it's a text message, it is seen on the "missing" iPhone.....?? Hmm.
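The randomness testing can be illustrated with a basic chi-square goodness-of-fit test on digit frequencies. This is a stand-in I chose for illustration, not necessarily the battery of tests the authors ran; any sample PINs you feed it are your own, and 16.919 is the standard 5% critical value for 9 degrees of freedom.

```python
from collections import Counter

CRITICAL_9DF_5PCT = 16.919  # chi-square critical value, 9 degrees of freedom, alpha = 0.05


def digit_chi_square(pins):
    """Chi-square statistic for 'each digit 0-9 is equally likely' across all PINs."""
    digits = "".join(pins)
    if not digits:
        raise ValueError("need at least one digit")
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts.get(str(d), 0) - expected) ** 2 / expected for d in range(10))


def looks_uniform(pins):
    """True if we cannot reject uniform digits at the 5% level."""
    return digit_chi_square(pins) < CRITICAL_9DF_5PCT
```

This only checks overall digit frequency; structure such as repeated PINs or fixed positions needs further tests, and a real analysis needs far more samples than a toy example so that every expected count is reasonably large.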

One other note about usability: the hall this year is warmer. It's so nice not to be freezing, which was the theme of last year.

One last post of the day. After the last session was the poster session. Got to see some research posters.

Tuesday began with the paper awards. They recognized the best reviewer and 4 papers. I believe the best paper was the one presented yesterday about developing stealthy hardware attacks.

The first paper presented looked at potentially harmful apps: a study of 1.3 million Android apps and 10,000 iOS apps. They were looking for bad libraries. They built dummy apps that contained only the library and then submitted them to VirusTotal. They examined iOS apps by looking for the cross-platform libraries found on Android, and then had to do some examination to see whether the libraries were equivalent between the platforms. They found that about 3% of apps in the Apple App Store, and between 5% and 17% of apps in the jailbreak stores, had potentially malicious libraries. They found library contamination to be a vector for propagating potentially harmful code.

The second presentation was on detecting logic bombs in Android apps. Logic bombs are code where, under narrow conditions, the behavior of the app is subtly changed. Because the changed behavior itself may only look a bit weird, they focused on the check that guards it, i.e., trigger analysis. They tested the tool with 21,747 benign apps from the Google Play store, 11 malicious apps from DARPA red teams, and 3 real-world malware samples. Among the benign apps, they flagged 35, which they called false positives. They looked at each to see what the behavior was: some were license checks, and another welcomed you to a train station when you got near it. They also found a backdoor in an app that enabled remote locking based on an SMS with a user-set password; they found that if it received a particular long string, it would unlock. They also had success on the DARPA dataset.

The third paper is about leaking location and routing information through zero-permission sensors (sensors other than GPS, such as the gyroscope, accelerometer, and compass). To do this, they built a graph of the map. These sensors are really inaccurate, which is a challenge. From the sensor data, they work on finding the path that was traveled. They simulated how well it works in the biggest cities. They also did a real-world experiment in Boston and Waltham, MA. In Boston, the true path was the top guess 13% of the time and in the top 5 30-35% of the time; in Waltham, it was in the top 5 50% of the time.

The fourth paper looked at timing attacks on Android. The idea is that by analyzing the time series of interrupts that occur for a particular device, a user's sensitive information can be inferred. For example, looking at the timing of touch/untouch events on the touchscreen can lead to figuring out the unlock pattern.

The final paper was presented by Sascha Fahl, an honorable mention paper winner from the 2nd paper competition; his co-author then, as now, is Matthew Smith, and another co-author is Patrick McDaniel from PSU, who is also a PI in ARL's SoS CRA effort. (Yay for acronyms.) They took stock of Android security research in the academic community: for example, what the open research challenges are and what lessons were learned from the research. They concluded that the Android permission concept failed and was presumably doomed to fail from the beginning. This paper pulls together the multitude of papers published on Android security to move toward more of a science; it organizes the knowledge. There are way too many conclusions and areas evaluated for me to document here, so I recommend reading the paper.

The 2nd morning session.

The first paper is about key exchange: a formalized key confirmation model, a look at refresh-then-MAC protocol transforms, and a look at TLS 1.3 key confirmation. They confirm that the refresh-then-MAC transform generically adds key confirmation and that TLS 1.3 provides key confirmation.

The second paper was on formal analysis of TLS 1.3. They did symbolic verification of the protocol and discovered an issue where a man in the middle could end up with the same message hash values, so the victim would send a signed hash, giving the man in the middle the signature needed for authentication. The attack was shared with the TLS 1.3 working group, and they adjusted the signature in TLS 1.3 draft 11.

The third paper looked at the security of multiple handshakes in TLS 1.3. They proved that draft 10 was secure in that sense.

The fourth paper in this session is part of a bigger project toward an open-source, verified implementation of TLS 1.3. The topic is the attacker's method of downgrading the connection to a less secure mode. TLS 1.3 is a suite of protocols that are negotiated. They have a definition that tolerates weak algorithms and captures downgrade attacks, and then they analyzed TLS with it.

The final talk was not about TLS. Instead, it was about trusting authorities, as in certificate authorities. They argue for going from a single point of trust to multiple parties, but that is a grand challenge, so they are looking at just transparency: making signing public, so that what is signed and the signatures themselves are public. They made a protocol and tested it with thousands and tens of thousands of nodes. The key is signing trees.

Lunch was salad, followed by chicken and an apple tart. Then, after lunch, came a group of presentations on privacy.

The first one was from UIUC, looking at privacy-preserving data publishing by producing a synthetic dataset instead of releasing the sensitive data: similar fake data rather than the real data. The Census has done something like this.

The second was on formal methods for verifying the privacy properties of crypto protocols. The example problem he used is electronic passports: to an eavesdropper, all passport communication must look the same, so that the attacker can't track a passport. The passport protocol fails against most types of attacks.

The third paper was by a PhD student of Patrick McDaniel. It's an ARL CRA-funded project looking at neural networks and security/privacy, specifically how adversaries can attack neural networks to change their behavior. They increased the robustness of the neural network against the attacker while only slightly reducing accuracy.
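To illustrate what "attacking a neural network" means, here is the fast gradient sign method (FGSM, from Goodfellow et al.) applied to a tiny linear classifier. This is a much simpler setting than the paper's, chosen by me, and the weights and input are made up: for a linear score w·x + b the gradient with respect to x is just w, so moving each feature by eps against sign(w) lowers the score as much as possible under a per-feature budget of eps.

```python
def score(w, b, x):
    """Linear score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps, lower_score=True):
    """Shift every feature by eps along -sign(w) (or +sign(w)) to push the score."""
    direction = -1 if lower_score else 1
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]


w, b = [2.0, -1.0], 0.0
x = [0.5, 0.2]                     # score 0.8, classified as 1
x_adv = fgsm_linear(w, x, eps=0.3)  # roughly [0.2, 0.5], score about -0.1
print(predict(w, b, x), predict(w, b, x_adv))
```

A small, bounded change to every input flips the decision; defenses like the one presented aim to make the model's output less sensitive to exactly this kind of perturbation.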

The last talk was from CMU, with Anupam Datta. The research was on making algorithms more transparent. I don't know whether this is SoS funded; the research paper doesn't mention where the funding came from. With a machine-learning classifier, which inputs matter? It gets even more difficult because data can be correlated. The idea is to fix the inputs and then vary one input to see how the output changes. But a single input on its own can have no impact on the decision, so they look at marginal contributions. Game theory is the approach: treat it as a cooperative game, with a set of agents and a value for each subset. Then they tested it with a predictive model for arrests and one for income, to see whether the algorithm discriminated.
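The cooperative-game idea can be made concrete with exact Shapley values: each input's influence is its marginal contribution to the value function, averaged over every order in which the inputs could be added. This brute-force sketch is my own illustration, and the toy value function is hypothetical; it is exponential in the number of inputs, so it only suits small examples.

```python
from itertools import permutations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley value of each player for a characteristic function value(frozenset)."""
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)  # marginal contribution
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: t / n_orders for p, t in totals.items()}


# Hypothetical toy classifier: approve only if BOTH income and credit are "good".
# v(S) = 1 when setting the inputs in S to "good" is enough to guarantee approval.
def toy_value(coalition):
    return 1.0 if {"income", "credit"} <= coalition else 0.0


print(shapley_values(["income", "credit", "zip"], toy_value))
# {'income': 0.5, 'credit': 0.5, 'zip': 0.0}
```

This shows the point from the talk: changing income alone never flips the decision, yet averaging marginal contributions still credits it with half the influence, while the irrelevant input gets zero.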

After the break was a session on IoT attacks, then the short papers, which to me felt like a parade of government agencies. It started with Greg Shannon talking about the R&D strategy, then NIST, and ONR with their research plan. There were a few research talks and an update for CCS: 840 papers were submitted, and the deadline was the day before.

After the short talks was the business meeting, with two main topics. The first topic was to investigate a plan to move from a single paper-submission deadline to a year-long submission process with something like monthly deadlines and quicker responses. That way papers can be released before the conference. The hope is that it will reduce the number of not-fully-baked papers submitted that would have done better with a little more time. One other conference last year asked submitters, on its submission page, to voluntarily say whether the paper had been submitted to a previous conference and which one. They accepted some papers that had been submitted to S&P but rejected most.

The second part was that in the past year, S&P ran a student Program Committee: a group of 40 students acted as a PC. They reviewed the papers and made selections. One student who was on the PC said it was a good learning experience. The interesting part is that it was possible to compare the performance of the student PC and the senior PC. They are going to release a paper with more details on what they learned. Some highlights: at a high level, the students gave out the same ratings as the senior PC. However, students were slightly more likely than the senior reviewers to keep considering a borderline paper. The super interesting part is how much difference there was in acceptance. See the table I photographed.

The last day of IEEE Security and Privacy started with an English muffin with egg and cheese, and then the first session, on protecting the web.

The first presentation was on proxies and domain name collisions caused by all the new top-level internet domains. If the attacker can get a collision with an internal network domain name, they can serve proxy configuration files that reroute traffic. This study looked at the root cause of these DNS queries leaving the internal network and being looked up on the global internet DNS, and then did a vulnerability assessment. They used 2 years of NXDomain traffic at DNS root servers A and J. 85% of the leakage came from 12 ASes. 10 of those ASes are associated with home users, but most of the lookups are corporate related: they found that end devices mistakenly issue them when traveling. Looking at the collision names, 13% are registered, but the trend suggests that within 2 years, 60% will be registered. The recommended solutions were weak: filter at the top-level DNS, and stop hard-coding these names in OS configurations. A CERT alert on WPAD name collisions just came out.

The second looked at residual trust in domains after a change in ownership. There are accounts that trust email addresses at expired domains to receive password resets, so someone could register such a domain and then get the account information. There are lots of examples of really sensitive things being tied to an email address. They did a 6-year study of domains, using datasets of expired domains, malware domains, and blacklisted domains, and looked at the union of those sets. They found lots of numbers, but for here: 260,000 domains were used for malware after the domain expired, and 10% appeared on a blacklist. There is a growing trend of bad re-registrations, in the thousands per year. They also looked at ways of changing domain ownership other than expiration and re-registration. They found an expired APT domain name, registered it, and started getting APT traffic.

The third paper is about advertisements injected into vulnerable web pages, such as "buy Cialis" on a state.gov web page. The idea here is that there will be a semantic inconsistency between the injected ad and the content of the rest of the page. They built an algorithm to find this. nih.gov was the top domain for pages with out-of-context ads injected.
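A crude way to picture "semantic inconsistency" is bag-of-words cosine similarity between an injected snippet and the rest of the page. This is a stand-in I picked for illustration, not the paper's algorithm, which is considerably more sophisticated; the function names and the 0.1 threshold are made up.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts, letters only."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def looks_injected(snippet: str, page: str, threshold: float = 0.1) -> bool:
    """Flag a snippet whose vocabulary barely overlaps the page it sits on."""
    return cosine(bag_of_words(snippet), bag_of_words(page)) < threshold


page = "state health research grants research funding for public health programs"
print(looks_injected("buy cialis online cheap pills", page))
print(looks_injected("public health research funding", page))
```

The first snippet shares no vocabulary with the page and is flagged; the second overlaps heavily and is not. Real pages need more than word overlap (synonyms, boilerplate navigation text), which is where the research effort goes.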

The fourth paper was on HTTPS cookie hijacking. Over unencrypted connections it was easy: the cookie was an identifier; present the cookie and you're in. It gets more interesting with encryption, but there are still opportunities. Users often go to http://goo.., which redirects to https://goo.., but with that first request, a cookie is sent unencrypted. (Cookies are separate from the encrypted pages but still grant access; services have to mark their cookies as secure so that they are only sent over an encrypted channel.) They studied 25 major services, and 15 exposed sensitive information to the unencrypted cookie. Yahoo let them send email as the user with the unencrypted cookie. Pretty much the top websites do only a little with encrypted cookies; most cookies are sent over HTTP. They also found ad network cookies useful for figuring out browsing history.
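The server-side fix is the cookie's Secure attribute, which tells the browser to send the cookie only over HTTPS (HttpOnly additionally hides it from page scripts). Below is a short sketch using Python's standard `http.cookies` module; the cookie name and value are made up, and `would_send` is a hypothetical simplification of the browser's rule, not a real API.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie value for a session cookie restricted to HTTPS."""
    cookie = SimpleCookie()
    cookie["SID"] = session_id
    cookie["SID"]["secure"] = True    # only send over encrypted connections
    cookie["SID"]["httponly"] = True  # not readable from JavaScript
    cookie["SID"]["path"] = "/"
    return cookie["SID"].OutputString()


def would_send(cookie_is_secure: bool, scheme: str) -> bool:
    """Hypothetical, simplified browser rule: Secure cookies never go over plain HTTP."""
    return scheme == "https" or not cookie_is_secure


print(session_cookie_header("abc123"))
```

With the flag set, the initial `http://` request that precedes the redirect carries no session cookie, which closes exactly the leak described in the talk.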

The final paper is about web cloaking, which is used for search engine optimization and ads. Cloaking is giving different content to different users, e.g., giving Googlebot different content than a regular browser, i.e., deciding whether to serve the real or the fake content. They obtained black-market cloaking software. The software is pretty advanced at deciding when to serve the fake content. The cloakers work really hard, using big blacklists and machine learning, to detect bots, security researchers, companies, etc. So the Google researchers took lots of approaches, comparing features based on different information.

The 2nd Morning Session on Cryptography.

The first paper is about trusted ASICs and dealing with an untrusted manufacturer. DMA got shown on screen. Trusted fabrication is not cheap, and trusted foundries exist in only 5 countries. So how do you get trustworthy chips more cheaply? Their approach uses two chips, a prover and a verifier. The prover is untrusted, and the verifier is built trusted. There are challenges. They built a design that sometimes costs less than building the whole thing trusted. The prover's job is to convince the verifier that the claimed answer is correct. The verifier checks each layer of the computation. So they worked on improving speed. It allows the trusted fab to use older technology, like 350nm from 1997. They were looking at cost in terms of power use. They tested with two math-heavy workloads, such as ECC; as the computations get bigger, the approach becomes more power efficient. There are some limitations on applicability.

The second paper was an SoK paper on e-voting protocols. I wondered whether the e-voting protocol from UMD would be mentioned. There are 2 properties: no one should find out how a single voter voted, and if something goes wrong, it should be detected; that is, privacy and verifiability. The paper then focuses on verifiability. As is often the case, if you want more details, see the paper.

The third paper was about password typos: if there's a slight typo, the server accepts the password anyway, by accepting some variants of it. Interestingly, a kid at a science fair was also working on password typos. This paper asked: can it be built, does it help, and is it secure? First, they built it so that the hash is stored as normal, and if the check fails, the server starts trying versions with the typos corrected. The problem is that the hash is intentionally slow, so only a limited set of typos can be corrected. To determine which typos were common, they had Amazon Mechanical Turk workers retype passwords and found the top 3. Then they worked with Dropbox to instrument logins to see whether these typos occurred: within 24 hours, 3% of users failed to log in because of one of the top 3 typos, and of those 3%, 20% would have saved 1 minute or more logging in. But is it secure? Threat 1, server compromise: no change, as the hash database is the same. Threat 2, a remote guessing attack where the account is locked after q wrong guesses. Since each attempt is also checked against its 3 corrections, the attacker gets 3 free guesses per attempt, so naively attacker success goes up by 300%; but that isn't correct, because humans don't choose random passwords. So they ran a simulation study using password leaks as a proxy for real passwords, assuming the adversary knows the distribution of passwords and the set of correctors, and that the server can choose not to check for typos if the password is too common. Then the attacker's success rate would rise by only about 0.2%, from 2.something to 2.something. This presentation got lots of questions.
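The check-then-correct design can be sketched with Python's standard `hashlib` and `hmac` modules. Assumptions: the three correctors below (caps lock on, first letter's case flipped, one extra character at the end) are what I believe the paper found most common, but treat that list as my assumption; the iteration count and the "too common" list are made up for illustration.

```python
import hashlib
import hmac
import os

ITERATIONS = 50_000                          # illustrative; deliberately slow hashing
POPULAR = {"password", "123456", "qwerty"}   # hypothetical "too common" list


def hash_pw(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def enroll(password: str):
    """Store a salt and a normal slow hash; nothing typo-related is stored."""
    salt = os.urandom(16)
    return salt, hash_pw(password, salt)


# Assumed top-3 typo correctors.
CORRECTORS = [
    lambda s: s.swapcase(),              # caps lock was on
    lambda s: s[:1].swapcase() + s[1:],  # first letter's case flipped
    lambda s: s[:-1],                    # extra character at the end
]


def check(attempt: str, salt: bytes, stored: bytes) -> bool:
    """Exact match first; otherwise try each corrected variant (one slow hash each)."""
    if hmac.compare_digest(hash_pw(attempt, salt), stored):
        return True
    for fix in CORRECTORS:
        candidate = fix(attempt)
        if candidate == attempt or candidate in POPULAR:
            continue  # no change, or too common to tolerate typos for
        if hmac.compare_digest(hash_pw(candidate, salt), stored):
            return True
    return False
```

Each corrector costs one extra slow hash on a failed login, which is the cost limit the speaker described, and skipping popular passwords is the policy that keeps the guessing-attack gain small.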

The fourth paper was on access control in the cloud, answering the question: if you don't trust the cloud service provider, who will be the reference monitor that enforces security? For example, you have to trust the crypto being used. There are lots of options. The literature assumes that crypto is enough, but this research finds that crypto is not enough in dynamic systems where files are edited or permissions change. Then there was lots of discussion of encryption, signing, and re-keying so that normal RBAC permissions are possible. But the administrative costs of revoking access don't scale well.

The last paper is on blockchains. It's not far from the UMD lablet, even though we didn't support it: Elaine Shi is a co-author, and Jonathan Katz got a shout-out in the acknowledgements. Note that everything on the blockchain is public, such as the code and the data. This project is about an auction. Now we see a lot on how it works.

I also learned that the US Government has been growing Mario World trees.

And back to research presentations. I heard this is session 11. That's a lot for just day 3. Anyway, the first presentation is about localizing fault tolerance.

The next presentation is on browser fingerprinting: trying to get as much information as possible about the computer in order to identify you, using lots of attributes. They have a website to see if you are unique: http://www.amiunique.org. I was unique. It says that of the fingerprints they have collected, just 0.35% are Microsoft Edge. My phone is unique too.
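The "how unique am I" idea can be quantified as surprisal: an attribute value seen in a fraction p of fingerprints carries -log2(p) bits of identifying information. A small sketch using the 0.35% Edge figure; the second attribute's 2% frequency and the independence assumption (which real fingerprint attributes don't satisfy) are mine, for illustration only.

```python
import math


def surprisal_bits(fraction: float) -> float:
    """Identifying information, in bits, of a value shared by `fraction` of users."""
    return -math.log2(fraction)


edge = surprisal_bits(0.0035)  # Microsoft Edge share, about 8.16 bits
# Hypothetical second attribute seen in 2% of fingerprints; summing bits assumes independence.
combined = edge + surprisal_bits(0.02)  # about 13.8 bits
# Roughly 33 bits would suffice to single out one person among ~8.6 billion (2**33).
print(round(edge, 2), round(combined, 2))
```

A few uncommon attributes add up quickly, which is why being "unique" on such a site is the norm rather than the exception.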

The third paper is about end-to-end integrity for web apps: how to guarantee the integrity of the data, e.g., when the server is compromised.

The last paper is about nation-state censorship circumvention. They examined 55 papers and discussed the censoring technologies. They found 34 Tor-blocking incidents, the majority in Iran and China, but there were 9 other countries.

So Google had a booth, and they were giving out Lego sets of the Android. SoS Lock Lego set, anyone?

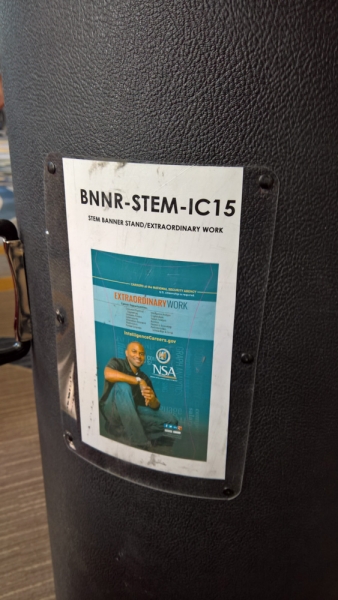

And now, the final research session of IEEE S&P. Well, before that, it was time to pack up the booth and take it to FedEx to be shipped back. The giant tube plus 3 boxes was reduced to the giant tube and 1 box. In the giant tube is the background for the booth. It kind of has terrible opsec. The picture here is of the outside of the tube. I wonder what is inside, and I wonder what organization it is associated with.

So the 2nd paper is about code-reuse gadgets. This is relevant to return-oriented programming (ROP): you are an attacker dealing with ASLR. Gadgets are small bits of code in libraries that end with a return, so by chaining them an attacker can control the program flow and do what they want. Defenders keep adding defenses to mitigate it. The authors were interested in whether binary-compatible mitigations work for complex applications (like Chrome or Acrobat). One mitigation they were proposing is NEAR (no-execute-after-read): whenever a section of code is read, the first byte is replaced with a halt so it can't be executed afterward. It was a good idea, but they weren't the first to publish it. So now they are checking its suitability. They found 3 assumptions, and by breaking them they could break the mitigation. 1) Code is singular in memory. They found this doesn't hold, so an attacker could find one copy, destroy it, and then use the information learned to use the 2nd copy. 2) Does transient code exist? Dynamically loaded libraries: read the library (destroying it), force an unload of the library, then reload it and have a working library. 3) Do function prologues inform about epilogues? At the beginning of a function, registers are saved, while at the end those registers are restored. So by reading the register saves at the beginning, you know the order they appear at the end. After these techniques, 90% of gadgets were still available. This was fun: they had the idea, lost out on publishing first, did more work, and showed the whole thing was ineffective. Guess they got the last laugh, muwahwahwah.
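Here is a toy model of the first and third bypasses. It is a sketch under simplifying assumptions, not the paper's tooling. Memory is a Python `bytearray`, a read overwrites the byte with the x86 HLT opcode (0xF4), and the byte sequence and register list are hypothetical. It shows why duplicated code and prologue/epilogue symmetry undermine destructive reads.

```python
HLT = 0xF4  # x86 HLT opcode, used here as the "destroyed" marker


class DestructiveMemory:
    """Toy destructive-code-read defense: any code byte read as data
    is overwritten with HLT, so it can no longer execute."""

    def __init__(self, code: bytes) -> None:
        self.code = bytearray(code)

    def read(self, addr: int) -> int:
        value = self.code[addr]
        self.code[addr] = HLT  # destroy the byte after disclosing it
        return value


def disclose(mem: DestructiveMemory) -> bytes:
    """Read an entire region byte by byte, destroying it in the process."""
    return bytes(mem.read(i) for i in range(len(mem.code)))


def infer_epilogue(prologue_pushes: list[str]) -> list[str]:
    """Registers saved in a prologue are restored in reverse order by the
    epilogue, so reading the prologue predicts an unread 'pop ...; ret' gadget."""
    return [f"pop {reg}" for reg in reversed(prologue_pushes)] + ["ret"]


# Assumption 1 broken: the same code is mapped twice.
original = bytes([0x55, 0x48, 0x89, 0xE5, 0xC3])  # push rbp; mov rbp, rsp; ret
copy_a = DestructiveMemory(original)
copy_b = DestructiveMemory(original)

leaked = disclose(copy_a)  # copy A is now all HLT...
print(leaked == bytes(copy_b.code))  # True: ...but copy B is identical and still executable

# Assumption 3 broken: the prologue reveals the epilogue without reading it.
print(infer_epilogue(["rbx", "rbp", "r12"]))  # ['pop r12', 'pop rbp', 'pop rbx', 'ret']
```

The attacker never reads copy B or the epilogue, so the defense never destroys them.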

The third paper was about control-flow attacks getting harder.

The final paper of the final session: using a hardware bug (Rowhammer) and memory deduplication (a software side channel) allows exploiting MS Edge without any software bugs. The attack leaks heap and code pointer addresses, creates a fake object, and uses Rowhammer to flip a bit in a pointer so it points to the fake object, which has read/write privileges. Windows, like some hypervisors, deduplicates memory to save space. So a normal write is fast, but a copy-then-write to a deduplicated page is slower, and the difference can be measured in JavaScript. There are a couple of challenges: the secret isn't in a page of known data, and the secret has too much entropy to guess all at once. So the attack is iterative: take the big known part of the page and vary the unknown part until a guess gets deduplicated with the target page, and then you know the pointer. For some secrets they probe with lots of pointers and need only one match. Then, in JavaScript, they create an object within an array, get a pointer to another object, and Rowhammer it to flip a bit so it points to the fake object, and then they can do whatever they want. Someone asked how long it takes: the deduplication part takes about 30 minutes, and the Rowhammer part depends on how fast Rowhammer is on the machine.
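Why iterate? Here is a toy simulation of the entropy argument. It assumes a hypothetical page layout where each victim page holds known filler plus one more secret byte than the last. It also assumes an oracle that reports whether a write was slow, meaning the page was merged with an identical page. In the real attack, the oracle is a JavaScript timing measurement and the layout control is far more involved. Here it is just a set lookup, and all names are hypothetical.

```python
PAGE_FILLER = b"\x00" * 8  # stands in for the large part of the page the attacker already knows


def victim_pages(secret: bytes) -> set[bytes]:
    """Hypothetical layout: each victim page holds the known filler plus one
    more secret byte than the last, so only one byte is unknown per probe."""
    return {PAGE_FILLER + secret[: i + 1] for i in range(len(secret))}


class DedupOracle:
    """Toy stand-in for the timing side channel: writing to an attacker page is
    slow (copy-on-write) only if it was merged with an identical victim page."""

    def __init__(self, pages: set[bytes]) -> None:
        self.pages = pages
        self.queries = 0

    def write_is_slow(self, page: bytes) -> bool:
        self.queries += 1
        return page in self.pages


def leak_iteratively(oracle: DedupOracle, length: int) -> bytes:
    """Recover the secret one byte at a time: at most 256 probes per byte."""
    known = b""
    for _ in range(length):
        for candidate in range(256):
            guess = known + bytes([candidate])
            if oracle.write_is_slow(PAGE_FILLER + guess):
                known = guess
                break
        else:
            raise RuntimeError("no candidate produced a slow write")
    return known


secret = bytes([0x7F, 0x12, 0xAB, 0x01])  # hypothetical 4-byte pointer fragment
oracle = DedupOracle(victim_pages(secret))
leaked = leak_iteratively(oracle, len(secret))
print(leaked.hex(), oracle.queries)  # 7f12ab01 321
print(2 ** (8 * len(secret)))  # worst case guessing all 4 bytes at once: 4294967296
```

Splitting the secret means the attacker needs at most 4 × 256 probes instead of up to 2^32. That is the point of attacking the unknown part piece by piece.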

The last session of this year's S&P is the NITRD panel hosted by Tomas Vagoun. Greg Shannon started with an overview of the Cybersecurity R&D Strategy. Then Tomas talked about a privacy research strategy; he was coordinating and leading the group on it. The strategy isn't out yet.

Jim Kurose talked about NSF: security and privacy research is a priority, and it is one area that doesn't get cut.

Following him was Doug Maughan, who talked about the role of DHS and how they are looking more at the tactical level: 3 years or less, short enough and mature enough to productize. There is a new focus in Silicon Valley on getting technology to market in 2 years or less. About 50% goes to industry and 40% to academia. They are really focused on privacy because they have lots of private data. They support the strategy and expect to see more privacy work. They are particularly interested in the human aspect of cybersecurity. They also need quantitative metrics to measure how secure we are in cyber. He also stressed the need for researchers to contextualize their research in the needs laid out in the strategy. Of note this year, Doug Maughan isn't traveling with a photographer to post pictures on the DHS S&T Twitter account.

Last up was Bill Newhouse from NIST, talking about NIST's role. They have 7 labs. He asked about the audience's interaction with NIST; most of the room had some. NIST is interested in all levels of research: basic, applied, and even practical advice. NIST does lots of work intramurally. They like to engage through workshops and publications with public comments. This past year NIST created a new Applied Cybersecurity Division. One project is getting away from passwords for authentication.

It was then opened for questions.

The first question was about HPC and security. A strategy came out a year ago, and it's a green area for growth.

The 2nd question was about measuring R&D performance. Everyone said it's a challenge. One way is to see how many people want to engage in research with the US. Cool, SoS must be awesome: go look at the map and the number of people who want to collaborate. http://www.sos-vo.org/map

Question: about privacy and cybersecurity working together through more privacy-sensitive approaches, instead of cybersecurity often undoing the privacy work. The idea was longer-term opportunities for collaboration between the two communities. (Sounds like a good topic to consider in Science of Security.)

Question: how does the OPM breach impact relations? It lowers credibility. There's a need for collaboration and working together; we all get tarnished together. The other take-home message is that humans are a huge part.