2017 SoS Lablet Annual Report - CMU

2017 SoS Lablet Annual Report - CMU

Lablet Annual Report

Purpose: To highlight progress made within the reporting year. Information is generally at a higher level which is accessible to the interested public. This will be published in an overall SoS Annual Report to be shared with stakeholders to highlight the accomplishments the Lablets have made over the past year.

A). Lablet Introduction

Purpose

The Carnegie Mellon University (CMU) Science of Security (SoS) Lablet includes nine projects, seven university subcontracts, and about fifty faculty, postdoc, and PhD student researchers working at eight campuses.

At Carnegie Mellon, researchers are drawn from six different academic departments in three different colleges.

- In the School of Computer Science (SCS): the Institute for Software Research (ISR), the Human Computer Interaction Institute (HCII), and the Computer Science Department (CSD).

- In the engineering college (the Carnegie Institute of Technology, CIT): the Electrical and Computer Engineering (ECE) department and the Engineering and Public Policy (EPP) department.

- In the Heinz College, the School of Information Systems and Management.

- Many of the Lablet faculty and graduate students are affiliated with the CMU CyLab coordinating entity for cybersecurity research.

Subcontractors (and sub-PI's) include the following:

- Cornell (Dexter Kozen)

- UCI (Sam Malek)

- UC Berkeley (Serge Egelman)

- U Pittsburgh (Scott Beach)

- Wayne State (Marwan Abi-Antoun)

- U Nebraska (Matt Dwyer, Witawas Srisa-an)

- UTSA (Jianwei Niu)

The team also collaborates with researchers at Bosch, Google, and Microsoft.

The Carnegie Mellon team is led by William Scherlis, with co-leads Travis Breaux and Jonathan Aldrich. The lead financial manager is Monika DeReno.

Approximately 16 PhD students participate in the program, drawn from the departments and institutions identified above. In addition, several MS and undergraduate students participate directly in lablet projects.

B). Fundamental Research

Hard Problems

The CMU lablet addresses all five of the Science of Security Hard Problems, with emphasis on (1) Composability and Scale and (5) Human behavior. There is significant additional effort relating to the other three problems, (2) Policy-governed secure collaboration, (3) Predictive security metrics, and (4) Resilient architectures.

Projects

The CMU Lablet includes nine projects. The chart below identifies the projects, their faculty leaders and subcontractors, and the hard problems addressed:

| Com | Pol | Met | Res | Hum | Leads and Subs | Title | |

| 1 | X | X | X | Aldrich, Garlan, Malek (UCI), Abi-Antoun (Wayne State) | Frameworks, APIs, and Composable Security Models | ||

| 2 | X | X | Kästner | Limiting Recertification in Highly Configurable Systems: Interactions and Isolation among Configuration Options | |||

| 3 | X | X | Platzer, Kozen (Cornell) | Security Reasoning for Distributed Systems with Uncertainties | |||

| 4 | X | X | Datta, Jia | Compositional Security | |||

| 5 | X | X | Aldrich, Sunshine | A Language and Framework for Development of Secure Mobile Applications | |||

| 6 | X | X | X | Garlan, Schmerl | Multi-model run-time security analysis | ||

| 7 | X | X | Aldrich, Dwyer (Nebraska) | Race Vulnerability Study and Hybrid Race Detection | |||

| 8 | X | X | X | Breaux, Niu (UTSA) | Usable Formal Methods for the Design and Composition of Security and Privacy Policies | ||

| 9 | X | X | Cranor, Acquisti, Christin, Telang, Egelman (Berkeley), Beach (U Pitt) | Understanding user behavior when security is a secondary task |

Two projects were discontinued: #5 (Harper) and #11 (Carley).

Highlights of results and impacts

This section presents research highlights CMU SoS Lablet activity, organized according to the Hard Problems.

Hard Problem #1 (Composition and Scale)

Aldrich – Secure Mobile. Project lead Aldrich with co-lead Sunshine developed language and framework concepts that support “building security in” for mobile and web applications, with specific benefits relating to both command injection, such as SQL injection attacks, and also least privilege, in how applications use system resources. The key idea is to embed domain-specific command languages, such as SQL, within the programming language—and to do this in a way such that it is more convenient to use the embedded (and secure) DSL than to use insecure string operations, which is typical in current web applications. A key feature of the approach is to do this in a general manner, enabling extension of a host language with new command DSLs—and to do this in a modular way. Past approaches were not adopted because they were un-modular, preventing separately-defined embedded DSLs to be used together.

The team developed a novel composition mechanism, type-specific languages, that supports modular DSL embeddings by associating a unique DSL with appropriate types.

- Recognition. The paper describing this work, Safely Composable Type-Specific Languages, was awarded an ECOOP '14 Distinguished Paper Award.

- Recognition (applying similar ideas to Python). The paper, Statically Typed String Sanitation Inside a Python, received a Best Paper Award at Privacy and Security in Programming, 2014.

Aldrich – Race Vulnerabilities. Aldrich and team developed a compositional approach to “deep immutability” that addresses the frequent mismatch of programmer expectations when reference variables are declared immutable: The reference itself might be guaranteed not to change, but the object referenced may nonetheless change. This “expectation gap” is the source of a range of escalation-of-privilege vulnerabilities (such as the Java 1.1 getSigners() method that shares an “immutable” reference to an array of signers that turns out to be mutable). Transitive immutability is a composition issue, since expectations must be expressed and analyzed across component boundaries.

Evaluation of this approach included not just mathematical analysis with respect to composition, but also empirical evaluations involving developers and code. As noted above, the underlying issue is the semantic expectations of developers. The team therefore assessed “developer usability” issues (Hard Problem #5) with human-subject studies demonstrating success rates that improved from a plain-Java control baseline of 0-40% (depending on task) to 70-90% success rates with our intervention. The team also examined existing code bases to assess the extent of change required to model and analyze deep immutability, with results indicating very few minor changes were needed. The results were reported in ICSE 2016 and ICSE 2017.

Datta – Secure Composition. The goal of this project is to allow both sequential and parallel composition of arbitrary number of verified and unverified system program modules—and in a potentially adversarial environment (e.g., with untrusted system modules). The team developed a semantic model that supports compositionality of components in the presence of such adversaries. The UberSpark system enforces verifiably-secure object abstractions in systems software written in C99 and assembly. This is significant because the absence of explicit abstractions in low-level programming is a major source of vulnerabilities (and the subject of much binary analysis). UberSpark provides a verifiable overlay of abstractions, and can compositionally verify security properties of extensible commodity systems software (e.g., BIOS, OS, hypervisor) written in low-level languages. The UberSpark abstractions include interfaces, function-call semantics for implementations of interfaces, access control on interfaces, and forms of concurrency. It uses a combination of hardware mechanisms and light-weight static analysis. UberSpark provides a verifiable assembly language CASM which is compiled using the certifying CompCert compiler into executable binaries. The team has validated UberSpark on a performant hypervisor, demonstrating only minor performance overhead, and low verification costs. UberSpark is available at http://uberspark.org

- Recognition. The project leader received the Outstanding Community Service Award, 2014 IEEE Technical Committee on Security and Privacy for service as Program Co-Chair for 2013 & 2014 IEEE Computer Security Foundations Symposium. Note: The top papers from this conference that were nominated by the program committee won the 2014 and 2015 NSA Best Scientific Cybersecurity Paper Awards.

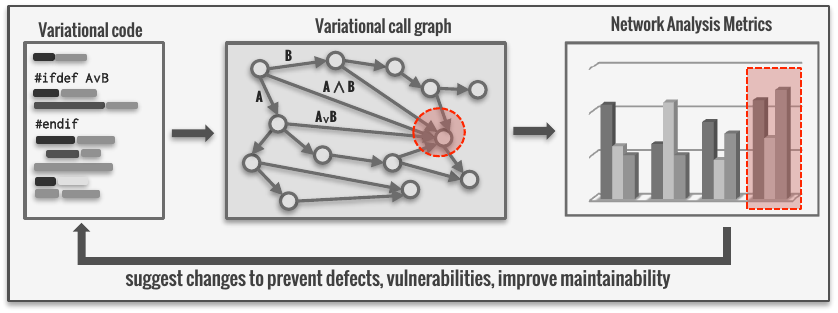

Kästner – Variability, Change, and Certification. Both evolution and configuration of a system increases the security challenge significantly. While some versions and configurations of a system might be secure, changing a single configuration option, updating a single dependency, or combining multiple configuration options in unanticipated ways can expose new vulnerabilities. Heartbleed, for example, affected only users who activated the (default, but not required) heartbeat configuration option. In the Linux kernel there are 15K compile-time configuration settings, with the total number of configurations (a theoretical maximum of 2^15k) being intractably huge. The Kästner team investigated techniques to simultaneously analyze very large numbers of compile-time configurations of C code at once, without looking at each configuration individually, a kind of configuration-aware taint tracking. They analyzed which parts of the code might suffer from increased configuration complexity (e.g., many #ifdefs), and applied the ideas directly to examples such as the Linux kernel and OpenSSL.

It turns out that network analysis techniques can be a predictor of which code fragments are more likely to contain security vulnerabilities in the Linux kernel. At the same time, we have investigated evolution issues by analyzing unusual commits that are outliers and merit security review. In an empirical evaluation, we found that our anomaly detection mechanism detected code commits that are actionable for developers to prioritize reviews. (The figure illustrates the use of graph-theoretic network metrics to identify areas of variational code needing more scrutiny.) Papers published in 2016 by the Kästner group regarding variability appear in IEEE/ACM ASE and ACM FSE.

In a related study, the team analyzed how security practices are enforced in standardized software certification processes, such as Common Criteria. Interviews with subject matter experts led to identification of tensions in the certification process, emphasizing that rapid recertification and composition issues are among the main concerns in today's certification. The effect of these issues is that old, vulnerable, but certified versions are often preferred to newer patched ones that are still going through the lengthy certification process. The studies confirm that a larger focus on rapid and compositional certification is critical to address security concerns for software systems, including those that are highly configurable.

Hard Problem #2 (Policy)

Breaux – Policy modeling and analysis. This team undertook work on a framework to support holistic analysis of systems for privacy. There are four interrelated scientific thrusts: (1) formal languages, based on natural language privacy policies, designed to express privacy principles and check data flow for compliance in a system’s architecture, (2) information type ontology theory to enable algorithmic checks of whether meanings assigned to predicates in a formal privacy language are consistent among policy makers and developers, (3) static and dynamic analysis techniques to find privacy violations by identifying and tracing data collection, use, and transfer practices throughout source code, and (4) privacy risk metrics to reliably measure how users and data subjects perceive the risk of specific data practices, so that developers can focus effort on mitigating the most important privacy threats.

These results are significant because it is otherwise too easy for policy makers and developers to “speak past” each other by hiding inconsistent data practices through ambiguous terminology. This includes tracing high-level data types to low-level expressions in source code, including both platform API-managed data types and user-provided data.

Privacy differs from security, because privacy is characteristically about the data subject, or the person about whom the data is descriptive. One of the goals of this work is to trigger a new line of design thinking that is not limited to access control and anonymization, and which can introduce consent and use-based restrictions imposed by architecture.

- Recognition. The journal paper Privacy Goal Mining through Hybridized Task Re-composition,” is invited for presentation at the 39th ICSE, 2017.

- The paper Ambiguity in Privacy Policies and the Impact of Regulation, receives Honorable Mention in the “must-read” Privacy Papers for Policy Makers Award competition from the Future of Privacy Forum in 2016.

- The paper A Theory of Vagueness and Privacy Risk Perception, is nominated for best paper at the 24th IEEE Intl Requirements Engineering Conference in 2016.

- The 2006 paper Towards Regulatory Compliance: Extracting Rights and Obligations to Align Requirements with Regulations, receives Honorable Mention for 2016 IEEE RE Most Influential Paper Award.

Hard Problem #3 (Metrics)

Breaux – Policy Modeling and Analysis. (See above.)

Garlan – Architectural Resiliency. (See above.)

Hard Problem #4 (Resiliency)

Garlan – Architecture Resiliency. Project lead Garlan with co-leads Aldrich, Schmerl, Cámara, Malek (GMU), Abi-Antoun (Wayne State) combined techniques from dynamic network analysis and software architecture to improve security to create a model-based anomaly detection algorithm. The algorithm identifies evidence for insider attacks based on the interaction of sequences of user interactions with resilient architectural behaviors. The algorithm proved better at detecting suspicious activities than other approaches that do not consider architectural behavior, such as unigrams or bigrams, but which are traditionally used for anomaly detection.

One of the challenges faced by the team was measurement (Hard Problem #3)—how to evaluate the anomaly detection approach. Experimental evaluation was hindered by a combination of a lack of real insider threat data and real platforms to run experiments on. This led to the implementation of an open source testbed platform whose design is based on knowledge of real attacks on enterprise software systems. With the simulated large-scale web infrastructure, the team showed (a) the approach could perfectly rank anomalous traces on an architecture with 240 components and 10% anomalous behaviors, (b) it could maintain better than 95% perfect ranking of anomalous traces with up to 40% of simulated traces as anomalies, and (c) while large architectures give better results, even with small architectures (those with 100 nodes), the team were able to rank 60% of the anomalies.

This work is on-going (supported by bridge funding), with a goal of enabling simulation of real advanced persistent threats on the testbed, leading to both improved self-protecting resilience technologies to mitigate threats and also improved measurement techniques, based on testbed use.

- Recognition. In April 2017, David Garlan and Bradley Schmerl, with colleague Mary Shaw, received the CMU School of Computer Science Allen Newell Award for Research Excellence for their work on software architecture and adaptive systems. This is top annual award for research in the School of Computer Science.

- Recognition. The paper, Challenges in Physical Modeling for Adaptation of Cyber-Physical Systems, won the IEEE World Forum on the Internet of Things Best Paper Award, 2016.

Hard Problem #5 (Humans)

Cranor – Security Behavior Observatory. In the past three years, the team has developed a multi-purpose observational resource, the Security Behavior Observatory (SBO), to collect data from Windows home computers. The SBO collects a wide array of system, network, and browser data from over 500 home Windows computer users (who participate as human subjects).

This resource has already led to some surprising discoveries: (1) Participants who are more engaged with computer security and maintenance did not necessarily have better security outcomes, in the sense of failures to patch, evidence of malware, etc. (2) These deficiencies are not necessarily best addressed through automated security measures, which work well for some users but fail others who circumvent those measures. (3) The subcontractor partner developed and validated a Security Behavior Intentions Scale to quickly measure user security behavior. One conclusion is that end-user survey results show that intentions are not a good proxy for actual behavior. (4) More recently, the team has been collecting high quality password data (i.e., not from self-reports or lab studies, but from actual password use), and has shown that partial password reuse (e.g., a common stem) is rampant, which suggests potential security risks that go beyond reuse of entire passwords (ACM CCS 2017).

- Recognition. CHI 2016 Best Paper Award Honorable Mention for Behavior Ever Follows Intention? A Validation of the Security Behavior Intentions Scale (SeBIS). SOUPS 2016 Distinguished Poster Award for Risk Compensation in Home-User Computer Security Behavior: A Mixed-Methods Exploratory Study.

- Recognition. Work done by Cranor and colleagues was cited as providing the scientific rationale for several of the major changes in the NIST password guidelines (see Section 2.1 above.)

Aldrich – Frameworks, APIs, composable models. Developers of plugins for frameworks, such as apps in an app store, require ways to analyze their code to ensure that security properties hold in the context of a highly complex and rich software framework. Many of the challenges in framework use by developers is the complexity of the rules of engagement at the framework APIs, particularly regarding protocols. An empirical study of software developers by this team shows specifically how developers have trouble understanding the complex protocols associated with framework APIs. This leads to problems with productivity, program correctness, and security. An intervention was tested that shows the value to developer quality—and productivity—of adding protocol guidance to developer tooling, with developers working twice as fast and eight times less likely to make a mistake in our intervention.

Breaux – Policy Modeling and Analysis. (See above.)

Aldrich – Race Vulnerabilities. (See above.)

C). Publications

PI(s): Jonathan Aldrich (CMU), Josh Sunshine (CMU) - A Language and Framework for Development of Secure Mobile Applications

- Filipe Militao and Jonathan Aldrich. Composing Interfering Abstract Protocols. 30th International European Conference on Object-Oriented Programming (ECOOP), Rome, Italy, July 17-22, 2016. Presented by Filipe Militao

- Cyrus Omar and Jonathan Aldrich. Programmable Semantic Fragments. 15th International Conference on Generative Programming: Concepts and Experience (GPCE), part of Systems, Programming, Languages and Applications: Software for Humanity (SPLASH), Amsterdam, October 31 – Nov 1, 2016. Presented by Cyrus Omar

- Jonathan Aldrich and Alex Potanin. Naturally Embedded DSLs. In Workshop on Domain-Specific Language Design and Implementation (DSLDI), part of Systems, Programming, Languages and Applications: Software for Humanity (SPLASH), Amsterdam, October 31 – Nov 1, 2016. Presented by Jonathan Aldrich

- Cyrus Omar, Ian Voysey, Michael Hilton, Jonathan Aldrich, Matthew Hammer. Hazelnut: A Bidirectionally Typed Structure Editor Calculus. Symposium on Principles of Programming Languages (POPL), Paris, France, January 15 – 21, 2017. Presented by Cyrus Omar

- Ian Voysey, Cyrus Omar, Matthew Hammer. Running Incomplete Programs. Part of POPL, Off the Beaten Track Workshop (OBT), Paris, France, January 15 – 21, 2017. Presented by Ian Voysey

- Cyrus Omar, Ian Voysey, Michael Hilton, Joshua Sunshine, Claire Le Goues, Jonathan Aldrich, Matthew A. Hammer. Toward Semantic Foundations for Program Editors. 2nd Summit on Advances in Programming Languages (SNAPL), Asilomar, CA, May 7 – 10, 2017. DOI: 10.4230/LIPIcs.SNAPL.2017.23. Presented by Cyrus Omar

- Darya Melicher, Yangqingwei Shi, Alex Potanin, and Jonathan Aldrich. A Capability-Based Module System for Authority Control. In Proc. 31st International European Conference on Object-Oriented Programming (ECOOP), Barcelona, Spain, June 18 – 23, 2017. Presented by Darya Melicher

PI(s): Jonathan Aldrich (CMU), Witawas Srisa-an (University of Nebraska, Lincoln); Researchers: Joshua Sunshine (CMU) - Race Vulnerability Study and Hybrid Race Detection

- Junjie Qian, Witawas Srisa-an, Hong Jiang, Sharad Seth. Exploiting FIFO Scheduler to Improve Parallel Garbage Collection Performance. In Proc. ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environments (VEE), Atlanta, GA, April 2 – 3, 2016. Presented by Junjie Qian

- Michael Coblenz, Joshua Sunshine, Jonathan Aldrich, Brad Myers, Sam Weber, and Forrest Shull. Exploring Language Support for Immutability. In Proc. International Conference on Software Engineering (ICSE), Austin, TX, May 14 – 22, 2016. Presented by Michael Coblenz

- Supat Rattanasuksun, Tingting Yu, Witawas Srisa-an, Gregg Rothermel. RRF: A Race Reproduction Framework for Use in Debugging Process-Level Races. International Symposium on Software Reliability Engineering (ISSRE), Ottawa, Canada, October 23 – 27, 2016. Presented by Supat Rattanasuksun

- Zhiqiang Li, Lichao Sun, Qiben Yan, Witawas Srisa-an, Zhenxiang Chen. DroidClassifier: Efficient Adaptive Mining of Application-Layer Header for Classifying Android Malware. 12th EAI International Conference on Security and Privacy in Communication Networks (SecureComm), Guangzhou, People’s Republic of China, October 10 – 12, 2016. Presented by Zhiqiang Li

- Lichao Sun, Zhiqiang Li, Qiben Yan, and Witawas Srisa-an. SigPID: Significant Permission Identification for Android Malware Detection. 11th International Conference on Malicious and Unwanted Software (MALWARE), Fajardo, Puerto Rico, October 18 – 21, 2016. Presented by Lichao Sun

- Tingting Yu, Witty Srisa-an, and Gregg Rothermel. SimExplorer: An Automated Framework to Support Testing for System-Level Race Conditions. Journal of Software: Testing, Verification and Reliability. Vol 27, 4-5. May 10, 2017. DOI: 10.1002/stvr.1634

- Junjie Qian, Hong Jiang, Witawas Srisa-an, Sharad Seth, Stan Skelton, and Joseph Moore. Energy-efficient I/O Thread Schedulers for NVMe SSDs on NUMA. 17th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid). Madrid, Spain. May 14 – 17, 2017. Presented by Junjie Qian

- Yutaka Tsutano, Shakthi Bachala, Witawas Srisa-an, Gregg Rothermel, Jackson Dinh. An Efficient, Robust, and Scalable Approach for Analyzing Interacting Android Apps. 39th International Conference on Software Engineering (ICSE), Buenos Aires, Argentina, May 20 – 28, 2017. Presented by Yutaka Tsutano

- Michael Coblenz, Whitney Nelsony, Jonathan Aldrich, Brad Myers, Joshua Sunshine. Glacier: Transitive Class Immutability for Java. 39th International Conference on Software Engineering ICSE), Buenos Aires, Argentina, May 20 – 28, 2017. Presented by Michael Coblenz

PI(s): Travis Breaux (CMU), Jianwei Niu (UTSA) - Usable Formal Methods for the Design and Composition of Security and Privacy Policies

- R. Slavin, X. Wang, M.B. Hosseini, W. Hester, R. Krishnan, J. Bhatia, T.D. Breaux, J. Niu. Toward a Framework for Detecting Privacy Policy Violation in Android Application Code. 38th International Conference on Software Engineering (ICSE), Austin, TX, May 14 - 22, 2016. Presented by Rocky Slavin

- Hui Shen, Ram Krishnan, Rocky Slavin, and Jianwei Niu. Sequence Diagram Aided Privacy Policy Specification. IEEE Transactions on Dependable and Secure Computing. Vol 13(3). May, 2016.

- J. Bhatia, M. Evans, S. Wadkar, T.D. Breaux. Automated Extraction of Regulated Information Types using Hyponymy Relations. IEEE 3rd International Workshop on Artificial Intelligence and Requirements Engineering (AIRE), Beijing, China, September 12 – 16, 2016. Presented by Morgan Evans

- H. Hibshi, T. Breaux, M. Riaz, L. Williams. A Grounded Analysis of Experts' Decision-Making During Security Assessments. Journal of Cybersecurity, Oxford Press. Vol 2(2), pgs 147-63. October 4, 2016. DOI: https://doi.org/10.1093/cybsec/tyw010

- H. Hibshi, T.D. Breaux, C. Wagner. Improving Security Requirements Adequacy: An Interval Type 2 Fuzzy Logic Security Assessment System. IEEE Symposium Series on Computational Intelligence (SSCI'16), Athens, Greece, December 6 – 9, 2016. Presented by Hanan Hibshi

PENDING PUBLICATIONS

- H. Hibshi, T. D. Breaux. Reinforcing Security Requirements with Multifactor Quality Measurement. In Submission: 25th IEEE International Requirements Engineering Conference (RE'17), Lisbon, Portugal, September 4 – 8, 2017.

- M. Bokaei Hosseini, T. D. Breaux, J. Niu. Inferring Ontology Fragments from Semantic Typing of Lexical Variants. In Submission: 25th IEEE International Requirements Engineering Conference (RE'17), Lisbon, Portugal, September 4 – 8, 2017.

- M. C. Evans, J. Bhatia, S. Wadkar, T. D. Breaux. An Evaluation of Constituency-based Hyponymy Extraction from Privacy Policies, In Submission: 25th IEEE International Requirements Engineering Conference (RE'17), Lisbon, Portugal, September 4 – 8, 2017.

PI(s): Alessandro Acquisti, Lorrie Cranor, Nicolas Christin, Rahul Telang; Researchers: Alain Forget (CMU), Serge Egelman (Berkeley), and Scott Beach (Univ of Pittsburgh) - USE: User Security Behavior

- Serge Egelman, Marian Harbach, and Eyal Peer. Behavior Ever Follows Intention? A Validation of the Security Behavior Intentions Scale (SeBIS). Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '16), Santa Clara, CA, May 7 – 12, 2016. doi>10.1145/2858036.2858265. Presented by Serge Egelman - Received a CHI '16 Best Paper Honorable Mention award.

- A. Forget, S. Pearman, J. Thomas, A. Acquisti, N. Christin, L.F. Cranor, S. Egelman, M. Harbach, and R. Telang. Do or Do Not, There Is No Try: User Engagement May Not Improve Security Outcomes. In Proceedings of the 12th Symposium on Usable Privacy and Security (SOUPS '16), Denver, CO, June 22-24, 2016. Presented by Alain Forget

- J. Tan, L. Bauer, J. Bonneau, L. F. Cranor, J. Thomas, and B. Ur. Can Unicorns Help Users Compare Crypto Key Fingerprints? Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. Denver, CO, May 6 – 11, 2017. Presented by Joshua Tan

- C. Canfield, B. Fischoff, A. Davis, A. Forget, S. Pearman, and J. Thomas. Replication: Challenges in Using Data Logs to Validate Phishing Detection Ability Metrics. 13th Symposium on Usable Privacy and Security (SOUPS), Santa Clara, CA, July 12 – 14, 2017. Presented by Alain Forget

PI(s): Anupam Datta, Limin Jia; Researchers: Amit Vasudevan (Cylab/CMU), Sagar Chaki (SEI/CMU), Petros Maniatis (Google Inc.) - Secure Composition of Systems and Policies

- Amit Vasudevan, Sagar Chaki, Petros Maniatis, Limin Jia and Anupam Datta. UberSpark: Enforcing Verifiable Object Abstractions for Automated Compositional Security Analysis of a Hypervisor. Proceedings of 25th USENIX Security Symposium, Austin, TX, August 10-12, 2016. Presented by Amit Vasudevan

PI(s): David Garlan (CMU), Jonathan Aldrich (CMU); Researchers: Marwan Abi Antoun (Wayne State University), Sam Malek (University of California, Irvine), Joshua Sunshine (CMU), Bradley Schmerl (CMU) - Science of Secure Frameworks

- Eric Yuan and Sam Malek. "Mining Software Component Interactions to Detect Security Threats at the Architectural Level." In proceedings of the 13th Working IEEE/IFIP Conference on Software Architecture (WICSA 2016), Venice, Italy, April 5 – 8, 2016. Presented by Eric Yuan

- Nariman Mirzaei, Joshua Garcia, Hamid Bagheri, Alireza Sadeghi, and Sam Malek. "Reducing Combinatorics in GUI Testing of Android Applications." In proceedings of the 38th International Conference on Software Engineering (ICSE 2016), Austin, TX, May 14 – 22, 2016. (19% acceptance rate) Presented by Joshua Garcia

- Waqar Ahmad, Christian Kastner, Joshua Sunshine, and Jonathan Aldrich. Inter-app Communication in Android: Developer Challenges. In Proc. International Conference on Mining Software Repositories (MSR), Austin, TX, May 14 – 16, 2016. Presented by Waqar Ahmad

- Hamid Bagheri, Alireza Sadeghi, Reyhaneh Jabbarvand Behrouz, and Sam Malek. Practical,Formal Synthesis and Autonomic Enforcement of Security Policies for Android. In proceedings of the 46th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN 2016), Toulouse, France, June 28 – July 1, 2016. (20% acceptance rate) Presented by Hamid Bagheri

- Javier Camara, David Garlan, Gabriel A. Moreno and Bradley Schmerl. Evaluating Trade-Offs of Human Involvement in Self-Adaptive Systems. In Ivan Mistrik, Nour Ali, John Grundy, Rick Kazman and Bradley Schmerl editors, Managing Trade-Offs in Self-Adaptive Systems, Elsevier, September 2016.

- Esther Wang and Jonathan Aldrich. Capability Safe Reflection for the Wyvern Language. In Proceedings of the Workshop on Meta-Programming Techniques and Reflection (META), part of SPLASH, Amsterdam, Netherlands, October 30 – November 4, 2016. Presented by Esther Wang

- Hamid Bagheri and Sam Malek. Titanium: Efficient Analysis of Evolving Alloy Specifications. 24th ACM SIGSOFT International Symposium on the Foundations of Software Engineering (FSE), Seattle, WA, November 13 – 18, 2016. Presented by Hamid Bagheri

- Bradley Schmerl, Jeffrey Gennari, Alireza Sadeghi, Hamid Bagheri, Sam Malek, Javier Camara and David Garlan. Architecture Modeling and Analysis ofSecurity in Android Systems. In Proceedings of the 10th European Conference on Software Architecture (ECSA 2016), Vol. 9839 of Lecture Notes in Computer Science, Springer, Copenhagen, Denmark, November 28 - December 2, 2016. Presented by Bradley Schmerl

- Mahmoud Hammad, Hamid Bagheri, and Sam Malek. DELDroid: Determination and Enforcement of Least-Privilege Architecture in Android. IEEE International Conference on Software Architecture (ICSA 2017), Gothenburg, Sweden, April 3 – 7, 2017. Presented by Hamid Bagheri

- Alireza Sadeghi, Hamid Bagheri, Joshua Garcia, and Sam Malek. A Taxonomy and Qualitative Comparison of Program Analysis Techniques for Security Assessment of Android Software. Published online October 6, 2016: http://ieeexplore.ieee.org/document/7583740/ IEEE Transactions on Software Engineering, Vol: 43(6), June 1, 2017. DOI: 10.1109/TSE.2016.2615307

- Alireza Sadeghi, Naeem Esfahani, and Sam Malek. Ensuring the Consistency of Adaptation through Inter- and Intra-Component Dependency Analysis. Journal ACM Transactions on Software Engineering and Methodology (TOSEM). Vol 26(1), Article 2. July 12, 2017. https://doi.org/10.1145/3063385

PI(s): David Garlan, Bradley Schmerl - Multi-Model Run-Time security analysis

- Hemank Lamba, Thomas J. Glazier, Javier Camara, Bradley Schmerl, David Garlan and Jurgen Pfeffer. Model-based cluster analysis for identifying suspicious activity sequences in software. 3rd International Workshop on Security and Privacy Analytics (IWSPA), co-located with ACM CODASPY. Scottsdale, AZ, March 23 – 25, 2017. Presented by Hemank Lamba

PI(s): Christian Kastner, Juergen Pfeffer - Highly Configurable Systems

- F. Medeiros, C. Kastner, M. Ribeiro, R. Gheyi, and S. Apel. A Comparison of 10 Sampling Algorithms for Configurable Systems. In Proceedings of the 38th International Conference on Software Engineering (ICSE), New York, NY: ACM Press. Austin, TX, May 14 - 22, 2016. DOI: 10.1145/2884781.2884793. Presented by Flavio Medeiros

- Jens Meinicke, Chu-Pan Wong, Christian Kastner, Thomas Thum, Gunter Saake. On Essential Configuration Complexity: Measuring Interactions In Highly-Configurable Systems. In Proceedings Int'l Conf. Automated Software Engineering (ASE). Singapore, Singapore, September 3 – 7, 2016. Presented by Jens Meinicke

- G. Ferreira, M. Malik, C. Kastner, J. Pfeffer, and S. Apel. Do #ifdefs Influence the Occurrence of Vulnerabilities? An Empirical Study of the Linux Kernel. In Proceedings of the 20th International Software Product Line Conference (SPLC), New York, NY: ACM Press. Beijing, China, September 19 – 23, 2016. Presented by Gabriel Ferreira

- C. Bogart, C. Kastner, J. Herbsleb, and F. Thung. How to Break an API: Cost Negotiation and Community Values in Three Software Ecosystems. In Proceedings of the ACM SIGSOFT Symposium on the Foundations of Software Engineering (FSE), New York, NY: ACM Press, November 13 – 18, 2016. Presented by Christopher Bogart

- J. Al-Kofahi, T. Nguyen, and C. Kastner. Escaping AutoHell: A Vision For Automated Analysis and Migration of Autotools Build Systems. In Proceedings of the 4th International Workshop on Release Engineering (Releng), New York, NY: ACM Press. Seattle, WA, November 18, 2016. Presented by Jafar Al-Kofahi

- M. Meng, J. Meinicke, C. Wong, E. Walkingshaw, and C. Kastner. A Choice of Variational Stacks: Exploring Variational Data Structures. In Proceedings of the 11st Int'l Workshop on Variability Modelling of Software-Intensive Systems (VaMoS), Eindhoven, The Netherlands, February 1 – 3, 2017. Presented by Eric Walkingshaw

- F. Medeiros, M. Ribeiro, R. Gheyi, S. Apel, C. Kastner, B. Ferreira, L. Carvalho, and B. Fonseca. Discipline Matters: Refactoring of Preprocessor Directives in the #ifdef Hell. IEEE Transactions on Software Engineering (TSE), March 28, 2017. DOI: 10.1109/TSE.2017.2688333. Presented by Flavio Medeiros

- Gabriel Ferreira. 2017. Software Certification in Practice: How Are Standards Being Applied? Proceedings of the 2017 IEEE/ACM 39th International Conference on Software Engineering Companion (ICSE), Buenos Aires, Argentina, May 20 – 28, 2017. Presented by Gabriel Ferreira

- (ACCEPTED) - R. Goyal, G. Ferreira, C. Kastner, and J. Herbsleb. Identifying Unusual Commits on GitHub. Journal of Software: Evolution and Process (JSEP), 2017.

PI(s): Andre Platzer; Researchers: Dexter Kozen (Cornell) - Security Reasoning for Distributed Systems with Uncertainty

N/A this year

D). Community Engagements

PI(s): Jonathan Aldrich (CMU), Josh Sunshine (CMU) - A Language and Framework for Development of Secure Mobile Applications

N/A this year

PI(s): Jonathan Aldrich (CMU), Witawas Srisa-an (University of Nebraska, Lincoln)

Researchers: Joshua Sunshine (CMU) - Race Vulnerability Study and Hybrid Race Detection

N/A this year

PI(s): Travis Breaux (CMU), Jianwei Niu (UTSA) - Usable Formal Methods for the Design and Composition of Security and Privacy Policies

- CMU and UTSA published a website for mobile app developers and regulators to help detect and repair potential privacy violations in mobile app source code. The website is located: http://polidroid.org/

PI(s): Alessandro Acquisti, Lorrie Cranor, Nicolas Christin, Rahul Telang; Researchers: Alain Forget (CMU), Serge Egelman (Berkeley), and Scott Beach (Univ of Pittsburgh) - USE: User Security Behavior

N/A this year

PI(s): Anupam Datta, Limin Jia; Researchers: Amit Vasudevan (Cylab/CMU), Sagar Chaki (SEI/CMU), Petros Maniatis (Google Inc.) - Secure Composition of Systems and Policies

- We have open-sourced and released the first academic prototype of UberSpark. The development and evolution of the framework continues at http://uberspark.org

- Our hope, and ultimate vision with UberSpark is to foster the growth of the next generation of systems developers who can design and develop low-level verifiable and trustworthy systems with a focus on verifiability, development compatibility and performance.

PI(s): David Garlan (CMU), Jonathan Aldrich (CMU); Researchers: Marwan Abi Antoun (Wayne State University), Sam Malek (University of California, Irvine), Joshua Sunshine (CMU), Bradley Schmerl (CMU) - Science of Secure Frameworks

N/A this year

PI(s): David Garlan, Bradley Schmerl - Multi-Model Run-Time security analysis

- We engaged with a new group from the NSA to start a new resilience subtask.

PI(s): Christian Kastner, Juergen Pfeffer - Highly Configurable Systems

N/A this year

PI(s): Andre Platzer; Researchers: Dexter Kozen (Cornell) - Security Reasoning for Distributed Systems with Uncertainty

N/A this year

E). Educational

PI(s): Jonathan Aldrich (CMU), Josh Sunshine (CMU) - A Language and Framework for Development of Secure Mobile Applications

N/A this year

PI(s): Jonathan Aldrich (CMU), Witawas Srisa-an (University of Nebraska, Lincoln); Researchers: Joshua Sunshine (CMU) - Race Vulnerability Study and Hybrid Race Detection

N/A this year

PI(s): Travis Breaux (CMU), Jianwei Niu (UTSA) - Usable Formal Methods for the Design and Composition of Security and Privacy Policies

N/A this year

PI(s): Alessandro Acquisti, Lorrie Cranor, Nicolas Christin, Rahul Telang; Researchers: Alain Forget (CMU), Serge Egelman (Berkeley), and Scott Beach (Univ of Pittsburgh) - USE: User Security Behavior

N/A this year

PI(s): Anupam Datta, Limin Jia; Researchers: Amit Vasudevan (Cylab/CMU), Sagar Chaki (SEI/CMU), Petros Maniatis (Google Inc.) - Secure Composition of Systems and Policies

N/A this year

PI(s): David Garlan (CMU), Jonathan Aldrich (CMU); Researchers: Marwan Abi Antoun (Wayne State University), Sam Malek (University of California, Irvine), Joshua Sunshine (CMU), Bradley Schmerl (CMU) - Science of Secure Frameworks

- Ebrahim Khalaj completed the requirements for the M.S. degree in Dec. 2017, and is on target to graduate with a Ph.D. by May or Aug. 2017.

PI(s): David Garlan, Bradley Schmerl - Multi-Model Run-Time security analysis

We have engaged a team from the Master of Information Technology Strategy program for development of the the exemplar testbed as part of their practicum project, a Masters of Softwre Engineering student in an independent study for formal modeling of advanced persistent threats, and an undergraduate from Duke University to implement an instance of a simulated advanced persistent threat scenario on the exemplar testbed.

PI(s): Christian Kastner, Juergen Pfeffer - Highly Configurable Systems

N/A this year

PI(s): Andre Platzer; Researchers: Dexter Kozen (Cornell) - Security Reasoning for Distributed Systems with Uncertainty

N/A this year

- NSA Program Manager

- Scalability and Composability

- Policy-Governed Secure Collaboration

- Metrics

- Resilient Architectures

- Human Behavior

- CMU

- A Language and Framework for Development of Secure Mobile Applications

- Epistemic Models for Security

- Geo-Temporal Characterization of Security Threats

- Highly Configurable Systems

- Multi-Model Run-Time Security Analysis

- Race Vulnerability Study and Hybrid Race Detection

- Science of Secure Frameworks

- Secure Composition of Systems and Policies

- Security Reasoning for Distributed Systems with Uncertainty

- Usable Formal Methods for the Design and Composition of Security and Privacy Policies

- USE: User Security Behavior

- FY14-18