Hot SoS 2016 Papers, Posters, and Tutorials

The 2016 Symposium and Bootcamp on the Science of Security (HotSoS) was held April 19-21 at Carnegie Mellon University in Pittsburgh, PA. Nine papers representing the work of forty-seven researchers and co-authors, two tutorials, and 14 posters were presented. This bibliography provides the abstract and author information for each. Papers are cited first, then tutorials, and finally posters in the order they were presented. While the conference proceedings will soon be available from the ACM Digital Library, this bibliography includes digital object identifiers that will allow the reader to find the document prior to publication. The names of the authors who presented the work at Hot SoS are underlined. More information about Hot SoS 2016 can be found on the CPS-VO web page at: http://cps-vo.org/node/24119

Hui Lin, Homa Alemzadeh, Daniel Chen, Zbigniew Kalbarczyk, Ravishankar Iyer; “Safety-critical Cyber-physical Attacks: Analysis, Detection, and Mitigation.” DOI:http://dx.doi.org/10.1145/2898375.2898391

Abstract: Today’s cyber-physical systems (CPSs) can have very different characteristics in terms of control algorithms, configurations, underlying infrastructure, communication protocols, and real-time requirements. Despite these variations, they all face the threat of malicious attacks that exploit the vulnerabilities in the cyber domain as footholds to introduce safety violations in the physical processes. In this paper, we focus on a class of attacks that impact the physical processes without introducing anomalies in the cyber domain. We present the common challenges in detecting this type of attack in the contexts of two very different CPSs (i.e., power grids and surgical robots). In addition, we present a general principle for detecting such cyber-physical attacks, which combines the knowledge of both cyber and physical domains to estimate the adverse consequences of malicious activities in a timely manner.

Keywords: (not provided)

Aron Laszka, Waseem Abbas, Shankar Sastry, Yevgeniy Vorobeychik, Xenofon Koutsoukos; “Optimal Thresholds for Intrusion Detection Systems.” DOI:http://dx.doi.org/10.1145/2898375.2898399

Abstract: Intrusion-detection systems can play a key role in protecting sensitive computer systems since they give defenders a chance to detect and mitigate attacks before they could cause substantial losses. However, an oversensitive intrusion-detection system, which produces a large number of false alarms, imposes prohibitively high operational costs on a defender since alarms need to be manually investigated. Thus, defenders have to strike the right balance between maximizing security and minimizing costs. Optimizing the sensitivity of intrusion detection systems is especially challenging in the case when multiple interdependent computer systems have to be defended against a strategic attacker, who can target computer systems in order to maximize losses and minimize the probability of detection. We model this scenario as an attacker-defender security game and study the problem.

Keywords: Intrusion detection system; game theory; economics of security; Stackelberg equilibrium; computational complexity
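The trade-off this abstract describes, between false-alarm cost and undetected-attack loss, can be illustrated with a much simpler model than the paper's. The sketch below is not the authors' attacker-defender game: it assumes a single system, a non-strategic attacker with a fixed attack probability, and a hypothetical table of operating points (`OperatingPoint`, `expected_cost`, and `best_threshold` are illustrative names, and all numbers are made up).

```python
from typing import List, NamedTuple


class OperatingPoint(NamedTuple):
    """One candidate detector setting (all values are hypothetical)."""
    threshold: float
    false_alarm_rate: float   # probability of an alarm when there is no attack
    detection_rate: float     # probability of an alarm when there is an attack


def expected_cost(point: OperatingPoint, alarm_cost: float,
                  attack_prob: float, attack_loss: float) -> float:
    """Expected cost per period: false-alarm investigations plus missed attacks."""
    investigation = point.false_alarm_rate * alarm_cost
    missed = attack_prob * (1.0 - point.detection_rate) * attack_loss
    return investigation + missed


def best_threshold(points: List[OperatingPoint], alarm_cost: float,
                   attack_prob: float, attack_loss: float) -> OperatingPoint:
    """Pick the operating point with the lowest expected cost."""
    return min(points, key=lambda p: expected_cost(p, alarm_cost, attack_prob, attack_loss))


# Assumed operating points: a lower threshold catches more attacks but raises more false alarms.
candidates = [
    OperatingPoint(threshold=0.2, false_alarm_rate=0.30, detection_rate=0.95),
    OperatingPoint(threshold=0.5, false_alarm_rate=0.10, detection_rate=0.80),
    OperatingPoint(threshold=0.8, false_alarm_rate=0.01, detection_rate=0.40),
]
best = best_threshold(candidates, alarm_cost=10.0, attack_prob=0.1, attack_loss=100.0)
```

With these assumed costs, neither the most sensitive nor the least sensitive setting wins. The paper's setting is harder because a strategic attacker chooses which of several interdependent systems to target.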

Atul Bohara, Uttam Thakore, and William Sanders; “Intrusion Detection in Enterprise Systems by Combining and Clustering Diverse Monitor Data.” DOI:http://dx.doi.org/10.1145/2898375.2898400

Abstract: Intrusion detection using multiple security devices has received much attention recently. The large volume of information generated by these tools, however, increases the burden on both computing resources and security administrators. Moreover, attack detection does not improve as expected if these tools work without any coordination.

In this work, we propose a simple method to join information generated by security monitors with diverse data formats. We present a novel intrusion detection technique that uses unsupervised clustering algorithms to identify malicious behavior within large volumes of diverse security monitor data. First, we extract a set of features from network-level and host-level security logs that aid in detecting malicious host behavior and flooding-based network attacks in an enterprise network system. We then apply clustering algorithms to the separate and joined logs and use statistical tools to identify anomalous usage behaviors captured by the logs. We evaluate our approach on an enterprise network data set, which contains network and host activity logs. Our approach correctly identifies and prioritizes anomalous behaviors in the logs by their likelihood of maliciousness. By combining network and host logs, we are able to detect malicious behavior that cannot be detected by either log alone.

Keywords: Security, Monitoring, Intrusion Detection, Anomaly Detection, Machine Learning, Clustering

Jeffrey Carver, Morgan Burcham, Sedef Akinli Kocak, Ayse Bener, Michael Felderer, Matthias Gander, Jason King, Jouni Markkula, Markku Oivo, Clemens Sauerwein, Laurie Williams; “Establishing a Baseline for Measuring Advancement in the Science of Security – an Analysis of the 2015 IEEE Security & Privacy Proceedings.” DOI:http://dx.doi.org/10.1145/2898375.2898380

Abstract: In this paper we aim to establish a baseline of the state of scientific work in security through the analysis of indicators of scientific research as reported in the papers from the 2015 IEEE Symposium on Security and Privacy.

To conduct this analysis, we developed a series of rubrics to determine the completeness of the papers relative to the type of evaluation used (e.g. case study, experiment, proof). Our findings showed that while papers are generally easy to read, they often do not explicitly document some key information like the research objectives, the process for choosing the cases to include in the studies, and the threats to validity. We hope that this initial analysis will serve as a baseline against which we can measure the advancement of the science of security.

Keywords: Science of Security, Literature Review

Bradley Potteiger, Goncalo Martins, Xenofon Koutsoukos; “Software and Attack Centric Integrated Threat Modeling for Quantitative Risk Assessment.” DOI:http://dx.doi.org/10.1145/2898375.2898390

Abstract: Threat modeling involves understanding the complexity of the system and identifying all of the possible threats, regardless of whether or not they can be exploited. Proper identification of threats and appropriate selection of countermeasures reduces the ability of attackers to misuse the system.

This paper presents a quantitative, integrated threat modeling approach that merges software and attack centric threat modeling techniques. The threat model is composed of a system model representing the physical and network infrastructure layout, as well as a component model illustrating component specific threats. Component attack trees allow for modeling specific component contained attack vectors, while system attack graphs illustrate multi-component, multi-step attack vectors across the system. The Common Vulnerability Scoring System (CVSS) is leveraged to provide a standardized method of quantifying the low level vulnerabilities in the attack trees. As a case study, a railway communication network is used, and the respective results using a threat modeling software tool are presented.

Keywords: Quantitative risk assessment, threat modeling, cyber-physical systems
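As a rough illustration of how leaf-level CVSS scores might be rolled up through an attack tree, the sketch below makes several assumptions that are not taken from the paper: each leaf's CVSS base score is divided by 10 and treated as a likelihood proxy, AND gates multiply their children, and OR gates combine children as independent alternatives. The `Node` class, the gate names, and the example scores are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    gate: str = "LEAF"            # "LEAF", "AND", or "OR"
    cvss: float = 0.0             # CVSS base score (0.0-10.0); used by leaves only
    children: List["Node"] = field(default_factory=list)


def likelihood(node: Node) -> float:
    """Aggregate a likelihood proxy bottom-up (assumed rules, see lead-in)."""
    if node.gate == "LEAF":
        return node.cvss / 10.0   # assumption: normalized score as likelihood proxy
    values = [likelihood(child) for child in node.children]
    if node.gate == "AND":        # every step must succeed
        result = 1.0
        for v in values:
            result *= v
        return result
    if node.gate == "OR":         # any one alternative suffices
        all_fail = 1.0
        for v in values:
            all_fail *= 1.0 - v
        return 1.0 - all_fail
    raise ValueError(f"unknown gate: {node.gate}")


# Hypothetical component attack tree with made-up scores.
tree = Node("compromise component", "OR", children=[
    Node("exploit exposed service", cvss=5.0),
    Node("chain two weaknesses", "AND", children=[
        Node("bypass authentication", cvss=8.0),
        Node("escalate privileges", cvss=5.0),
    ]),
])
root_likelihood = likelihood(tree)
```

Other aggregation rules (for example, taking the maximum over OR children) are equally defensible; the choice here is only meant to make the tree structure concrete.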

Antonio Roque, Kevin Bush, Christopher Degni; “Security is about Control: Insights from Cybernetics.” DOI:http://dx.doi.org/10.1145/2898375.2898379

Abstract: Cybernetic closed loop regulators are used to model sociotechnical systems in adversarial contexts. Cybernetic principles regarding these idealized control loops are applied to show how the incompleteness of system models enables system exploitation. We consider abstractions as a case study of model incompleteness, and we characterize the ways that attackers and defenders interact in such a formalism. We end by arguing that the science of security is most like a military science, whose foundations are analytical and generative rather than normative.

Keywords: Computer Security, Cybernetics, Control Systems

Zhenqi Huang, Yu Wang, Sayan Mitra, Geir Dullerud; “Controller Synthesis for Linear Dynamical Systems with Adversaries.” DOI:http://dx.doi.org/10.1145/2898375.2898378

Abstract: We present a controller synthesis algorithm for a reach-avoid problem in the presence of adversaries. Our model of the adversary abstractly captures typical malicious attacks envisioned on cyber-physical systems such as sensor spoofing, controller corruption, and actuator intrusion. After formulating the problem in a general setting, we present a sound and complete algorithm for the case with linear dynamics and an adversary with a budget on the total L2-norm of its actions. The algorithm relies on a result from linear control theory that enables us to decompose and compute the reachable states of the system in terms of a symbolic simulation of the adversary-free dynamics and the total uncertainty induced by the adversary. With this decomposition, the synthesis problem eliminates the universal quantifier on the adversary's choices and the symbolic controller actions can be effectively solved using an SMT solver. The constraints induced by the adversary are computed by solving second-order cone programs. The algorithm is later extended to synthesize state-dependent controllers and to generate attacks for the adversary. We present preliminary experimental results that show the effectiveness of this approach on several example problems.

Keywords: Cyber-physical security, constraint-based synthesis, controller synthesis

Carl Pearson, Allaire Welk, William Boettcher, Roger Mayer, Sean Streck, Joseph Simons-Rudolph, Christopher Mayhorn; “Differences in Trust between Human and Automated Decision Aids.” DOI:http://dx.doi.org/10.1145/2898375.2898385

Abstract: Humans can easily find themselves in high cost situations where they must choose between suggestions made by an automated decision aid and a conflicting human decision aid. Previous research indicates that humans often rely on automation or other humans, but not both simultaneously. Expanding on previous work conducted by Lyons and Stokes (2012), the current experiment measures how trust in automated or human decision aids differs along with perceived risk and workload. The simulated task required 126 participants to choose the safest route for a military convoy; they were presented with conflicting information from an automated tool and a human. Results demonstrated that as workload increased, trust in automation decreased. As the perceived risk increased, trust in the human decision aid increased. Individual differences in dispositional trust correlated with an increased trust in both decision aids. These findings can be used to inform training programs for operators who may receive information from human and automated sources. Examples of this context include: air traffic control, aviation, and signals intelligence.

Keywords: Trust; reliance; automation; decision-making; risk; workload; strain

Phuong Cao, Eric Badger, Zbigniew Kalbarczyk, Ravishankar Iyer; “A Framework for Generation, Replay and Analysis of Real-World Attack Variants.” DOI:http://dx.doi.org/10.1145/2898375.2898392

Abstract: This paper presents a framework for (1) generating variants of known attacks, (2) replaying attack variants in an isolated environment and, (3) validating detection capabilities of attack detection techniques against the variants. Our framework facilitates reproducible security experiments. We generated 648 variants of three real-world attacks (observed at the National Center for Supercomputing Applications at the University of Illinois). Our experiment showed the value of generating attack variants by quantifying the detection capabilities of three detection methods: a signature-based detection technique, an anomaly-based detection technique, and a probabilistic graphical model-based technique.

Keywords: (not provided)

Tutorial:

Hanan Hibshi; “Systematic Analysis of Qualitative Data in Security.” DOI:http://dx.doi.org/10.1145/2898375.2898387

Abstract: This tutorial will introduce participants to Grounded Theory, which is a qualitative framework to discover new theory from an empirical analysis of data. This form of analysis is particularly useful when analyzing text, audio or video artifacts that lack structure, but contain rich descriptions. We will frame Grounded Theory in the context of qualitative methods and case studies, which complement quantitative methods, such as controlled experiments and simulations. We will contrast the approaches developed by Glaser and Strauss, and introduce coding theory – the most prominent qualitative method for performing analysis to discover Grounded Theory. Topics include coding frames, first- and second-cycle coding, and saturation. We will use examples from security interview scripts to teach participants: developing a coding frame, coding a source document to discover relationships in the data, developing heuristics to resolve ambiguities between codes, and performing second-cycle coding to discover relationships within categories. Then, participants will learn how to discover theory from coded data. Participants will further learn about inter-rater reliability statistics, including Cohen's and Fleiss' Kappa, Krippendorf's Alpha, and Vanbelle's Index. Finally, we will review how to present Grounded Theory results in publications, including how to describe the methodology, report observations, and describe threats to validity.

Keywords: Grounded theory; qualitative; security analysis
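Of the inter-rater reliability statistics the tutorial abstract lists, Cohen's Kappa is the simplest to compute by hand. The following is a minimal sketch for two raters who each assign exactly one code per excerpt; the function name `cohens_kappa` and the example codes are illustrative and do not come from the tutorial.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one code per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items that received the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over codes of the product of marginal proportions.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical code for every item
    return (observed - expected) / (1.0 - expected)


# Hypothetical first-cycle codes assigned to six interview excerpts.
codes_a = ["threat", "threat", "control", "control", "threat", "control"]
codes_b = ["threat", "control", "control", "control", "threat", "control"]
kappa = cohens_kappa(codes_a, codes_b)
```

Here the raters agree on five of six excerpts (about 83%), but half of that agreement would be expected by chance given how often each rater uses each code, so kappa comes out lower, at about 0.67.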

Tutorial:

Tao Xie and William Enck; “Text Analytics for Security.” DOI:http://dx.doi.org/10.1145/2898375.2898397

Abstract: Computing systems that make security decisions often fail to take into account human expectations. This failure occurs because human expectations are typically drawn from textual sources (e.g., mobile application descriptions and requirements documents) and are hard to extract and codify. Recently, researchers in security and software engineering have begun using text analytics to create initial models of human expectation. In this tutorial, we provide an introduction to popular techniques and tools of natural language processing (NLP) and text mining, and share our experiences in applying text analytics to security problems. We also highlight the current challenges of applying these techniques and tools for addressing security problems. We conclude the tutorial with discussion of future research directions.

Keywords: Security; human expectations; text analytics

Poster:

Marwan Abi-Antoun, Ebrahim Khalaj, Radu Vanciu, Ahmad Moghimi; “Abstract Runtime Structure for Reasoning about Security.” DOI:http://dx.doi.org/10.1145/2898375.2898377

Abstract: We propose an interactive approach where analysts reason about the security of a system using an abstraction of its runtime structure, as opposed to looking at the code. They interactively refine a hierarchical object graph, set security properties on abstract objects or edges, query the graph, and investigate the results by studying highlighted objects or edges or tracing to the code. Behind the scenes, an inference analysis and an extraction analysis maintain the soundness of the graph with respect to the code.

Keywords: object graphs; ownership type inference; graph query

Poster:

Clark Barrett, Cesare Tinelli, Morgan Deters, Tianyi Liang, Andrew Reynolds, Nestan Tsiskaridze; “Efficient Solving of String Constraints for Security Analysis.” DOI:http://dx.doi.org/10.1145/2898375.2898393

Abstract: The security of software is increasingly critical for consumer confidence, protection of privacy, protection of intellectual property, and even national security. As threats to software security have become more sophisticated, so too have the techniques developed to ensure security. One basic technique that has become a fundamental tool in static security analysis is symbolic execution. There are now a number of successful approaches that rely on symbolic methods to reduce security questions about programs to constraint satisfaction problems in some formal logic (e.g., [4, 5, 7, 16]). Those problems are then solved automatically by specialized reasoners for the target logic. The solutions found are then used to automatically construct security exploits in the original programs or, more generally, to identify security vulnerabilities.

Keywords: String solving, SMT, automated security analysis

Poster:

Susan G. Campbell, Lelyn D. Saner, Michael F. Bunting; “Characterizing Cybersecurity Jobs: Applying the Cyber Aptitude and Talent Assessment Framework.” DOI:http://dx.doi.org/10.1145/2898375.2898394

Abstract: Characterizing what makes cybersecurity professions difficult involves several components, including specifying the cognitive and functional requirements for performing job-related tasks. Many frameworks that have been proposed are focused on functional requirements of cyber work roles, including the knowledge, skills, and abilities associated with them. In contrast, we have proposed a framework for classifying cybersecurity jobs according to the cognitive demands of each job and for matching applicants to jobs based on their aptitudes for key cognitive skills (e.g., responding to network activity in real-time). In this phase of research, we are investigating several cybersecurity jobs (such as operators vs. analysts), converting the high-level functional tasks of each job into elementary tasks, in order to determine what cognitive requirements distinguish the jobs. We will then examine how the models of cognitive demands by job can be used to inform the designs of aptitude tests for different kinds of jobs. In this poster, we will describe our framework in more detail and how it can be applied toward matching people with the jobs that fit them best.

Keywords: Aptitude testing; job analysis; task analysis; cybersecurity workforce; selection

Poster:

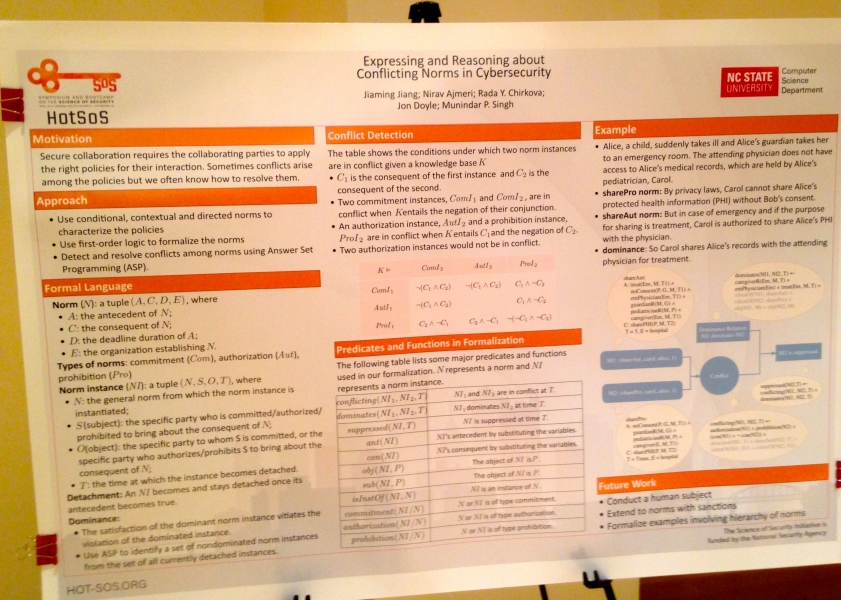

Jiaming Jiang, Nirav Ajmeri, Rada Y. Chirkova, Jon Doyle, Munindar P. Singh; “Expressing and Reasoning about Conflicting Norms in Cybersecurity.” DOI:http://dx.doi.org/10.1145/2898375.2898395

Abstract: Secure collaboration requires the collaborating parties to apply the right policies for their interaction. We adopt a notion of conditional, directed norms as a way to capture the standards of correctness for a collaboration. How can we handle conflicting norms? We describe an approach based on knowledge of what norm dominates what norm in what situation. Our approach adapts answer-set programming to compute stable sets of norms with respect to their computed conflicts and dominance. It assesses agent compliance with respect to those stable sets. We demonstrate our approach on a healthcare scenario.

Keywords: Normative System, Dominance Relation, Norm

Poster:

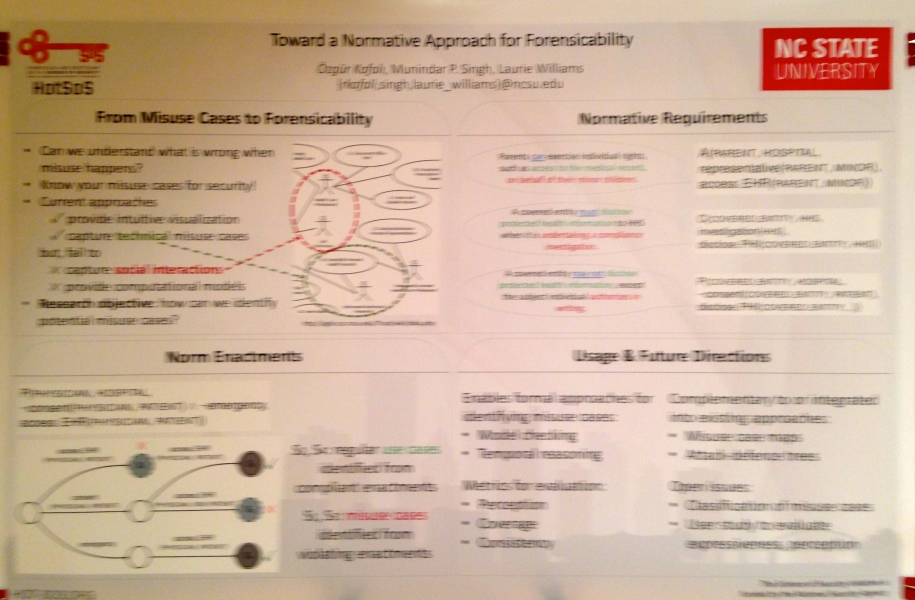

Özgür Kafalı, Munindar P. Singh, and Laurie Williams; “Toward a Normative Approach for Forensicability.” DOI:http://dx.doi.org/10.1145/2898375.2898386

Abstract: Sociotechnical systems (STSs), where users interact with software components, support automated logging, i.e., what a user has performed in the system. However, most systems do not implement automated processes for inspecting the logs when a misuse happens. Deciding what needs to be logged is crucial as excessive amounts of logs might be overwhelming for human analysts to inspect. The goal of this research is to aid software practitioners to implement automated forensic logging by providing a systematic method of using attackers' malicious intentions to decide what needs to be logged. We propose Lokma: a normative framework to construct logging rules for forensic knowledge. We describe the general forensic process of Lokma, and discuss related directions.

Keywords: Forensic logging, security, sociotechnical systems, requirements, social norms

Poster:

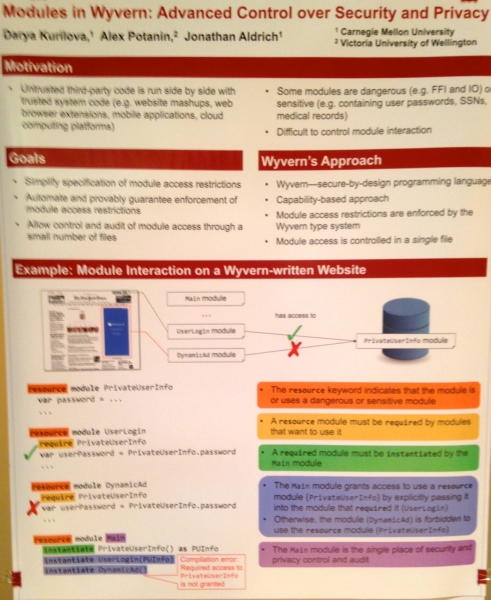

Darya Kurilova, Alex Potanin, Jonathan Aldrich; “Modules in Wyvern: Advanced Control over Security and Privacy.” DOI:http://dx.doi.org/10.1145/2898375.2898376

Abstract: In today’s systems, restricting the authority of untrusted code is difficult because, by default, code has the same authority as the user running it. Object capabilities are a promising way to implement the principle of least authority, but being too low-level and fine-grained, take away many conveniences provided by module systems. We present a module system design that is capability-safe, yet preserves most of the convenience of conventional module systems. We demonstrate how to ensure key security and privacy properties of a program as a mode of use of our module system. Our authority safety result formally captures the role of mutable state in capability-based systems and uses a novel non-transitive notion of authority, which allows us to reason about authority restriction: the encapsulation of a stronger capability inside a weaker one.

Keywords: Language-based security; capabilities; modules; authority

Poster:

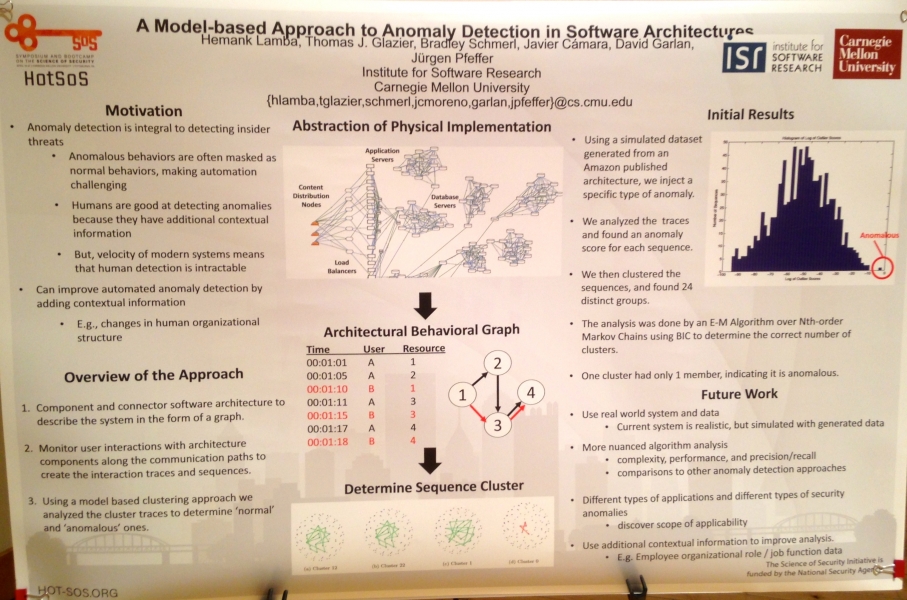

Hemank Lamba, Thomas J. Glazier, Bradley Schmerl, Javier Cámara, David Garlan, Jürgen Pfeffer; “A Model-based Approach to Anomaly Detection in Software Architectures.” DOI:http://dx.doi.org/10.1145/2898375.2898401

Abstract: In an organization, the interactions users have with software leave patterns or traces of the parts of the systems accessed. These interactions can be associated with the underlying software architecture. The first step in detecting problems like insider threat is to detect those traces that are anomalous. Here, we propose a method to find anomalous users leveraging these interaction traces, categorized by user roles. We propose a model-based approach to cluster user sequences and find outliers. We show that the approach works on a simulation of a large-scale system based on an Amazon Web application style.

Keywords: anomaly detection, model-based graph clustering

Poster:

Jian Lou and Yevgeniy Vorobeychik; “Decentralization and Security in Dynamic Traffic Light Control.” DOI:http://dx.doi.org/10.1145/2898375.2898384

Abstract: Complex traffic networks include a number of controlled intersections, and, commonly, multiple districts or municipalities. The result is that the overall traffic control problem is extremely complex computationally. Moreover, given that different municipalities may have distinct, non-aligned, interests, traffic light controller design is inherently decentralized, a consideration that is almost entirely absent from related literature. Both complexity and decentralization have great bearing both on the quality of the traffic network overall, as well as on its security. We consider both of these issues in a dynamic traffic network. First, we propose an effective local search algorithm to efficiently design system-wide control logic for a collection of intersections. Second, we propose a game theoretic (Stackelberg game) model of traffic network security in which an attacker can deploy denial-of-service attacks on sensors, and develop a resilient control algorithm to mitigate such threats. Finally, we propose a game theoretic model of decentralization, and investigate this model both in the context of baseline traffic network design, as well as resilient design accounting for attacks. Our methods are implemented and evaluated using a simple traffic network scenario in SUMO.

Keywords: Traffic Control System; Game Theoretical Model; Decentralization and Security; Simulation-Based Method

Poster:

Momin M. Malik, Jürgen Pfeffer, Gabriel Ferreira, Christian Kästner; “Visualizing the Variational Callgraph of the Linux Kernel: An Approach for Reasoning about Dependencies.” DOI:http://dx.doi.org/10.1145/2898375.2898398

Abstract: Software developers use #ifdef statements to support code configurability, allowing software product diversification. But because functions can be in many execution paths that depend on complex combinations of configuration options, the introduction of an #ifdef for a given purpose (such as adding a new feature to a program) can enable unintended function calls, which can be a source of vulnerabilities. Part of the difficulty lies in maintaining mental models of all dependencies. We propose analytic visualizations of the variational callgraph to capture dependencies across configurations and create visualizations to demonstrate how it would help developers visually reason through the implications of diversification, for example through visually doing change impact analysis.

Keywords: visualization, configuration complexity, Linux Kernel, callgraph, #ifdef, dependencies, vulnerabilities

Poster:

Akond Ashfaque Ur Rahman and Laurie Williams; “Security Practices in DevOps.” DOI:http://dx.doi.org/10.1145/2898375.2898383

Abstract: We summarize the contributions of this study as follows:

• A list of security practices and an analysis of how they are used in organizations that have adopted DevOps to integrate security; and

• An analysis that quantifies the levels of collaboration amongst the development teams, operations teams, and security teams within organizations that are using DevOps.

Keywords: (not provided)

Poster:

Bradley Schmerl, Jeffrey Gennari, Javier Cámara, David Garlan; “Raindroid – A System for Run-time Mitigation of Android Intent Vulnerabilities.” DOI:http://dx.doi.org/10.1145/2898375.2898389

Abstract: Modern frameworks are required to be extendable as well as secure. However, these two qualities are often at odds. In this poster we describe an approach that uses a combination of static analysis and run-time management, based on software architecture models, that can improve security while maintaining framework extendability. We implement a prototype of the approach for the Android platform. Static analysis identifies the architecture and communication patterns among the collection of apps on an Android device and which communications might be vulnerable to attack. Run-time mechanisms monitor these potentially vulnerable communication patterns, and adapt the system to either deny them, request explicit approval from the user, or allow them.

Keywords: software architecture, security, self-adaptation

Poster:

Daniel Smullen and Travis D. Breaux; “Modeling, Analyzing, and Consistency Checking Privacy Requirements using Eddy.” DOI:http://dx.doi.org/10.1145/2898375.2898381

Abstract: Eddy is a privacy requirements specification language that privacy analysts can use to express requirements over data practices: to collect, use, transfer and retain personal and technical information. The language uses a simple SQL-like syntax to express whether an action is permitted or prohibited, and to restrict those statements to particular data subjects and purposes. Eddy also supports the ability to express modifications on data, including perturbation, data append, and redaction. The Eddy specifications are compiled into Description Logic to automatically detect conflicting requirements and to trace data flows within and across specifications. Conflicts are highlighted, showing which rules are in conflict (expressing prohibitions and rights to perform the same action on equivalent interpretations of the same data, data subjects, or purposes), and what definitions caused the rules to conflict. Each specification can describe an organization's data practices, or the data practices of specific components in a software architecture.

Keywords: privacy; requirements engineering; data flow analysis; model checking

Poster:

Christopher Theisen and Laurie Williams; “Risk-Based Attack Surface Approximation.” DOI:http://dx.doi.org/10.1145/2898375.2898388

Abstract: Proactive security review and test efforts are a necessary component of the software development lifecycle. Since resource limitations often preclude reviewing, testing and fortifying the entire code base, prioritizing what code to review/test can improve a team’s ability to find and remove more vulnerabilities that are reachable by an attacker. One way that professionals perform this prioritization is the identification of the attack surface of software systems. However, identifying the attack surface of a software system is non-trivial. The goal of this poster is to present the concept of a risk-based attack surface approximation based on crash dump stack traces for the prioritization of security code rework efforts. For this poster, we will present results from previous efforts in the attack surface approximation space, including studies on its effectiveness in approximating security relevant code for Windows and Firefox. We will also discuss future research directions for attack surface approximation, including discovery of additional metrics from stack traces and determining how many stack traces are required for a good approximation.

Keywords: Stack traces; attack surface; crash dumps; security; metrics

Poster:

Olga Zielinska, Allaire Welk, Christopher B. Mayhorn, Emerson Murphy-Hill; “The Persuasive Phish: Examining the Social Psychological Principles Hidden in Phishing Emails.” DOI:http://dx.doi.org/10.1145/2898375.2898382

Abstract: Phishing is a social engineering tactic used to trick people into revealing personal information [Zielinska, Tembe, Hong, Ge, Murphy-Hill, & Mayhorn 2014]. As phishing emails continue to infiltrate users’ mailboxes, what social engineering techniques are the phishers using to successfully persuade victims into releasing sensitive information?

Cialdini’s [2007] six principles of persuasion (authority, social proof, liking/similarity, commitment/consistency, scarcity, and reciprocation) have been linked to elements of phishing emails [Akbar 2014; Ferreira & Lenzini 2015]; however, the findings have been conflicting. Authority and scarcity were found as the most common persuasion principles in 207 emails obtained from a Netherlands database [Akbar 2014], while liking/similarity was the most common principle in 52 personal emails available in Luxembourg and England [Ferreira et al. 2015]. The purpose of this study was to examine the persuasion principles present in emails available in the United States over a period of five years.

Two reviewers assessed eight hundred eighty-seven phishing emails from Arizona State University, Brown University, and Cornell University for Cialdini’s six principles of persuasion. Each email was evaluated using a questionnaire adapted from the Ferreira et al. [2015] study. There was an average agreement of 87% per item between the two raters.

Spearman’s Rho correlations were used to compare email characteristics over time. During the five year period under consideration (2010–2015), the persuasion principles of commitment/consistency and scarcity have increased over time, while the principles of reciprocation and social proof have decreased over time. Authority and liking/similarity revealed mixed results with certain characteristics increasing and others decreasing.

The commitment/consistency principle could be seen in the increase of emails referring to elements outside the email to look more reliable, such as Google Docs or Adobe Reader (rs(850) = .12, p = .001), while the scarcity principle could be seen in urgent elements that could encourage users to act quickly and may have had success in eliciting a response from users (rs(850) = .09, p = .01). Reciprocation elements, such as a requested reply, decreased over time (rs(850) = -.12, p = .001). Additionally, the social proof principle present in emails by referring to actions performed by other users also decreased (rs(850) = -.10, p = .01).

Two persuasion principles exhibited both an increase and decrease in their presence in emails over time: authority and liking/similarity. These principles could increase phishing rate success if used appropriately, but could also raise suspicions in users and decrease compliance if used incorrectly. Specifically, the source of the email, which corresponds to the authority principle, displayed an increase over time in educational institutes (rs(850) = .21, p < .001), but a decrease in financial institutions (rs(850) = -.18, p < .001). Similarly, the liking/similarity principle revealed an increase over time of logos present in emails (rs(850) = .18, p < .001) and decrease in service details, such as payment information (rs(850) = -.16, p < .001).

The results from this study offer a different perspective regarding phishing. Previous research has focused on the user aspect; however, few studies have examined the phisher perspective and the social psychological techniques they are implementing. Additionally, they have yet to look at the success of the social psychology techniques. Results from this study can be used to help to predict future trends and inform training programs, as well as machine learning programs used to identify phishing messages.

Keywords: Phishing, Persuasion, Social Engineering, Email, Security
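The rs values in this abstract are Spearman rank correlations between time and the presence of an email characteristic. As a minimal sketch of how such a coefficient is computed, the code below ranks both variables (averaging ranks for ties) and takes the Pearson correlation of the ranks. The function names and the six data points are hypothetical and are not drawn from the study's data set.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1   # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical data: year received, and whether the email contained a logo (1) or not (0).
years = [2010, 2011, 2012, 2013, 2014, 2015]
has_logo = [0, 0, 1, 0, 1, 1]
rho = spearman_rho(years, has_logo)
```

A positive rho on data like this corresponds to a characteristic becoming more common over time; the significance tests reported in the abstract are a separate step not shown here.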

Poster:

Wenyu Ren, Klara Nahrstedt, and Tim Yardley; “Operation-Level Traffic Analyzer Framework for Smart Grid.” DOI:http://dx.doi.org/10.1145/2898375.2898396

Abstract: The Smart Grid control systems need to be protected from internal attacks within the perimeter. In the Smart Grid, the Intelligent Electronic Devices (IEDs) are resource-constrained devices that do not have the ability to provide security analysis and protection by themselves. In addition, the commonly used industrial control system protocols offer little security guarantee. To guarantee security inside the system, analysis and inspection of both internal network traffic and device status need to be placed close to IEDs to provide timely information to power grid operators. For that, we have designed a unique, extensible and efficient operation-level traffic analyzer framework. The timing evaluation of the analyzer overhead confirms efficiency under Smart Grid operational traffic.

Keywords: Smart Grid, network security, traffic analysis

(ID#: 16-9552)

Note:

Articles listed on these pages have been found on publicly available internet pages and are cited with links to those pages. Some of the information included herein has been reprinted with permission from the authors or data repositories. Direct any requests via Email to news@scienceofsecurity.net for removal of the links or modifications to specific citations. Please include the ID# of the specific citation in your correspondence.